What is Hunyuan-GameCraft?

Hunyuan-GameCraft is a framework for generating high-dynamic, interactive game video, jointly developed by Tencent’s Hunyuan team and Huazhong University of Science and Technology. It unifies keyboard and mouse inputs into a shared camera representation space, which enables fine-grained motion control and supports complex interactive inputs. A hybrid history-conditioned training strategy lets the model extend video autoregressively while preserving scene information and maintaining long-term temporal consistency. Model distillation significantly speeds up inference, making the framework suitable for real-time deployment in complex interactive environments. Trained on a large-scale dataset of AAA game footage, the model is reported to deliver high visual fidelity, realism, and controllable motion, and to outperform existing models.

Key Features of Hunyuan-GameCraft

-

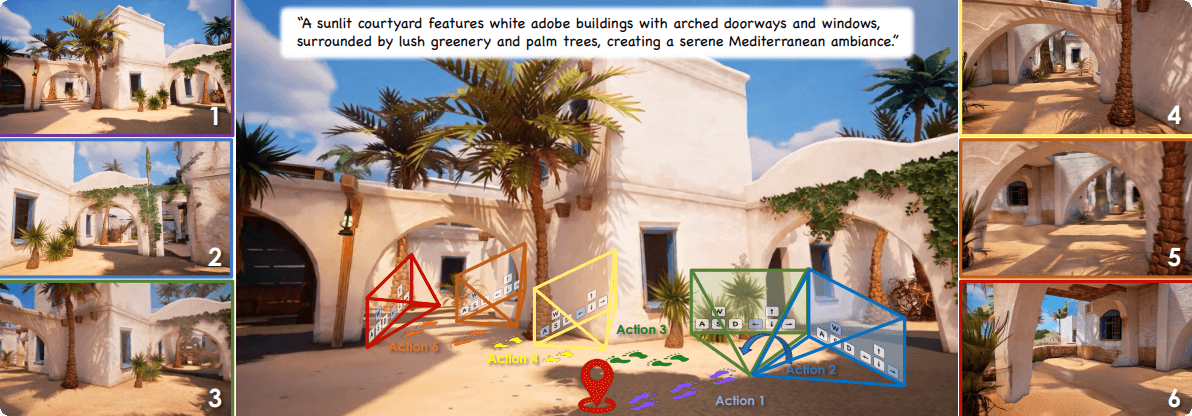

High-Dynamic Interactive Video Generation: Generates high-dynamic game video content from a single image and prompts, allowing real-time user control via keyboard and mouse inputs.

- Fine-Grained Motion Control: Maps standard keyboard and mouse inputs into a shared camera representation space, supporting fine-grained control over movement speed and rotation angle.

- Long-Term Video Generation: Enables generation of temporally coherent long videos while preserving historical scene information to prevent scene collapse.

- Real-Time Interaction: Achieves high inference speed with low latency, supporting seamless real-time user interactions.

-

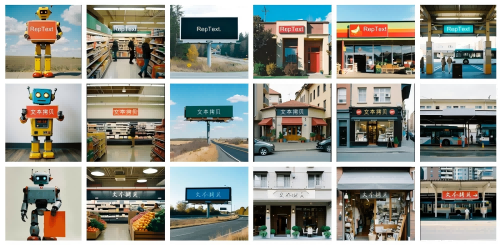

High Visual Fidelity: Trained on a large-scale dataset from AAA games, producing videos with high realism and diverse artistic styles suitable for various game environments.
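The fine-grained motion control described above can be pictured with a minimal sketch. Everything named here is an illustrative assumption rather than Hunyuan-GameCraft's actual interface: the key bindings, the five-component motion vector (three translation axes plus yaw and pitch), the `CameraAction` type, and the `keys_to_action` and `interpolate` helpers. The real framework uses a learned action encoder, which this sketch does not attempt to reproduce.

```python
from dataclasses import dataclass

# Hypothetical bindings: each key contributes a unit direction in a
# continuous camera-motion space laid out as (dx, dy, dz, yaw, pitch).
KEY_TO_MOTION = {
    "W": (0.0, 0.0, 1.0, 0.0, 0.0),      # move forward
    "S": (0.0, 0.0, -1.0, 0.0, 0.0),     # move backward
    "A": (-1.0, 0.0, 0.0, 0.0, 0.0),     # strafe left
    "D": (1.0, 0.0, 0.0, 0.0, 0.0),      # strafe right
    "LEFT": (0.0, 0.0, 0.0, -1.0, 0.0),  # turn left (yaw)
    "RIGHT": (0.0, 0.0, 0.0, 1.0, 0.0),  # turn right (yaw)
    "UP": (0.0, 0.0, 0.0, 0.0, 1.0),     # look up (pitch)
    "DOWN": (0.0, 0.0, 0.0, 0.0, -1.0),  # look down (pitch)
}


@dataclass(frozen=True)
class CameraAction:
    translation: tuple  # (dx, dy, dz)
    rotation: tuple     # (yaw, pitch)


def keys_to_action(pressed, move_speed=1.0, turn_speed=1.0):
    """Sum the directions of all pressed keys, then scale by speed."""
    total = [0.0] * 5
    for key in pressed:
        for i, value in enumerate(KEY_TO_MOTION[key.upper()]):
            total[i] += value
    translation = tuple(move_speed * v for v in total[:3])
    rotation = tuple(turn_speed * v for v in total[3:])
    return CameraAction(translation, rotation)


def interpolate(a, b, t):
    """Linearly blend two actions; t=0 gives a, t=1 gives b."""
    def lerp(x, y):
        return tuple((1 - t) * p + t * q for p, q in zip(x, y))
    return CameraAction(lerp(a.translation, b.translation),
                        lerp(a.rotation, b.rotation))


forward = keys_to_action(["W"])
right = keys_to_action(["D"])
halfway = interpolate(forward, right, 0.5)
print(halfway)
```

Because discrete key presses become points in a continuous space, speed is just a scale factor and transitions between actions can be blended smoothly, which is the property the shared camera representation is meant to provide.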

Technical Principles of Hunyuan-GameCraft

- Unified Action Representation: Keyboard and mouse inputs (e.g., W, A, S, D, arrow keys) are mapped to a continuous camera representation space. A lightweight action encoder encodes the camera trajectory into feature vectors, enabling smooth motion interpolation.

- Hybrid Historical Conditioning Strategy: Combines historical context integration with mask indicators to extend video sequences autoregressively. At each autoregressive step, previously denoised chunks serve as history that guides the denoising of the new noisy latents, preserving scene information and mitigating error accumulation.

- Model Distillation: Employs a Phased Consistency Model (PCM) for distillation, compressing the original diffusion process and classifier-free guidance into a compact 8-step consistency model. This substantially increases inference speed and reduces computational cost.

- Large-Scale Dataset Training: The model is trained on over 1 million gameplay recordings from more than 100 AAA titles to ensure broad coverage and diversity. It is then fine-tuned on a carefully annotated synthetic dataset to improve accuracy and control precision.
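The hybrid historical conditioning idea can be sketched as a toy autoregressive loop. This is a simplified illustration under stated assumptions, not the framework's implementation: latents are plain floats instead of tensors, `toy_denoise` is a stand-in that only pulls noisy values toward the history mean, and the names `build_step_input`, `toy_denoise`, and `extend_video` are hypothetical. The default of 8 steps merely echoes the 8-step distilled model mentioned above. What the sketch does show is the mask indicator: history positions are marked 1 and stay fixed, while new positions are marked 0 and get denoised.

```python
import random


def build_step_input(history, num_new, rng):
    """Concatenate clean history latents with fresh noise and a binary mask.

    Mask value 1 marks history (kept fixed); 0 marks positions to denoise.
    """
    noise = [rng.gauss(0.0, 1.0) for _ in range(num_new)]
    latents = list(history) + noise
    mask = [1] * len(history) + [0] * num_new
    return latents, mask


def toy_denoise(latents, mask, steps=8):
    """Stand-in denoiser (not a diffusion model).

    Moves each unmasked latent halfway toward the history mean per step
    and leaves history positions untouched. Requires non-empty history.
    """
    history = [x for x, m in zip(latents, mask) if m == 1]
    target = sum(history) / len(history)
    out = list(latents)
    for _ in range(steps):
        out = [x if m == 1 else x + 0.5 * (target - x)
               for x, m in zip(out, mask)]
    return out


def extend_video(first_chunk, num_chunks, chunk_len, history_len, seed=0):
    """Grow a latent sequence chunk by chunk, conditioning on recent history.

    Assumes history_len >= 1 and a non-empty first_chunk.
    """
    rng = random.Random(seed)
    video = list(first_chunk)
    for _ in range(num_chunks):
        history = video[-history_len:]
        latents, mask = build_step_input(history, chunk_len, rng)
        denoised = toy_denoise(latents, mask)
        # Only the newly generated positions are appended to the video.
        video.extend(denoised[len(history):])
    return video


video = extend_video([1.0, 1.0, 1.0, 1.0], num_chunks=3,
                     chunk_len=4, history_len=2)
print(len(video))
```

Each new chunk sees only a window of already generated content, so the sequence can be extended indefinitely while the fixed history anchors the scene.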

Project Links

- Official Website: https://hunyuan-gamecraft.github.io/

- arXiv Technical Paper: https://arxiv.org/pdf/2506.17201

Application Scenarios for Hunyuan-GameCraft

- Game Video Generation: Quickly generates game trailers, demo videos, and in-game cutscenes, helping developers validate concepts and designs early in development.

- Game Testing: Automates the generation of game scenes and interactions for performance testing and user experience evaluation, reducing manual testing workload.

- Game Content Expansion: Generates new levels, scenes, and interactive elements for existing games, extending game lifespan and enhancing player engagement.

- Interactive Video Content: Powers interactive videos for streaming platforms and social media, where viewers steer the video with input commands, offering a novel viewing experience.

- Virtual and Augmented Reality (VR/AR): Generates immersive and interactive content for VR/AR applications, enhancing user engagement and realism.