Magenta-RealTime: AI music creation enters the ‘real-time’ era

What is Magenta-RealTime?

Magenta-RealTime is a new real-time music generation model from Google's Magenta team, designed to deliver efficient, responsive, and interactive music creation. As a major milestone in the Magenta project's ongoing research into AI for music, it pairs a lightweight architecture with efficient audio encoding to generate music faster than it plays back. The model can generate high-fidelity music on the fly from text prompts or reference audio, and it supports continuous output with real-time control, which makes it especially suitable for live performance, interactive content creation, and education.

Key Features of Magenta-RealTime

- Low-Latency Real-Time Music Generation: Generates music at a real-time factor of 1.6 (2 seconds of audio in 1.25 seconds), so each new chunk is ready before the previous one finishes playing and creative feedback is near-instant.

- Multimodal Prompt Control: Accepts both text and audio prompts, allowing users to specify musical styles, tempo, mood, and more, enabling personalized generation.

-

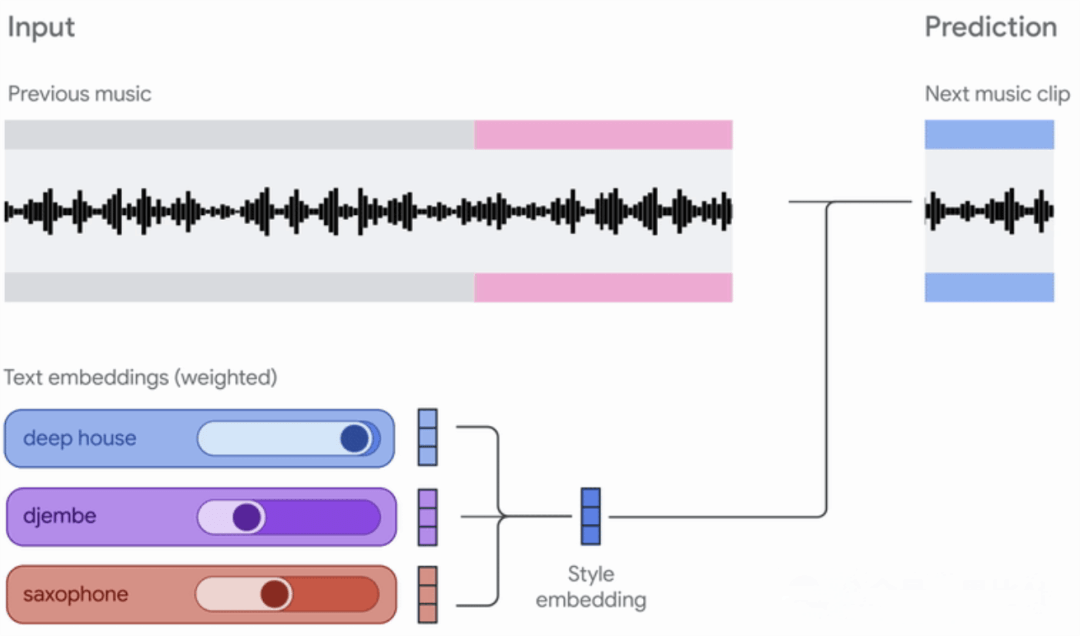

Streamed Generation with Context Memory: Uses a sliding-window approach to generate music in 2-second chunks, with each chunk conditioned on the preceding 10 seconds of context for coherent continuity.

- High-Fidelity Audio Output: Generates 48 kHz stereo audio using SpectroStream encoding, aiming for quality comparable to CD audio (which is sampled at 44.1 kHz).

- Open Source and Deployable: Provides full model code, pretrained weights, and Colab demos, making it easy for developers to experiment, fine-tune, and deploy locally.
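The timing figures in the feature list can be sanity-checked with simple arithmetic. The sketch below uses only numbers stated above (2-second chunks, 1.25 s of compute per chunk, a 10-second context, 48 kHz stereo output); the constant and function names are hypothetical, and the sample count refers to raw PCM audio before any encoding.

```python
# Back-of-the-envelope numbers for the streaming setup described above.
# Constants are the figures quoted in the article; helper names are hypothetical.

SAMPLE_RATE_HZ = 48_000       # 48 kHz output
CHANNELS = 2                  # stereo
CHUNK_SECONDS = 2.0           # length of each generated chunk
CONTEXT_SECONDS = 10.0        # audio context each chunk is conditioned on
GEN_SECONDS_PER_CHUNK = 1.25  # wall-clock time to generate one chunk


def real_time_factor(audio_seconds: float, compute_seconds: float) -> float:
    """Seconds of audio produced per second of compute (> 1 means faster than playback)."""
    return audio_seconds / compute_seconds


def samples_per_chunk(seconds: float, rate: int, channels: int) -> int:
    """Total raw PCM samples (all channels combined) in a chunk of the given duration."""
    return round(seconds * rate) * channels


rtf = real_time_factor(CHUNK_SECONDS, GEN_SECONDS_PER_CHUNK)
headroom = CHUNK_SECONDS - GEN_SECONDS_PER_CHUNK  # slack per chunk before playback would stall
chunk_samples = samples_per_chunk(CHUNK_SECONDS, SAMPLE_RATE_HZ, CHANNELS)
context_chunks = int(CONTEXT_SECONDS // CHUNK_SECONDS)

print(f"real-time factor: {rtf:.2f}")          # real-time factor: 1.60
print(f"headroom per chunk: {headroom:.2f} s")  # headroom per chunk: 0.75 s
print(f"samples per chunk: {chunk_samples}")    # samples per chunk: 192000
print(f"chunks of context: {context_chunks}")   # chunks of context: 5
```

Under these assumptions, a real-time factor above 1 leaves about 0.75 s of slack per chunk, and the 10-second context spans the five most recent chunks.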

Technical Principles Behind Magenta-RealTime

- Chunkwise Autoregressive Modeling: The model generates music in sequential chunks of about 2 seconds, each conditioned on the preceding output, so the music progresses smoothly from one chunk to the next.

- SpectroStream Audio Encoding: Converts high-fidelity 48kHz audio into discrete tokens, balancing quality and efficiency for low-latency generation.

- MusicCoCa Multimodal Embedding: Maps text and audio prompts into a shared embedding space, producing a single style embedding that guides the music generation process.

- Optimized Inference Speed: Thanks to a lightweight model and efficient chunk processing, Magenta-RealTime generates audio faster than real time even on free-tier Google Colab TPUs.
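The chunkwise autoregressive loop with a 10-second sliding context can be sketched in plain Python. This is a minimal sketch, not the Magenta-RealTime implementation: `toy_model` is a hypothetical stand-in for the network, `style` is an integer standing in for a style embedding, and the 25 frames-per-second token rate and 1024-entry vocabulary are assumptions chosen only to make the numbers concrete.

```python
from collections import deque
from typing import Callable, Deque, List, Sequence, Tuple

# Assumed values for illustration only; the article gives no token rate or vocabulary size.
FRAMES_PER_SECOND = 25                   # hypothetical codec frames per second of audio
CHUNK_FRAMES = 2 * FRAMES_PER_SECOND     # ~2-second chunks
CONTEXT_FRAMES = 10 * FRAMES_PER_SECOND  # 10-second sliding context
VOCAB_SIZE = 1024                        # hypothetical token vocabulary

# A "model" takes (context tokens, style id, number of frames) and returns new tokens.
Model = Callable[[Sequence[int], int, int], List[int]]


def toy_model(context: Sequence[int], style: int, n_frames: int) -> List[int]:
    """Hypothetical stand-in for the network: keeps counting on from the last context token."""
    start = context[-1] if context else style
    return [(start + i + 1) % VOCAB_SIZE for i in range(n_frames)]


def stream(model: Model, style: int, n_chunks: int) -> Tuple[List[List[int]], List[int]]:
    """Generate n_chunks chunks, each conditioned only on the most recent CONTEXT_FRAMES tokens."""
    context: Deque[int] = deque(maxlen=CONTEXT_FRAMES)  # oldest tokens drop off automatically
    chunks: List[List[int]] = []
    for _ in range(n_chunks):
        chunk = model(list(context), style, CHUNK_FRAMES)
        context.extend(chunk)  # slide the window forward by one chunk
        chunks.append(chunk)   # a live system would decode and play this chunk now
    return chunks, list(context)


chunks, final_context = stream(toy_model, style=7, n_chunks=8)
print(len(chunks), len(chunks[0]), len(final_context))  # 8 50 250
```

Using a `deque` with `maxlen` keeps the conditioning window bounded: however long the session runs, the model in this sketch never sees more than 10 seconds of history, so per-chunk work stays constant.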
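The article does not describe SpectroStream's internals. Neural audio codecs in the SoundStream family typically turn each frame into discrete tokens with residual vector quantization (RVQ), where every stage quantizes the error left by the previous stages; assuming SpectroStream follows a similar design, the toy sketch below illustrates the idea. It is a generic RVQ example, not SpectroStream's encoder, and the 2-D codebooks and input frame are made up.

```python
from typing import List

import numpy as np


def rvq_encode(x: np.ndarray, codebooks: List[np.ndarray]) -> List[int]:
    """Residual VQ: each stage quantizes whatever error the previous stages left behind."""
    residual = np.asarray(x, dtype=float)
    indices: List[int] = []
    for cb in codebooks:                               # cb has shape (num_codes, dim)
        dists = np.sum((cb - residual) ** 2, axis=1)   # squared distance to every codeword
        idx = int(np.argmin(dists))
        indices.append(idx)
        residual = residual - cb[idx]                  # the next stage models what is left over
    return indices


def rvq_decode(indices: List[int], codebooks: List[np.ndarray]) -> np.ndarray:
    """Reconstruction is the sum of the chosen codeword from each stage."""
    return np.sum([cb[i] for cb, i in zip(codebooks, indices)], axis=0)


# Made-up 2-D codebooks: a coarse first stage and a finer second stage.
codebooks = [
    np.array([[0.0, 0.0], [1.0, 1.0], [-1.0, -1.0], [1.0, -1.0]]),
    np.array([[0.0, 0.0], [0.25, 0.0], [0.0, 0.25], [-0.25, 0.0]]),
]
frame = np.array([1.2, 0.9])  # one hypothetical feature frame

codes = rvq_encode(frame, codebooks)
for depth in (1, 2):
    recon = rvq_decode(codes[:depth], codebooks[:depth])
    err = float(np.sum((frame - recon) ** 2))
    print(depth, codes[:depth], recon, round(err, 4))
```

Each frame becomes a short list of integers, one per stage, and adding stages lowers the reconstruction error at the cost of more tokens. This is one concrete form of the quality/efficiency trade-off the SpectroStream bullet mentions.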

Project Links

- Official Page: https://magenta.withgoogle.com/magenta-realtime
- GitHub Repository: https://github.com/magenta/magenta-realtime
- Hugging Face Model Card: https://huggingface.co/google/magenta-realtime
- arXiv Paper: https://arxiv.org/abs/2506.17201

Application Scenarios for Magenta-RealTime

- Live Improvisation and Music Performance: Artists can use Magenta-RealTime on stage in real time, blending human creativity with AI-generated accompaniment and variations.

- Interactive Content and Game Soundtracks: Dynamically generates background music that adapts to user inputs or game events, suitable for immersive entertainment experiences.

- Music Education and Style Exploration: Enables students to experiment with musical styles, harmony, and rhythm using intuitive prompts, deepening musical understanding.

- Creative Assistance for Musicians: Provides melodic ideas, chord progressions, or harmonic extensions as a real-time co-creator, lowering the barrier to entry.

- Accessible Music Creation: Empowers non-musicians or users with disabilities to create personalized music using voice or text, promoting inclusivity.
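For the game-soundtrack scenario, one plausible way to make music follow game events, building on the style-embedding idea behind MusicCoCa, is to mix several prompt embeddings with weights driven by game state. This is a hypothetical sketch: the 4-dimensional vectors stand in for real MusicCoCa embeddings, `blend_styles` and `weights_for_intensity` are illustrative names, and it assumes embeddings can be mixed linearly and should be unit-normalized, neither of which the article states.

```python
from typing import Dict

import numpy as np

# Hypothetical prompt embeddings; real vectors would come from the MusicCoCa encoder.
STYLE_EMBEDDINGS: Dict[str, np.ndarray] = {
    "calm ambient pads": np.array([1.0, 0.0, 0.0, 0.0]),
    "driving synthwave": np.array([0.0, 1.0, 0.0, 0.0]),
    "epic orchestral": np.array([0.0, 0.0, 1.0, 0.0]),
}


def blend_styles(weights: Dict[str, float]) -> np.ndarray:
    """Weighted average of prompt embeddings, rescaled to unit length (an assumption)."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    mix = sum(w * STYLE_EMBEDDINGS[name] for name, w in weights.items()) / total
    return mix / np.linalg.norm(mix)


def weights_for_intensity(intensity: float) -> Dict[str, float]:
    """Map a game 'intensity' in [0, 1] to prompt weights: calm -> synthwave -> orchestral."""
    t = min(max(intensity, 0.0), 1.0)  # clamp out-of-range inputs
    return {
        "calm ambient pads": max(0.0, 1.0 - 2.0 * t),
        "driving synthwave": 1.0 - abs(2.0 * t - 1.0),
        "epic orchestral": max(0.0, 2.0 * t - 1.0),
    }


for intensity in (0.0, 0.25, 0.5, 1.0):
    print(intensity, np.round(blend_styles(weights_for_intensity(intensity)), 3))
```

In a chunked streaming loop like the one the article describes, the blended embedding could be recomputed before each 2-second chunk, so style changes would take effect at chunk boundaries while the preceding context carries the music across the transition.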
