Meta declares war on OpenAI! Launching a powerful ChatGPT alternative app with voice interaction integrated into AI glasses, and offering free access to the Llama API

Free trial of the Llama API is now available. The independent app has been officially launched

Meta and OpenAI are having a full-blown showdown!

At the inaugural LlamaCon Developer Conference, Meta officially unveiled Meta AI App, a smart assistant designed to rival ChatGPT, and announced the preview release of its official Llama API service for developers.

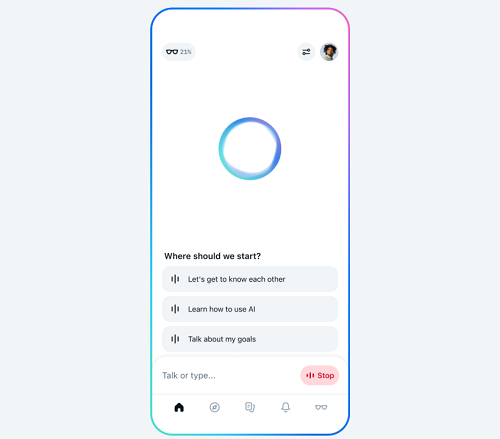

Meta AI App is an intelligent assistant powered by the Llama model, designed to understand user preferences through social media accounts and remember conversational context. Similar to ChatGPT, it supports both text and voice interactions, with the added feature of full-duplex voice communication (enabling simultaneous listening and speaking, real-time interruptions, and parallel processing for seamless conversations).

The Meta AI App can serve as a companion application for the Meta Ray-Ban AI glasses. Users can have conversations with the Meta AI App directly through these glasses.

The Llama API, released at the same event, offers multiple models including Llama 4. This API is compatible with the OpenAI SDK, allowing products using OpenAI API services to seamlessly switch to the Llama API.
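As a rough sketch of what OpenAI SDK compatibility implies in practice: the request body keeps the OpenAI chat-completions shape, and only the base URL, API key, and model name change. The example below builds (but does not send) such a request with the Python standard library. The Llama base URL, the model names, the keys, and the helper `build_chat_request` are illustrative placeholders, not values from the announcement; the real ones are in the Llama API documentation.

```python
import json
import urllib.request

OPENAI_BASE_URL = "https://api.openai.com/v1"
# Assumed placeholder, NOT the real Llama API endpoint; check the official docs.
LLAMA_BASE_URL = "https://api.llama.example/compat/v1"


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat-completions request without sending it."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Switching providers changes only the base URL, key, and model name
# (all three values here are hypothetical).
openai_req = build_chat_request(OPENAI_BASE_URL, "OPENAI_KEY", "some-openai-model", "Hello")
llama_req = build_chat_request(LLAMA_BASE_URL, "LLAMA_KEY", "some-llama-4-model", "Hello")
```

Because the message payload is identical in both requests, an application that already speaks the OpenAI wire format would, under these assumptions, need only a configuration change rather than a code rewrite.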

Meanwhile, Meta has provided tools for fine-tuning and evaluation in the new API. Developers can create customized models that meet their own needs and also use the fast inference services provided by Cerebras and Groq, two AI chip startups.

It is worth noting that in past years, Llama releases were only one part of the Meta Connect developer conference. Holding a dedicated event this time underscores the weight Meta places on its AI business. Meta CEO Mark Zuckerberg did not appear at the launch event itself but is scheduled to take part in two conversations afterward.

As for pricing, developers can apply for access to the free preview version of the Llama API. The API may move to a paid model in the future, but no price list has been released yet. Both the Meta AI app and the web version are already online and free to use.

Meta AI web version: https://www.meta.ai/

Llama API documentation: https://llama.developer.meta.com/docs/overview

Llama model downloads have exceeded 1.2 billion, and the Meta AI App focuses on voice interaction

Chris Cox, Chief Product Officer of Meta, shared the latest progress of Meta’s open-source AI at the LlamaCon conference. Cox said that two years ago, there were doubts within Meta about the commercialization prospects and priority of open source, but now open-source AI has become the general trend in the industry.

Two and a half months ago, Meta announced that Llama and its derivative models had achieved 1 billion downloads. Today, this number has rapidly grown to 1.2 billion.

On the open-source platform Hugging Face, most Llama downloads come from derivative models. Thousands of developers have contributed tens of thousands of derivative models, which are downloaded hundreds of thousands of times every month.

Currently, the Llama model has been applied to many apps under Meta, including WhatsApp, Instagram, and so on. In these scenarios, the Llama model has undergone customized processing to meet the requirements of specific use cases, such as conversationality, conciseness (especially in mobile scenarios), and a sense of humor.

To offer a more diverse range of AI experiences, Meta has launched an independent intelligent assistant app built on Llama: Meta AI.

The Meta AI App places great emphasis on the voice interaction experience, offering low-latency and expressive voice capabilities. Additionally, Meta AI can connect to users’ Facebook and Instagram accounts. By analyzing their interaction history, it can roughly understand their interests and remember relevant user information.

This app comes with a full-duplex voice experimental mode. The relevant model is trained on natural conversational data between people, enabling it to provide more natural audio output. “Full-duplex” means that the communication channel is open in both directions. Users can hear natural interruptions, laughter, etc., just like in a real phone conversation.

The full-duplex voice experimental mode is still in its early stages. It lacks capabilities such as tool use and web search, so it cannot draw on up-to-date information about current events.

The Meta AI App incorporates social elements, allowing users to share prompts and generation results on the Discover page. This app can be used in conjunction with Meta Ray-Ban smart glasses. Users will be able to start a conversation on the glasses and then access it from the “History” tab of the application or the web to continue from where they left off.

With just one line of code, you can call the model and fine-tune it online

At the conference, Manohar Paluri, Meta’s vice president in charge of the Llama business, and Angela Fan, a member of the Llama research team, jointly released the Llama API service hosted by Meta.

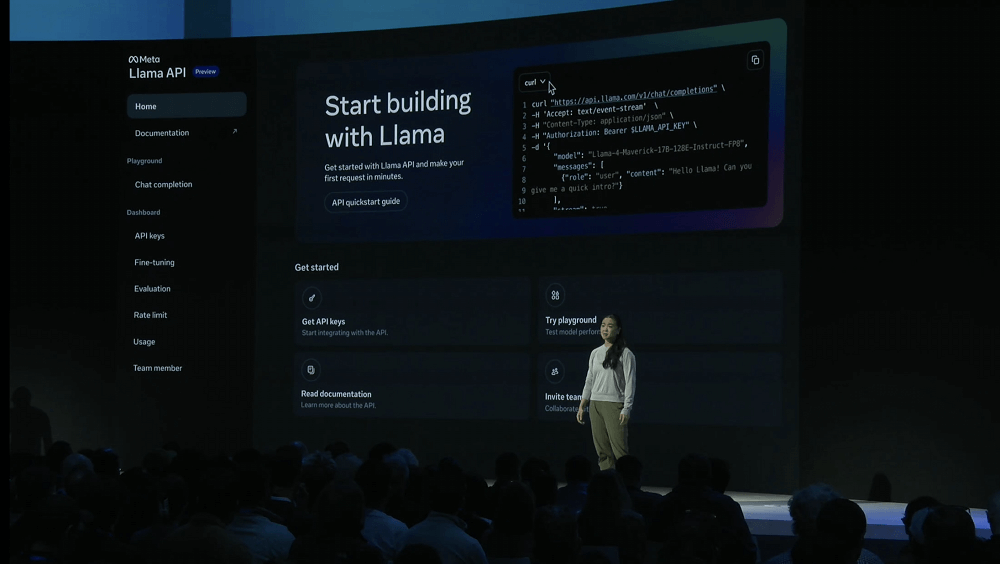

The Llama API offers simple one-click API key creation, and you can make a call with just one line of code. It provides lightweight Python and TypeScript SDKs for developers and is also compatible with the OpenAI SDK.

On the API service web page, Meta provides an interactive playground. Developers can try different models in it and change model settings, such as system instructions or temperature, to test different configurations.
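The same kind of exploration can be scripted. The sketch below, which is only an illustration and not part of any Meta SDK, enumerates every combination of model, system instruction, and temperature so each configuration can be tried in turn. The model names, prompts, and the helper `build_configs` are hypothetical.

```python
from itertools import product


def build_configs(models, system_prompts, temperatures):
    """Return one settings dict per (model, system prompt, temperature) combination."""
    return [
        {"model": m, "system": s, "temperature": t}
        for m, s, t in product(models, system_prompts, temperatures)
    ]


configs = build_configs(
    models=["model-a", "model-b"],                  # hypothetical model names
    system_prompts=["Be concise.", "Be playful."],  # example system instructions
    temperatures=[0.0, 0.7],                        # lower values are more deterministic
)

for cfg in configs:
    print(cfg)
```

Each dictionary could then be mapped onto whatever request format the chosen SDK expects.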

In addition, developers can use multiple preview features, including structured responses based on JSON, tool invocation, etc.
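To make the idea of JSON-based structured responses concrete, here is a minimal sketch. It assumes the preview feature follows the OpenAI-style `response_format` convention (plausible given the stated SDK compatibility, but not confirmed by the article). The schema, the sample reply, and the helper `parse_structured_reply` are made up for illustration, and the validation covers only required keys and two primitive types.

```python
import json

# Hypothetical JSON Schema describing the reply we want from the model.
EVENT_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "year": {"type": "integer"},
    },
    "required": ["name", "year"],
}

# OpenAI-style request fragment asking for schema-constrained output
# (assumed field names; check the Llama API docs before relying on them).
response_format = {
    "type": "json_schema",
    "json_schema": {"name": "event", "schema": EVENT_SCHEMA},
}

_PY_TYPES = {"string": str, "integer": int}


def parse_structured_reply(text: str, schema: dict) -> dict:
    """Parse a JSON reply and check required keys and primitive types."""
    data = json.loads(text)
    for key in schema["required"]:
        if key not in data:
            raise ValueError(f"missing field: {key}")
    for key, spec in schema["properties"].items():
        if key in data and not isinstance(data[key], _PY_TYPES[spec["type"]]):
            raise ValueError(f"wrong type for field: {key}")
    return data


# A made-up reply standing in for real model output.
reply = '{"name": "demo", "year": 2024}'
event = parse_structured_reply(reply, EVENT_SCHEMA)
```

The benefit of a structured response is that downstream code can consume `event["year"]` directly instead of extracting values from free-form text.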

Paluri believes that customization is where open-source models should truly take the lead. The Llama API offers convenient fine-tuning services and currently supports customization of Llama 3.3 8B.

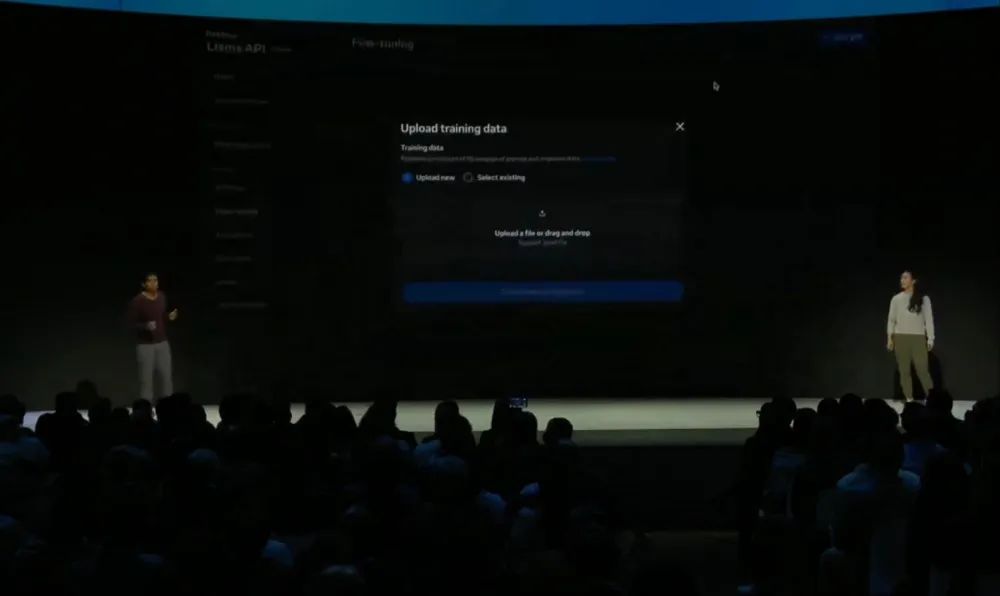

Developers can upload data for fine-tuning on the fine-tuning tab, or generate training data using the synthetic data toolkit provided by Meta.

▲ Llama API Model Fine-Tuning Interface

After the data upload is completed, developers can set aside part of the data to evaluate the performance of the customized model. On the Llama API platform, the training progress can be viewed at any time. The fine-tuned model can be downloaded and used by developers in any scenario.
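Holding out part of the data for evaluation is a standard practice that can be sketched independently of any platform. The example below is an illustration under stated assumptions, not the Llama API's own mechanism: the record format (`prompt`/`completion` pairs), the 20% split, and the helper `split_train_eval` are all hypothetical.

```python
import random


def split_train_eval(records, eval_fraction=0.1, seed=0):
    """Shuffle a copy of the records and hold out a fraction for evaluation."""
    if not 0.0 < eval_fraction < 1.0:
        raise ValueError("eval_fraction must be between 0 and 1")
    shuffled = list(records)
    # A fixed seed keeps the split reproducible across runs.
    random.Random(seed).shuffle(shuffled)
    n_eval = max(1, int(len(shuffled) * eval_fraction))
    return shuffled[n_eval:], shuffled[:n_eval]


# Hypothetical fine-tuning records; a real dataset would follow the platform's format.
records = [{"prompt": f"question {i}", "completion": f"answer {i}"} for i in range(20)]
train, held_out = split_train_eval(records, eval_fraction=0.2)
```

The model is tuned only on `train`, and its outputs on `held_out` give an estimate of how it behaves on examples it has not seen.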

At the conference, Meta announced its cooperation with Cerebras and Groq. These two companies can provide the computing power support required for the Llama 4 API service, and their computing power can achieve faster inference speeds.

Conclusion: Llama Explores New Commercialization Pathways

Since the release of Llama 1 in 2023, the Llama series has gone through four major versions, with dozens of models of different parameter scales and architectures being open-sourced. However, models with hundreds of billions or even trillions of parameters require huge investments. According to foreign media reports, over the past year Meta has reached out to companies such as Microsoft and Amazon, hoping to establish partnerships to share the development costs.

This time, the release of the Llama API and the Meta AI App marks that Meta has begun to actively explore new paths for the commercialization of the open-source Llama series models. Although the relevant services are currently free of charge, in the future, Meta is expected to use the open-source models as an entry point to guide enterprises and users to use associated cloud services or achieve commercial monetization through the App. After telling the story of technological inclusiveness and innovation, commercialization may be a common issue that all open-source model vendors need to face.
