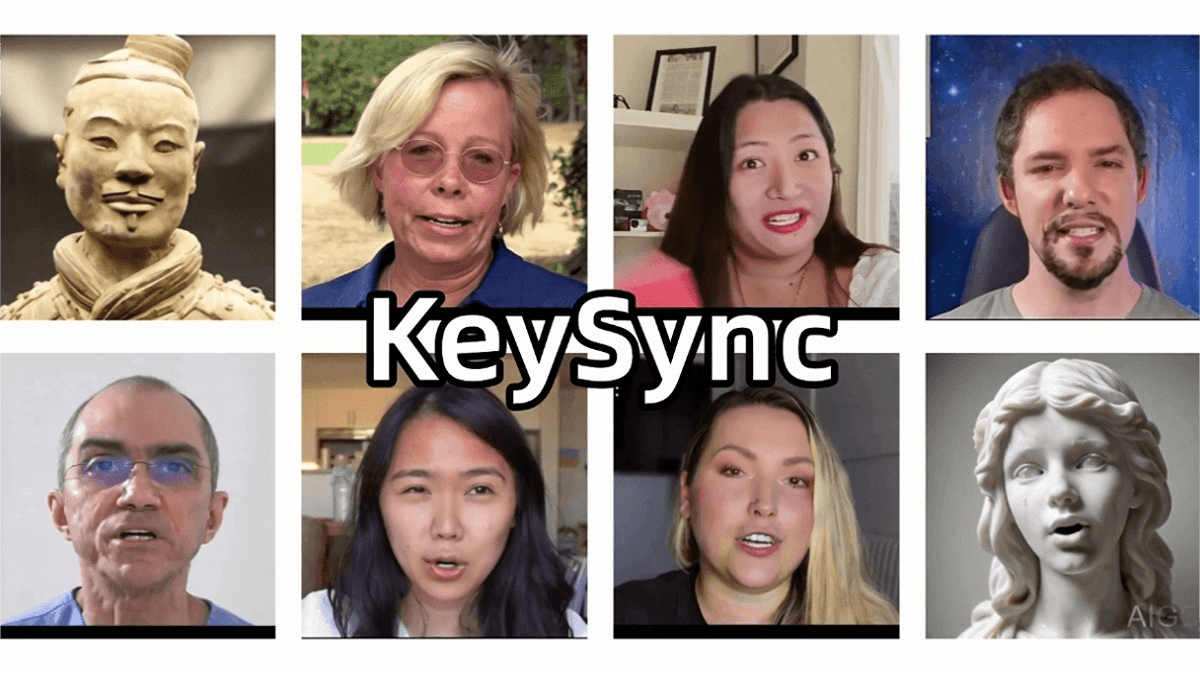

KeySync – A lip-sync framework jointly developed by Imperial College London and University of Wrocław

What is KeySync?

KeySync is a high-resolution lip-sync framework developed by Imperial College London and the University of Wrocław, designed to align the mouth movements in a video with input audio. It uses a two-stage architecture: it first generates keyframes that capture the essential lip movements from the audio, and then interpolates smooth transitional frames between them. A novel masking strategy reduces facial expression leakage from the input video, and a video segmentation model handles occlusions automatically. The authors report that KeySync outperforms existing methods in visual quality, temporal consistency, and lip-sync accuracy, which makes it well suited to real-world tasks such as automatic dubbing.
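The two-stage idea can be illustrated with a toy sketch. This is not KeySync's code: `pick_keyframe_indices`, `two_stage_generate`, the `stride` parameter, and the `keyframe_model` callable are hypothetical names, frames are stand-in lists of floats, and plain linear interpolation replaces the learned interpolation stage purely to show the control flow.

```python
from typing import Callable, List, Optional, Sequence

Frame = List[float]  # toy stand-in for an image or latent tensor


def pick_keyframe_indices(num_frames: int, stride: int) -> List[int]:
    """Evenly spaced keyframe positions, always including the last frame."""
    if num_frames <= 0 or stride <= 0:
        raise ValueError("num_frames and stride must be positive")
    indices = list(range(0, num_frames, stride))
    if indices[-1] != num_frames - 1:
        indices.append(num_frames - 1)
    return indices


def lerp(a: Frame, b: Frame, t: float) -> Frame:
    """Linear blend of two frames (assumed stand-in for a learned interpolator)."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def two_stage_generate(
    audio_feats: Sequence[Frame],
    stride: int,
    keyframe_model: Callable[[Frame], Frame],
    interpolate: Callable[[Frame, Frame, float], Frame] = lerp,
) -> List[Frame]:
    """Stage 1: generate sparse keyframes. Stage 2: fill the frames between them."""
    n = len(audio_feats)
    key_idx = pick_keyframe_indices(n, stride)

    # Stage 1: one keyframe per selected audio position.
    frames: List[Optional[Frame]] = [None] * n
    for i in key_idx:
        frames[i] = keyframe_model(audio_feats[i])

    # Stage 2: fill every gap between consecutive keyframes.
    for left, right in zip(key_idx, key_idx[1:]):
        for i in range(left + 1, right):
            t = (i - left) / (right - left)
            frames[i] = interpolate(frames[left], frames[right], t)

    return [f for f in frames if f is not None]
```

With a stride of 4 and 10 frames, this sketch would place keyframes at positions 0, 4, 8, and 9, then fill the remaining positions from their two nearest keyframes.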

Key Features of KeySync

- High-Resolution Lip Synchronization: Generates 512×512 high-definition videos precisely aligned with input audio, suitable for practical use cases.
- Reduced Facial Expression Leakage: Minimizes unwanted expression artifacts from the input video to improve synchronization fidelity.
- Occlusion Handling: Automatically detects and excludes occluding elements (e.g., hands, objects) during inference to maintain natural visual results.
- Enhanced Visual Quality: Achieves superior performance in multiple quantitative metrics and user studies, producing videos with better clarity and coherence.
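As a rough illustration of the occlusion handling listed above, the sketch below builds a lower-face mask from landmark points and then removes any pixels flagged as occluded. It is only a sketch under assumptions: the padded bounding-box shape, the function names, and the `pad` parameter are hypothetical, and the occluder mask is taken as a ready-made input rather than produced by an actual segmentation model.

```python
from typing import List, Sequence, Tuple

Mask = List[List[bool]]  # mask[y][x] is True where the region will be regenerated


def lower_face_mask(
    height: int, width: int, landmarks: Sequence[Tuple[int, int]], pad: int = 0
) -> Mask:
    """Mask the padded bounding box of the given (x, y) lower-face landmarks."""
    if not landmarks:
        raise ValueError("at least one landmark is required")
    xs = [x for x, _ in landmarks]
    ys = [y for _, y in landmarks]
    # Clamp the padded box to the image bounds.
    x0, x1 = max(min(xs) - pad, 0), min(max(xs) + pad, width - 1)
    y0, y1 = max(min(ys) - pad, 0), min(max(ys) + pad, height - 1)
    return [
        [y0 <= y <= y1 and x0 <= x <= x1 for x in range(width)]
        for y in range(height)
    ]


def exclude_occluders(face_mask: Mask, occluder_mask: Mask) -> Mask:
    """Keep a pixel in the mask only if no occluder (hand, object) covers it."""
    return [
        [face and not occ for face, occ in zip(face_row, occ_row)]
        for face_row, occ_row in zip(face_mask, occluder_mask)
    ]
```

Pixels removed from the mask keep their original content, so a hand or microphone in front of the mouth would stay untouched while the visible lip region is regenerated.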

Technical Principles of KeySync

- Two-Stage Generation Framework:
  - Keyframe Generation: Produces a sparse set of keyframes that capture the primary lip movements encoded in the audio, ensuring each frame aligns accurately with the spoken content while preserving identity features.
  - Interpolation Generation: Smoothly interpolates between keyframes to create temporally coherent intermediate frames, resulting in natural lip motion transitions.
- Latent Diffusion Model: Performs denoising in a compressed, low-dimensional latent space to improve computational efficiency. The model gradually transforms random noise into structured video data.
- Masking Strategy: Uses facial landmarks to design a mask that covers the lower face region while preserving contextual information. During inference, this mask is combined with a pretrained video segmentation model (e.g., SAM 2) to automatically detect and remove occlusions, ensuring seamless integration between the lip region and the surrounding content.
- Audio-Video Alignment: Uses the HuBERT audio encoder to convert raw audio into feature representations. These features are injected into the video generation model through attention mechanisms to ensure accurate alignment between lip movements and audio.
- Loss Functions: Combines a latent-space loss with a pixel-space L2 loss to optimize video generation quality and ensure precise lip-audio synchronization.
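The combined objective mentioned in the last item can be sketched as a weighted sum of two terms. The source only states that a latent-space loss and a pixel-space L2 loss are combined, so the mean-squared-error form of the latent term, the `pixel_weight` parameter, and the function names below are assumptions made for illustration.

```python
from typing import Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error (L2 loss) between two equally sized flat vectors."""
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def combined_loss(
    pred_latent: Sequence[float],
    target_latent: Sequence[float],
    pred_pixels: Sequence[float],
    target_pixels: Sequence[float],
    pixel_weight: float = 1.0,  # hypothetical weighting, not taken from the source
) -> float:
    """Latent-space term plus a weighted pixel-space L2 term."""
    latent_term = mse(pred_latent, target_latent)
    pixel_term = mse(pred_pixels, target_pixels)  # computed on decoded frames
    return latent_term + pixel_weight * pixel_term
```

In a setup like this, the latent term would supervise the denoiser directly, while the pixel term would penalize errors that only become visible after decoding, such as blurry teeth or lip edges.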

KeySync Project Links

- Project Website: https://antonibigata.github.io/KeySync/
- GitHub Repository: https://github.com/antonibigata/keysync
- HuggingFace Model Hub: https://huggingface.co/toninio19/keysync
- arXiv Technical Paper: https://arxiv.org/pdf/2505.00497
- Live Demo on HuggingFace Spaces: https://huggingface.co/spaces/toninio19/keysync-demo

Application Scenarios of KeySync

- Automatic Dubbing: Enhances alignment of lip movements and speech in multilingual content such as films and advertisements.
- Virtual Avatars: Generates synchronized lip movements for digital characters, improving realism and user engagement.
- Video Conferencing: Improves lip-sync accuracy in remote communication, enhancing overall experience.
- Accessible Content: Helps individuals with hearing impairments better understand video content.
- Content Restoration: Repairs or replaces lip movements in videos to improve content quality.
