ACE-Step – A foundational music generation model open-sourced by ACE Studio in collaboration with StepFun

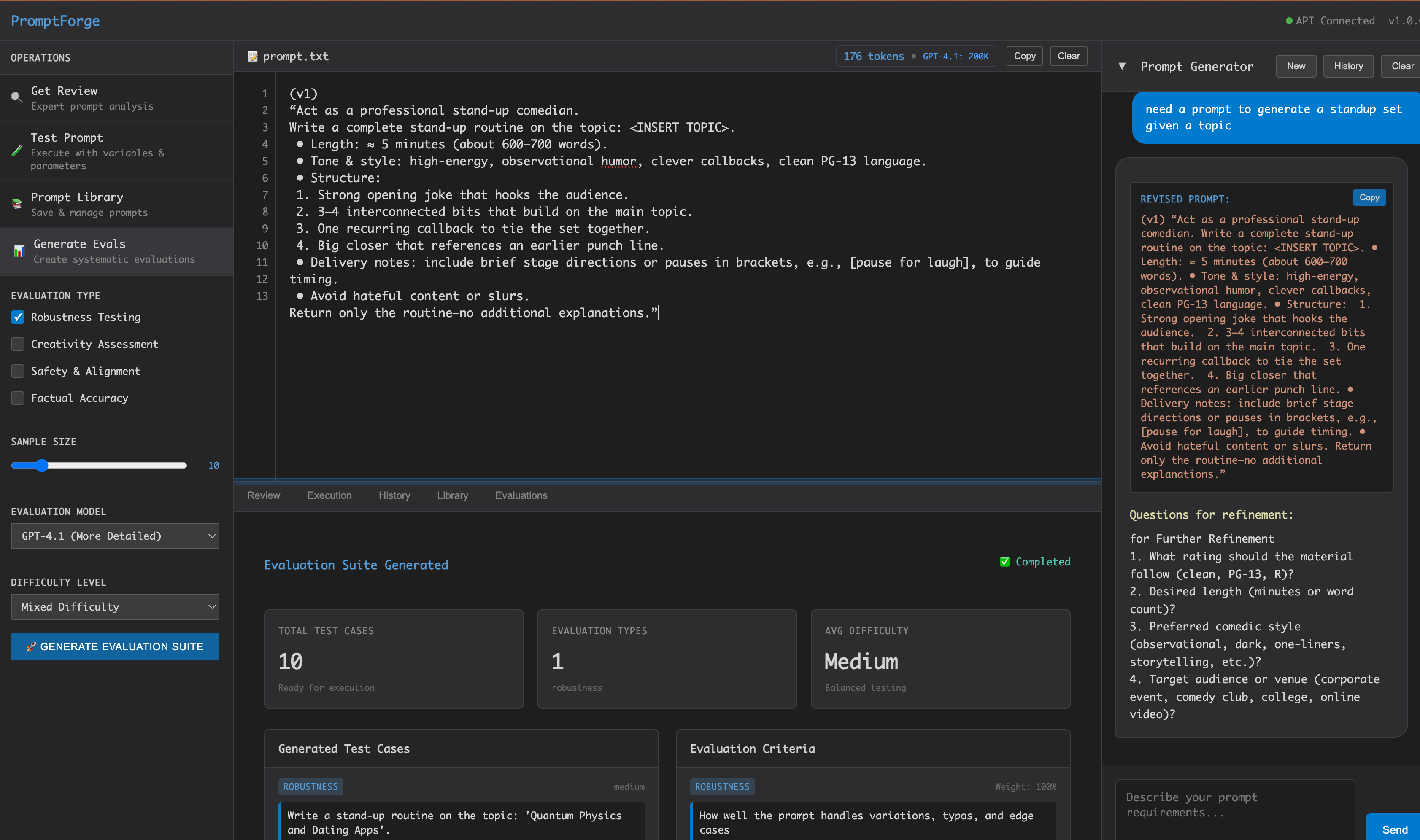

What is ACE-Step?

ACE-Step is an open-source foundational music generation model jointly developed by ACE Studio and StepFun. Its architecture combines diffusion-based generation with a Deep Compression Autoencoder (DCAE) and a lightweight linear transformer, enabling fast, coherent, and controllable music creation. It generates high-quality music up to 15 times faster than LLM-based approaches. ACE-Step supports a wide range of musical styles, languages, and control features, making it a practical tool for musicians, producers, and content creators, and it is designed to serve as a foundation for many downstream music generation tasks.
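
How these components divide the work can be illustrated with a toy inference loop. The Python sketch below assumes a flow-matching sampler (a close relative of diffusion that integrates a learned velocity field from noise to data). `toy_velocity` and `toy_decode` are hypothetical stand-ins for the linear-transformer denoiser and the DCAE decoder, and all sizes and step counts are illustrative, not ACE-Step's real configuration.

```python
import random

# Illustrative sizes only; ACE-Step's real latent shapes and step counts differ.
LATENT_FRAMES = 8    # time steps in the compressed (DCAE-style) latent
LATENT_DIM = 4       # channels per latent frame
UPSAMPLE = 16        # audio samples produced per latent frame by the toy decoder


def toy_velocity(x, t, target):
    """Hypothetical stand-in for the linear-transformer denoiser.

    A real model predicts the velocity from (x, t, text/lyric conditioning).
    This toy returns the exact flow-matching velocity toward a fixed target
    latent, using the straight-line path x_t = (1 - t) * noise + t * data.
    """
    return [[(d - v) / (1.0 - t) for v, d in zip(row, drow)]
            for row, drow in zip(x, target)]


def sample_latent(target, steps=10, seed=0):
    """Euler integration from pure noise (t = 0) to data (t = 1)."""
    rng = random.Random(seed)
    x = [[rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
         for _ in range(LATENT_FRAMES)]
    dt = 1.0 / steps
    for i in range(steps):
        t = i * dt
        vel = toy_velocity(x, t, target)
        x = [[v + dt * dv for v, dv in zip(row, vrow)]
             for row, vrow in zip(x, vel)]
    return x


def toy_decode(latent):
    """Hypothetical decoder: expand each latent frame back to UPSAMPLE samples."""
    audio = []
    for frame in latent:
        value = sum(frame) / len(frame)
        audio.extend([value] * UPSAMPLE)
    return audio


target = [[0.1 * (f + c) for c in range(LATENT_DIM)] for f in range(LATENT_FRAMES)]
latent = sample_latent(target, steps=10)
audio = toy_decode(latent)
print(len(audio))  # 8 frames * 16 samples = 128
```

The iterative loop runs over 8 latent frames rather than 128 output samples. Compressing audio before denoising, as a DCAE does, is one reason this kind of design can be fast.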

Key Features of ACE-Step

- Fast Composition: Generates high-quality music quickly; for example, a 4-minute track can be synthesized in about 20 seconds on an A100 GPU.
- Diverse Styles: Supports a wide range of mainstream genres such as pop, rock, electronic, and jazz, with lyrics in multiple languages.
- Variant Generation: Produces diverse variations of a piece by adjusting the noise mixing ratio.
- Repainting (Inpainting): Selectively regenerates specific segments, for example to change the style, lyrics, or vocals of one passage while preserving the rest of the track.
- Lyric Editing: Modifies part of the lyrics without affecting the melody or instrumental backing.
- Multilingual Support: Supports 19 languages, with the strongest performance in 10 of them, including English, Chinese, Russian, Spanish, and Japanese.
- Lyric2Vocal: Uses LoRA fine-tuning to generate human vocals directly from lyrics.
- Text2Samples: Generates music samples and loops, helping producers quickly create instrument loops, sound effects, and more.
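
Variant generation and repainting both work by controlling noise in the latent space: variants change the starting noise, while repainting restricts which frames the sampler may change. The sketch below is an illustration under assumptions, not ACE-Step's code. The cos/sin mixing is one variance-preserving choice (ACE-Step's exact formula may differ), the repaint step follows the common inpainting trick of resetting unedited frames onto the original's noising path, `mix_noise` and `repaint_blend` are hypothetical names, and each frame is simplified to a single scalar.

```python
import math
import random


def mix_noise(base_noise, fresh_noise, ratio):
    """Hypothetical helper: blend two independent unit-variance Gaussian noises.

    ratio = 0 returns base_noise (same piece); ratio = 1 returns fresh_noise.
    cos/sin weights keep the variance at 1, since cos^2 + sin^2 = 1.
    """
    theta = ratio * math.pi / 2
    c, s = math.cos(theta), math.sin(theta)
    return [c * a + s * b for a, b in zip(base_noise, fresh_noise)]


def repaint_blend(x_t, original, noise, t, start, end):
    """Hypothetical helper: keep frames outside [start, end) on the original's path.

    Frames inside the region come from the sampler (x_t) and may change.
    Frames outside are reset to (1 - t) * noise + t * original, the straight-line
    flow-matching path, so at t = 1 they equal the original exactly.
    """
    out = []
    for i, (xv, ov, nv) in enumerate(zip(x_t, original, noise)):
        if start <= i < end:
            out.append(xv)
        else:
            out.append((1.0 - t) * nv + t * ov)
    return out


rng = random.Random(42)
n = 10_000
base = [rng.gauss(0, 1) for _ in range(n)]
fresh = [rng.gauss(0, 1) for _ in range(n)]
variant = mix_noise(base, fresh, ratio=0.3)
var = sum(v * v for v in variant) / n
print(f"variance after mixing: {var:.3f}")
```

Keeping the mixed noise at unit variance matters because the sampler expects its starting point to look like the noise it was trained on; a plain linear blend `(1 - r) * a + r * b` would shrink the variance for intermediate ratios.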

Technical Principles of ACE-Step

- Diffusion Model: Generates data through stepwise denoising. Conventional diffusion models often struggle with long-range structural coherence, which ACE-Step addresses by pairing the diffusion process with the components below.
- Deep Compression Autoencoder (DCAE): Compresses audio into a compact latent representation and decodes it back, preserving fine-grained acoustic detail while reducing computational cost.
- Lightweight Linear Transformer: Models the musical sequence, keeping melody, harmony, and rhythm coherent over time.
- Semantic Alignment: During training, uses representation alignment (REPA) to align the model's internal representations with semantic features from the pretrained MERT (self-supervised music) and mHuBERT (multilingual speech) encoders, enabling faster convergence and higher generation quality.
- Training Optimization: Semantic alignment and optimized training strategies let ACE-Step balance generation speed and coherence.
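
Two of these ideas can be illustrated in a few lines. The sketch below shows kernelized linear attention, the mechanism behind linear transformers, checked against the equivalent quadratic computation, plus a REPA-style alignment loss. Assumptions: the ReLU feature map (other kernels such as elu+1 are also common), the hypothetical function names, and passing already-projected features to `repa_loss`. This is a generic illustration of the techniques, not ACE-Step's implementation.

```python
import math


def relu(v):
    return [max(0.0, x) for x in v]


def linear_attention(queries, keys, values, eps=1e-6):
    """Kernelized (linear) attention with a ReLU feature map (assumed kernel).

    Instead of an N x N attention matrix, accumulate S = sum_j phi(k_j) v_j^T
    and z = sum_j phi(k_j) once, then output phi(q_i)^T S / (phi(q_i)^T z).
    Cost grows linearly with sequence length N.
    """
    d, dv = len(keys[0]), len(values[0])
    s = [[0.0] * dv for _ in range(d)]
    z = [0.0] * d
    for k, v in zip(keys, values):
        fk = relu(k)
        for a in range(d):
            z[a] += fk[a]
            for b in range(dv):
                s[a][b] += fk[a] * v[b]
    outputs = []
    for q in queries:
        fq = relu(q)
        denom = sum(fq[a] * z[a] for a in range(d)) + eps
        outputs.append([sum(fq[a] * s[a][b] for a in range(d)) / denom
                        for b in range(dv)])
    return outputs


def quadratic_reference(queries, keys, values, eps=1e-6):
    """Same kernel computed the O(N^2) way, for comparison."""
    outputs = []
    for q in queries:
        fq = relu(q)
        weights = [sum(a * b for a, b in zip(fq, relu(k))) for k in keys]
        total = sum(weights) + eps
        outputs.append([sum(w * v[b] for w, v in zip(weights, values)) / total
                        for b in range(len(values[0]))])
    return outputs


def repa_loss(hidden, target):
    """REPA-style loss: 1 - mean per-frame cosine similarity.

    `hidden` stands for (already projected) denoiser features and `target` for
    frozen encoder features, e.g. MERT or mHuBERT embeddings. Minimizing it
    maximizes similarity, matching the REPA objective up to a constant.
    """
    def cos(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        na = math.sqrt(sum(x * x for x in a))
        nb = math.sqrt(sum(y * y for y in b))
        return dot / (na * nb + 1e-8)
    return 1.0 - sum(cos(h, t) for h, t in zip(hidden, target)) / len(hidden)


q = [[1.0, 0.5], [0.2, 2.0], [-1.0, 1.0]]
k = [[0.5, 1.0], [1.5, -0.5], [1.0, 1.0]]
v = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0], [3.0, 1.0, 0.0]]
fast = linear_attention(q, k, v)
slow = quadratic_reference(q, k, v)  # agrees with `fast` up to rounding
```

Because `S` and `z` are built in a single pass over the keys, the cost scales with the number of frames rather than its square, which is one reason linear attention suits long audio sequences.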

ACE-Step Project Links

- Official Website: https://ace-step.github.io/
- GitHub Repository: https://github.com/ace-step/ACE-Step
- HuggingFace Model Hub: https://huggingface.co/ACE-Step/ACE-Step-v1-3.5B
- Online Demo: https://huggingface.co/spaces/ACE-Step/ACE-Step

Application Scenarios of ACE-Step

- Music Creation: Quickly generates songs from style tags and lyrics to inspire new compositions.
- Vocal Generation: Creates vocal audio directly from lyrics, useful for producing vocal demos.
- Music Production: Produces instrumental loops and sound effects to enrich productions.
- Multilingual Composition: Supports music creation in many languages for global audiences.
- Music Education: Serves as a teaching tool that helps learners explore composition and production.
