D – DiT – A multimodal diffusion model launched jointly by Yale University, Byte Seed and other institutions

What is D-DiT?

D-DiT (Dual Diffusion Transformer) is a multimodal diffusion model jointly developed by Carnegie Mellon University, Yale University, and ByteDance Seed Lab. It unifies both image generation and understanding tasks. The model combines continuous image diffusion (via flow matching) with discrete text diffusion (via masked diffusion), and employs a bidirectional attention mechanism to train both image and text modalities simultaneously. D-DiT supports bidirectional tasks such as text-to-image generation and image-to-text generation, enabling applications like visual question answering and image captioning. Built on a multimodal diffusion Transformer architecture with joint diffusion objectives, D-DiT demonstrates multimodal understanding and generation capabilities comparable to autoregressive models, offering a new direction for the development of vision-language models.

Key Features of D-DiT

-

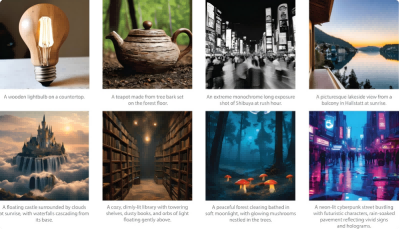

Text-to-Image Generation: Generates high-quality images from input text descriptions.

-

Image-to-Text Generation: Produces descriptive text from input images, including captions, titles, or answers to visual questions.

-

Visual Question Answering: Combines image and question text to generate accurate answers.

-

Multimodal Understanding: Supports various vision-language tasks such as image captioning, visual instruction understanding, and long-text generation.

-

Bidirectional Generation Capability: Enables flexible generation in both directions—text to image and image to text.

Technical Principles of D-DiT

-

Dual-Branch Diffusion Model: D-DiT integrates Continuous Image Diffusion and Discrete Text Diffusion.

-

Continuous Image Diffusion: Uses flow matching to model the reverse diffusion process for image generation.

-

Discrete Text Diffusion: Uses masked diffusion to gradually denoise and generate text.

-

-

Multimodal Transformer Architecture:

-

Image Branch: Processes image data and outputs image diffusion objectives.

-

Text Branch: Processes text data and outputs text diffusion objectives.

-

-

Joint Training Objective: The model is trained on a unified diffusion objective across image and text modalities. The image diffusion loss is optimized using flow matching, while the text diffusion loss is optimized using masked diffusion. This joint training allows the model to learn the joint distribution of images and texts.

-

Bidirectional Attention Mechanism: D-DiT employs a bidirectional attention mechanism to flexibly switch between image and text modalities and process them in an unordered fashion, leveraging information from both modalities to improve multimodal task performance.

Project Links for D-DiT

-

Project Website: https://zijieli-jlee.github.io/dualdiff.github.io/

-

GitHub Repository: https://github.com/zijieli-Jlee/Dual-Diffusion

-

arXiv Paper: https://arxiv.org/pdf/2501.00289

Application Scenarios of D-DiT

-

Text-to-Image Generation: Producing high-quality images from text descriptions for creative design, game development, advertising, and education.

-

Image-to-Text Generation: Generating descriptive texts for images to assist visually impaired users, content recommendation, and smart photo albums.

-

Visual Question Answering: Answering questions based on image input for intelligent assistants, educational tools, and customer support.

-

Multimodal Dialogue Systems: Generating detailed responses using both image and text within conversations, useful in customer service, virtual assistants, and tutoring systems.

-

Image Editing and Enhancement: Repairing, transforming, or enhancing images based on text prompts, applicable in image restoration, style transfer, and enhancement tasks.

Related Posts