What is OmniGen2?

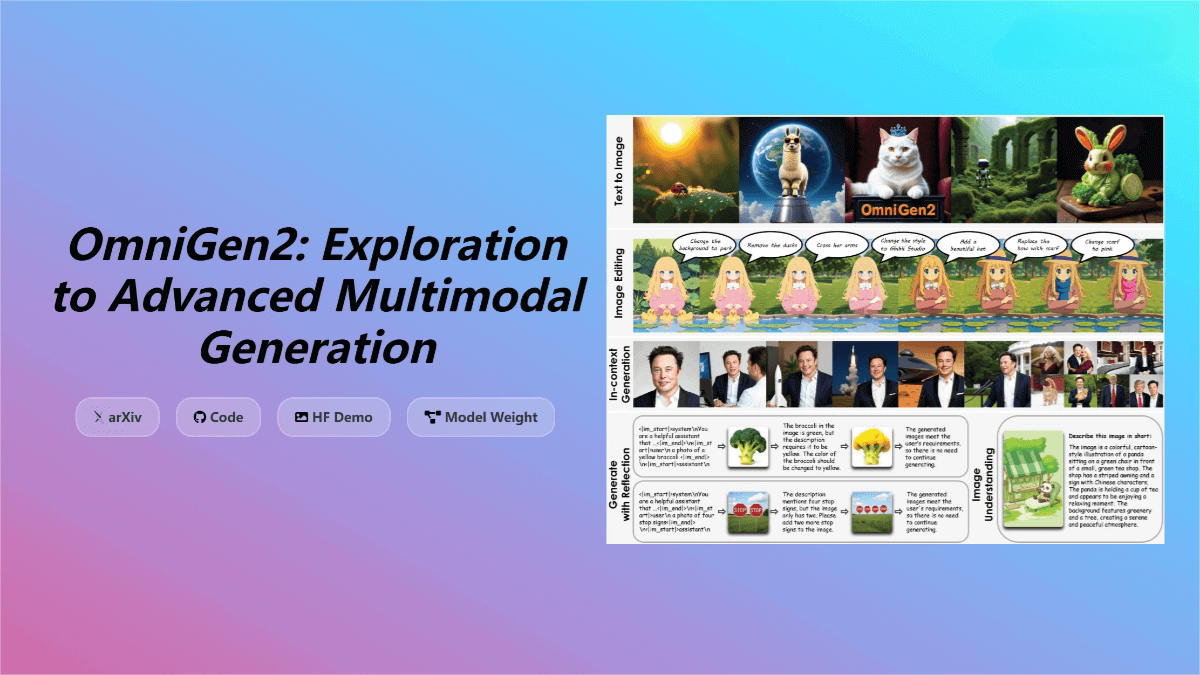

OmniGen2 is an open-source multimodal generation model developed by the Beijing Academy of Artificial Intelligence (BAAI). It can generate high-quality images from text prompts and supports instruction-guided image editing, such as modifying backgrounds or character features. OmniGen2 adopts a dual-component architecture that combines a Vision-Language Model (VLM) with a diffusion model to unify various generation tasks. With advantages including open-source accessibility, high performance, and strong context-aware generation capabilities, it is well-suited for commercial use, creative design, and research and development.

Key Features of OmniGen2

-

Text-to-Image Generation: Generates high-fidelity and visually appealing images from text prompts. It performs excellently on multiple benchmarks, scoring 0.86 on GenEval and 83.57 on DPG-Bench.

-

Instruction-Guided Image Editing: Supports complex, instruction-driven image edits, including localized changes (e.g., altering clothing color) and global style transfers (e.g., transforming a photo into an anime style). OmniGen2 balances editing accuracy with image fidelity across several benchmarks.

-

Context-Aware Generation: Capable of handling and integrating various inputs (e.g., characters, reference objects, and scenes) to create novel and coherent visual outputs. On the OmniContext benchmark, OmniGen2 outperformed existing open-source models by over 15% in visual consistency.

-

Visual Understanding: Built upon Qwen-VL-2.5, inheriting its strong capabilities in image content parsing and analysis.

Technical Foundations of OmniGen2

-

Dual-Path Architecture: OmniGen2 separates the text and image decoding processes. The text generation module is powered by the Qwen2.5-VL-3B multimodal language model (MLLM), while image generation is handled by an independent diffusion Transformer. This separation prevents the text component from negatively impacting image quality.

-

Diffusion Transformer: The image generation module is a 32-layer diffusion Transformer with a hidden dimension of 2520 and approximately 4 billion parameters. It uses a Rectified Flow method for efficient image synthesis.

-

Omni-RoPE Positional Encoding: OmniGen2 introduces a novel multimodal rotary position embedding method (Omni-RoPE) that decomposes position into sequence and modality identifiers, as well as 2D height and width coordinates. This enables precise encoding of positional information within images, supporting spatial localization and identity distinction across multiple images.

-

Reflection Mechanism: A unique reflection mechanism allows the model to self-evaluate its generated images and iteratively refine them across multiple rounds to improve quality and coherence.

-

Training Strategy: OmniGen2 uses a staged training pipeline. It begins with pretraining the diffusion model on text-to-image tasks, proceeds with mixed-task training, and concludes with end-to-end training to develop reflection capabilities.

-

Data Processing: Training data is extracted from videos and undergoes multiple filtering steps, including DINO similarity filtering and VLM consistency checks, to ensure high data quality.

Project Links

-

Official Website: https://vectorspacelab.github.io/OmniGen2/

-

GitHub Repository: https://github.com/VectorSpaceLab/OmniGen2

-

arXiv Paper: https://arxiv.org/pdf/2506.18871

Application Scenarios of OmniGen2

-

Design Concept Generation: Designers can use simple text descriptions to quickly generate conceptual sketches and visual ideas.

-

Storytelling Assistance: Content creators can generate scenes and character images based on plotlines and character descriptions.

-

Video Production Asset Generation: Creators can produce various scenes, character actions, and visual effects for use in animation, VFX, or live-action video editing.

-

Game Scene and Character Creation: Game developers can rapidly generate in-game environments and characters using text prompts.

-

Educational Resource Creation: Educators can generate relevant visuals and diagrams based on lesson content—for example, creating historical battle scenes or portraits of historical figures when teaching history.