FairyGen – An AI framework that generates animated story videos with consistent style and coherent narratives

What is FairyGen?

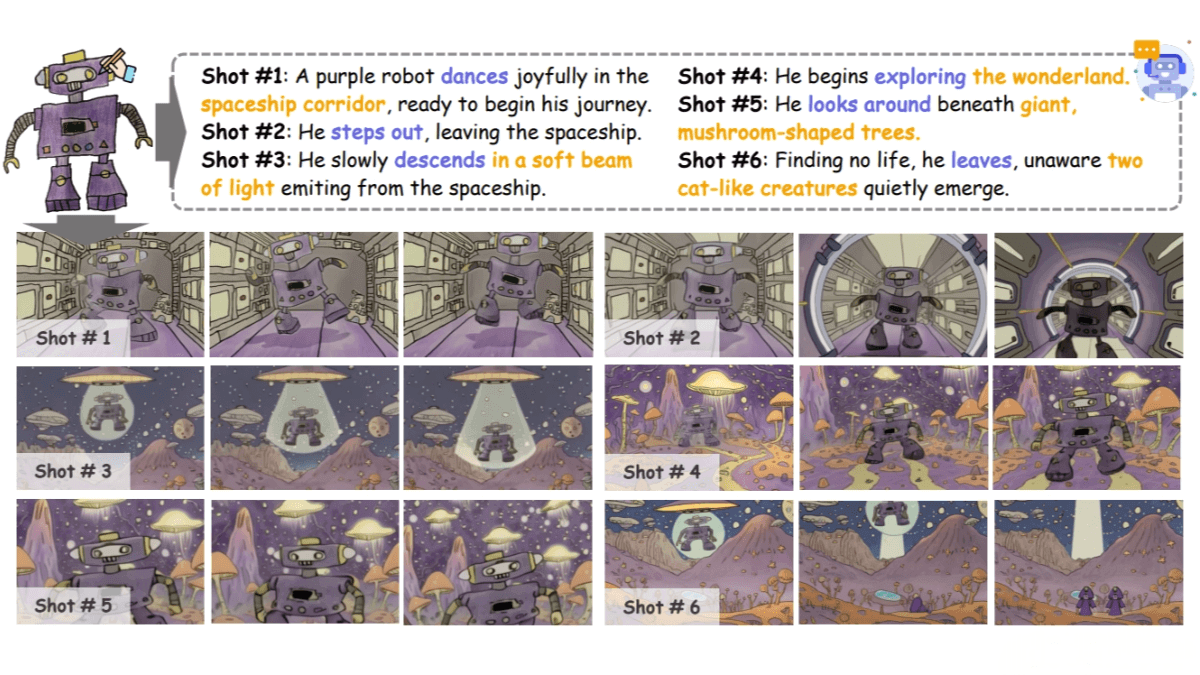

FairyGen is an animated story video generation framework developed at Great Bay University. Starting from a single hand-drawn character sketch, it generates animated videos with a rich narrative and a consistent visual style. A multimodal large language model (MLLM) plans the story, a style propagation adapter applies the character’s visual style to the background, a 3D Agent reconstructs the character and generates realistic motion sequences, and a two-stage motion adapter makes the animation more coherent and natural. The authors report strong results in style consistency, narrative fluency, and motion quality, which makes FairyGen a promising tool for personalized animation.

Key Features of FairyGen

- Animated Story Video Generation: Generates animated story videos with coherent narratives and consistent styles from a single hand-drawn character sketch.
- Style Consistency: Uses a style propagation adapter to apply the hand-drawn character’s visual style to the background, ensuring a unified visual aesthetic.
- Complex Motion Generation: Employs a 3D Agent to reconstruct characters and generate physically realistic motion sequences, supporting complex and natural movement.
- Narrative Coherence: Leverages multimodal large language models (MLLMs) for story planning, generating structured storyboards to maintain logical narrative flow throughout the video.
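The exact storyboard schema FairyGen uses is not described here. As a minimal sketch, assuming the MLLM is prompted to return JSON, a structured storyboard made of a global narrative outline plus shot-level entries could be modeled as follows. The field names (`scene`, `action`, `camera`, `duration_s`) and the function `parse_storyboard` are hypothetical.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Shot:
    """One shot-level storyboard entry (hypothetical fields)."""
    index: int
    scene: str               # background description, later rendered in the character's style
    action: str              # what the character does; drives motion generation
    camera: str = "medium shot"
    duration_s: float = 3.0


@dataclass
class Storyboard:
    """Global narrative outline plus an ordered list of shots."""
    title: str
    outline: str
    shots: list[Shot] = field(default_factory=list)

    def total_duration(self) -> float:
        return sum(s.duration_s for s in self.shots)


def parse_storyboard(raw: str) -> Storyboard:
    """Parse a JSON storyboard, e.g. one returned by an MLLM asked for JSON output."""
    data = json.loads(raw)
    # Shot order in the JSON list defines the shot index.
    shots = [Shot(index=i, **s) for i, s in enumerate(data["shots"])]
    return Storyboard(title=data["title"], outline=data["outline"], shots=shots)


raw = """{
  "title": "The Brave Little Fox",
  "outline": "A fox leaves home, gets lost in the forest, and finds its way back.",
  "shots": [
    {"scene": "sunny meadow", "action": "fox waves goodbye", "duration_s": 2.5},
    {"scene": "dark forest", "action": "fox looks around nervously", "camera": "close-up"}
  ]
}"""
sb = parse_storyboard(raw)
print(len(sb.shots), sb.total_duration())  # 2 5.5
```

Keeping the outline and the shots in one typed object lets every later stage (background rendering, motion generation, video synthesis) read from the same plan, which is what keeps the narrative consistent across shots.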

Technical Principles of FairyGen

- Story Planning: Uses MLLMs to generate structured storyboards from a single hand-drawn character sketch. These consist of a global narrative outline and detailed shot-level storyboards, which lay the foundation for the story.
- Style Propagation: Learns the visual style of the hand-drawn character through a style propagation adapter and applies it to the background, keeping character and scene visually consistent.
- 3D Motion Modeling: Uses a 3D Agent to reconstruct the character and applies techniques such as skeletal binding (rigging) and motion retargeting to produce physically plausible motion sequences, resulting in natural, smooth animation.
- Two-Stage Motion Adapter: Built on an image-to-video diffusion model, this adapter is trained in two stages:
  - Stage 1: Learns the character’s spatial features while eliminating temporal bias.
  - Stage 2: Applies a timestep-shifting strategy to model motion dynamics, ensuring continuous and realistic motion.
- Video Generation and Optimization: Integrates all components into a fine-tuned image-to-video diffusion model that renders diverse, storyboard-aligned scenes and assembles them into a complete animated story video.
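The article does not say how FairyGen’s style propagation and motion adapters are parameterized. A common way to attach a lightweight, trainable adapter to a frozen diffusion model is LoRA (low-rank adaptation), which learns a low-rank update to a frozen weight matrix. The pure-Python sketch below assumes LoRA purely for illustration; `LoRALinear` and its helpers are hypothetical, and the training loop is omitted.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(x * y for x, y in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W (out x in) plus a trainable update (alpha / r) * B @ A."""

    def __init__(self, weight, rank=2, alpha=2.0, seed=0):
        rng = random.Random(seed)
        self.weight = weight                 # frozen pretrained weight, never updated
        out_dim, in_dim = len(weight), len(weight[0])
        self.scale = alpha / rank
        # A is small random (r x in); B is zero (out x r), so the adapter starts as a no-op.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(in_dim)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(out_dim)]

    def forward(self, x):
        base = matvec(self.weight, x)
        delta = matvec(self.B, matvec(self.A, x))   # low-rank path: project to r dims, then back
        return [b + self.scale * d for b, d in zip(base, delta)]

    def merged_weight(self):
        """Fold the adapter into W for inference: W + (alpha / r) * B @ A."""
        ba = matmul(self.B, self.A)
        return [[w + self.scale * d for w, d in zip(wr, dr)]
                for wr, dr in zip(self.weight, ba)]


W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
layer = LoRALinear(W, rank=2, alpha=2.0)
x = [1.0, 2.0, 3.0]
print(layer.forward(x))  # [1.0, 2.0]: B is zero, so output equals the frozen layer's
```

Because `B` starts at zero, attaching the adapter leaves the pretrained model’s behavior unchanged until training updates it, and only the small `A` and `B` matrices need to be stored per character or style.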
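Motion retargeting transfers a motion clip recorded on one skeleton onto another. The simplest form, sketched below as an illustration rather than FairyGen’s actual procedure, copies each joint’s local rotation through a joint-name mapping and scales the root translation by the ratio of hip heights. It assumes both skeletons share the same rest pose and axis conventions; practical retargeting also corrects rest-pose differences and foot sliding. `Frame`, `retarget`, and the joint names are hypothetical.

```python
from dataclasses import dataclass

Quat = tuple[float, float, float, float]  # (w, x, y, z) local joint rotation


@dataclass
class Frame:
    root_pos: tuple[float, float, float]  # root (hip) translation in scene units
    rotations: dict[str, Quat]            # joint name -> local rotation


def retarget(frames: list[Frame], joint_map: dict[str, str],
             src_hip_height: float, dst_hip_height: float) -> list[Frame]:
    """Naively retarget a clip: copy local rotations, scale root motion.

    Assumes source and target share a rest pose (e.g. both T-pose) and axis
    conventions, so local rotations can be reused unchanged.
    """
    scale = dst_hip_height / src_hip_height
    out = []
    for f in frames:
        # Rename mapped joints to target names; unmapped source joints are dropped.
        rots = {dst: f.rotations[src] for src, dst in joint_map.items() if src in f.rotations}
        # A shorter character covers proportionally less ground and sits lower.
        root = tuple(c * scale for c in f.root_pos)
        out.append(Frame(root_pos=root, rotations=rots))
    return out


# Example: a clip from a skeleton with 1.0-unit hips onto a cartoon with 0.5-unit hips.
identity = (1.0, 0.0, 0.0, 0.0)
raised = (0.7071, 0.0, 0.0, 0.7071)  # roughly 90 degrees around z
clip = [
    Frame((0.0, 1.0, 0.0), {"Hips": identity, "LeftArm": identity, "Tail": identity}),
    Frame((0.4, 1.0, 0.0), {"Hips": identity, "LeftArm": raised, "Tail": identity}),
]
joint_map = {"Hips": "hip", "LeftArm": "arm_l"}
result = retarget(clip, joint_map, src_hip_height=1.0, dst_hip_height=0.5)
print(result[1].root_pos)  # (0.2, 0.5, 0.0)
```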
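The exact timestep-shifting rule used in Stage 2 is not given here. One well-known form, used for resolution-dependent shifting in flow-matching models such as Stable Diffusion 3, maps a timestep t in [0, 1] (with 1 meaning pure noise) to t' = s·t / (1 + (s − 1)·t). With s > 1 it biases training toward high-noise timesteps, where coarse layout and large-scale motion are decided. The sketch below assumes this form; `shift_timestep` and `sample_training_timesteps` are hypothetical names.

```python
import random


def shift_timestep(t: float, shift: float) -> float:
    """Map t in [0, 1] (1 = pure noise) toward the noisy end when shift > 1.

    The endpoints are fixed: t = 0 stays 0 and t = 1 stays 1.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    return shift * t / (1.0 + (shift - 1.0) * t)


def sample_training_timesteps(n: int, shift: float, seed: int = 0) -> list[float]:
    """Draw uniform timesteps, then shift them, as a training loop might."""
    rng = random.Random(seed)
    return [shift_timestep(rng.random(), shift) for _ in range(n)]


print(shift_timestep(0.5, 3.0))  # 0.75
ts = sample_training_timesteps(10_000, shift=3.0)
frac = sum(t > 0.5 for t in ts) / len(ts)
print(frac)  # roughly 0.75, versus 0.5 without the shift
```

With s = 3, any uniform draw above 0.25 lands above 0.5 after shifting, so about three quarters of training steps fall in the noisier half of the schedule; s = 1 recovers the unshifted schedule.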

Project Links

- Official Website: https://jayleejia.github.io/FairyGen/
- GitHub Repository: https://github.com/GVCLab/FairyGen
- arXiv Paper: https://arxiv.org/pdf/2506.21272

Use Cases of FairyGen

- Education: Teachers can use FairyGen to transform students’ hand-drawn characters into animated stories, sparking creativity and enhancing writing skills.
- Digital Art Creation: Artists can quickly convert sketches into animated videos, bringing ideas to life efficiently while saving time and cost.
- Mental Health Therapy: Therapists can help patients express emotions and support healing by turning hand-drawn characters into personalized animated stories.
- Children’s Creative Development: Parents and children can co-create animations from children’s drawings, nurturing imagination and strengthening family bonds.
- Advertising and Marketing: Marketers can create personalized animated ads using FairyGen to attract attention and enhance brand engagement.
