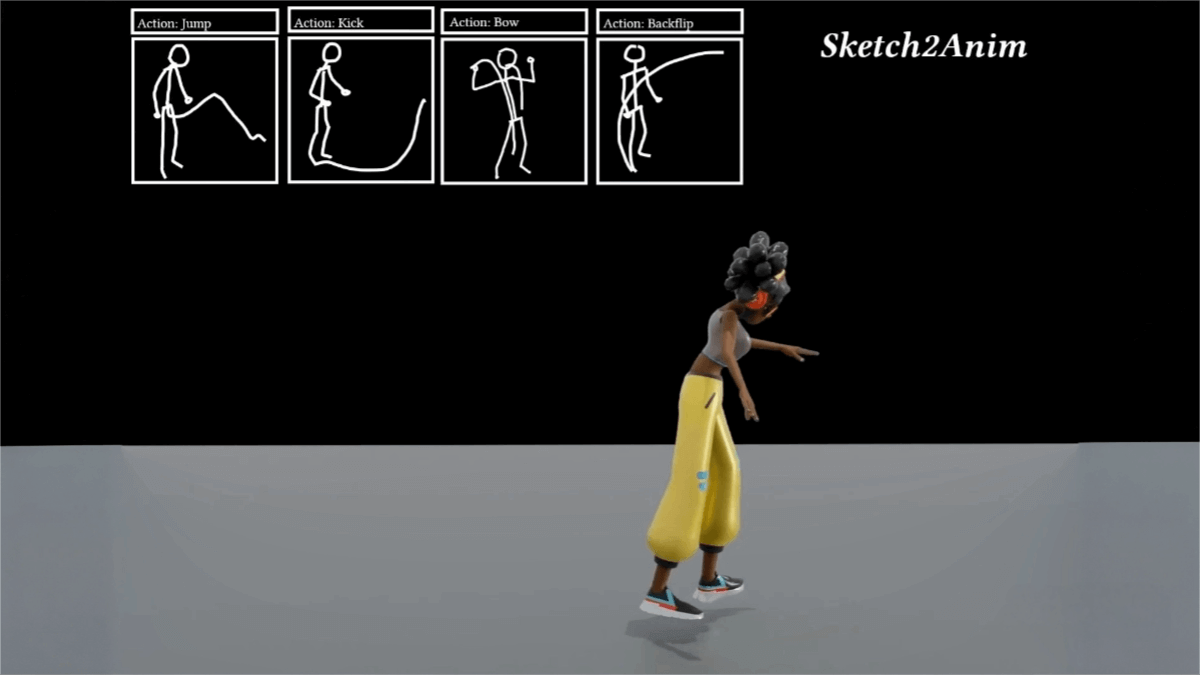

Sketch2Anim – A 2D sketch-to-3D animation framework developed by the University of Edinburgh and collaborators

What is Sketch2Anim?

Sketch2Anim is an automated framework developed by the University of Edinburgh in collaboration with Snap Research and Northeastern University. It converts 2D sketch-based storyboards directly into high-quality 3D animations. It frames the task as conditional motion synthesis, using 3D key poses, joint trajectories, and action words to control animation generation precisely.

The framework consists of two core modules: a multi-conditional motion generator and a 2D-3D neural mapper. Sketch2Anim produces natural, fluid 3D animations and supports interactive editing, making animation production faster and more flexible.

Key Features of Sketch2Anim

- Automated Conversion: Quickly transforms 2D sketch storyboards into 3D animations, reducing the need for tedious manual steps.
- High-Quality Animation: Generates smooth and realistic 3D animations with seamless transitions between segments.
- Interactive Editing: Allows users to adjust generated animations in real time, such as modifying key poses or trajectories, enhancing design flexibility.
- Enhanced Efficiency: The automated workflow significantly boosts production speed and supports rapid design iterations.

Technical Principles Behind Sketch2Anim

Multi-Conditional Motion Generator

Based on a motion diffusion model, this generator incorporates:

- Trajectory ControlNet: Embeds joint trajectories into the motion diffusion model to control global dynamic movement.
- Keypose Adapter: Built on top of the Trajectory ControlNet, it refines local static poses so that the generated animation precisely matches the input key poses.
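The two control paths can be illustrated with the zero-initialization idea that ControlNet is known for: a condition enters through a projection whose weights start at zero, so at the start of training the frozen backbone behaves exactly as before. The sketch below is a toy, stdlib-only illustration under that assumption. `ControlledBlock`, `zero_linear`, and the modelling of the Keypose Adapter as a second zero-initialized residual branch are all hypothetical and not taken from the Sketch2Anim code.

```python
import random

def linear(weights, bias, x):
    """Dense layer y = W x + b, with W stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def zero_linear(dim_out, dim_in):
    """ControlNet-style 'zero' projection: weights and biases start at 0."""
    return [[0.0] * dim_in for _ in range(dim_out)], [0.0] * dim_out

class ControlledBlock:
    """A frozen backbone block plus two hypothetical control branches.

    Each branch maps its condition through a zero-initialized projection
    and adds the result as a residual to the frozen block's output.
    """

    def __init__(self, dim, traj_dim, pose_dim, seed=0):
        rng = random.Random(seed)
        # Random stand-ins for pretrained, frozen diffusion-model weights.
        self.w = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]
        self.b = [0.0] * dim
        self.traj_proj = zero_linear(dim, traj_dim)  # trajectory branch (global dynamics)
        self.pose_proj = zero_linear(dim, pose_dim)  # keypose branch (local statics)

    def forward(self, h, traj=None, pose=None):
        out = linear(self.w, self.b, h)
        if traj is not None:
            delta = linear(*self.traj_proj, traj)
            out = [o + d for o, d in zip(out, delta)]
        if pose is not None:
            delta = linear(*self.pose_proj, pose)
            out = [o + d for o, d in zip(out, delta)]
        return out
```

Because both projections start at zero, `block.forward(h)` and `block.forward(h, traj=..., pose=...)` return the same values until training moves the branch weights away from zero. This is why the control signal can be added without disturbing what the pretrained model already generates.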

2D-3D Neural Mapper

This module includes encoders for both 2D and 3D key poses and trajectories. It aligns 2D sketches and 3D motion constraints within a shared embedding space, enabling direct 2D-to-3D animation control.
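The article does not say which objective aligns the 2D and 3D encoders. A common choice for placing two modalities in one embedding space is a symmetric contrastive (InfoNCE, CLIP-style) loss, sketched below as an assumption rather than as the paper's actual loss. The encoders themselves are omitted: `emb_2d[i]` and `emb_3d[i]` are hypothetical embeddings of the same key pose or trajectory seen as a 2D sketch and as 3D data.

```python
import math

def normalize(v):
    """Scale a vector to unit length (zero vectors are left unchanged)."""
    n = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / n for x in v]

def cosine_matrix(a, b):
    """Pairwise cosine similarities between two lists of vectors."""
    a = [normalize(v) for v in a]
    b = [normalize(v) for v in b]
    return [[sum(x * y for x, y in zip(u, v)) for v in b] for u in a]

def cross_entropy_diag(logits):
    """Mean cross-entropy where the correct column for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum - row[i]
    return total / len(logits)

def symmetric_info_nce(emb_2d, emb_3d, temperature=0.1):
    """Pull matching 2D/3D pairs together and push mismatched pairs apart."""
    sims = cosine_matrix(emb_2d, emb_3d)
    logits = [[s / temperature for s in row] for row in sims]  # 2D -> 3D
    logits_t = [list(col) for col in zip(*logits)]             # 3D -> 2D
    return 0.5 * (cross_entropy_diag(logits) + cross_entropy_diag(logits_t))
```

Minimizing this loss makes each 2D sketch embedding land closest to the embedding of its own 3D counterpart. Once trained, a 2D encoder can therefore stand in for the 3D one when conditioning the motion generator.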

Motion Fusion and Optimization

This stage uses a deterministic DDIM reverse process with guided denoising to ensure smooth transitions between animation segments, producing complete, coherent animations. The framework further refines the results with classifier-free guidance and second-order optimization so that they better fit the input 2D conditions.
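For reference, here are the two standard formulas this paragraph relies on. The first is classifier-free guidance, which mixes conditional and unconditional noise predictions as ε = ε_u + w(ε_c − ε_u). The second is the deterministic (η = 0) DDIM update, which first estimates the clean sample and then re-noises it to the previous level without drawing fresh noise. The sketch operates on plain lists. The noise predictions would come from the motion diffusion model, which is not shown, and how Sketch2Anim schedules these steps to stitch segments together is not covered here.

```python
import math

def cfg(eps_uncond, eps_cond, w):
    """Classifier-free guidance: eps = eps_u + w * (eps_c - eps_u)."""
    return [u + w * (c - u) for u, c in zip(eps_uncond, eps_cond)]

def ddim_step(x_t, eps, alpha_bar_t, alpha_bar_prev):
    """Deterministic DDIM update (eta = 0) from step t to the previous step."""
    # Predict the clean sample x0 from x_t and the (guided) noise estimate.
    x0 = [(x - math.sqrt(1 - alpha_bar_t) * e) / math.sqrt(alpha_bar_t)
          for x, e in zip(x_t, eps)]
    # Re-noise x0 to the previous noise level, reusing eps (no random sampling).
    return [math.sqrt(alpha_bar_prev) * a + math.sqrt(1 - alpha_bar_prev) * e
            for a, e in zip(x0, eps)]
```

Because no random noise is drawn, the same starting latent and conditions always produce the same motion. This determinism is what makes DDIM convenient when neighbouring segments need to line up reproducibly.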

Data Processing and Augmentation

The system is trained on the HumanML3D dataset, which contains 14,616 motions and 44,970 textual descriptions. Techniques such as camera view augmentation, joint perturbation, and body proportion adjustment generate diverse 2D key poses and trajectories, which improve the model's generalization.
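The three augmentations can be pictured as a small pipeline that turns one 3D pose into a randomized 2D key pose. The sketch below is a hypothetical, stdlib-only illustration. The per-bone scale range, the rotation about the vertical axis, the pinhole camera at `(0, 0, -cam_dist)`, and the Gaussian jitter strength are all assumptions, not Sketch2Anim's actual settings.

```python
import math
import random

def rotate_y(point, angle):
    """Rotate a 3D point (x, y, z) about the vertical y-axis."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)

def project(point, focal=1.0, cam_dist=4.0):
    """Pinhole projection; the camera sits at (0, 0, -cam_dist) looking along +z."""
    x, y, z = point
    depth = z + cam_dist
    return (focal * x / depth, focal * y / depth)

def augment_pose(joints, parents, rng, max_angle=math.pi, noise_std=0.01,
                 scale_range=(0.9, 1.1), focal=1.0, cam_dist=4.0):
    """Turn one 3D pose into a randomized 2D key pose.

    joints:  list of (x, y, z) tuples; parents[i] is the parent index of
             joint i (-1 for the root) and must be smaller than i.
    """
    # 1. Body proportions: rescale each bone, rebuilding positions root-to-leaf.
    scaled = []
    for i, p in enumerate(joints):
        j = parents[i]
        if j < 0:
            scaled.append(tuple(p))
            continue
        k = rng.uniform(*scale_range)
        scaled.append(tuple(pn + k * (c - po)
                            for pn, c, po in zip(scaled[j], p, joints[j])))
    # 2. Camera view: rotate the whole body to a random viewing angle.
    angle = rng.uniform(-max_angle, max_angle)
    rotated = [rotate_y(p, angle) for p in scaled]
    # 3. Project to 2D, then jitter each joint to mimic imprecise sketching.
    return [tuple(c + rng.gauss(0.0, noise_std) for c in project(p, focal, cam_dist))
            for p in rotated]

# Usage: a three-joint chain (root, spine, arm) augmented with a fixed seed.
skeleton = [(0.0, 0.0, 0.0), (0.0, 0.5, 0.0), (0.3, 0.5, 0.1)]
parents = [-1, 0, 1]
pose_2d = augment_pose(skeleton, parents, random.Random(7))
```

Running the same 3D motion through many random seeds yields many plausible "sketches" of it, which is the intent of augmentation: the 2D encoders see varied viewpoints, proportions, and drawing noise instead of one clean projection.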

Project Resources

- Project Website: https://zhongleilz.github.io/Sketch2Anim/
- arXiv Technical Paper: https://arxiv.org/pdf/2504.19189

Application Scenarios

- Film and Animation: Used in the early previsualization stages of film production to improve workflow efficiency.
- Game Development: Accelerates the design of character movements and narrative cutscenes.
- Advertising and Marketing: Converts creative sketches into eye-catching 3D animated ads to attract audiences.
- Education and Training: Helps students better understand complex concepts through animated visualizations.
- VR/AR Applications: Instantly transforms 2D sketches into immersive 3D animations, enriching virtual environments.
