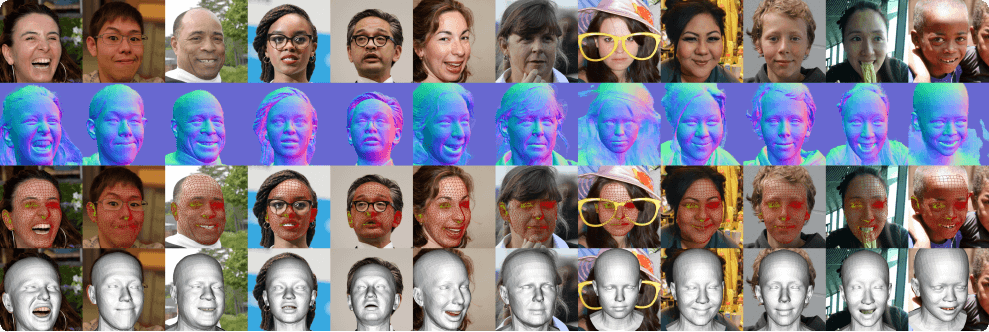

Pixel3DMM – A 3D Face Reconstruction Framework Introduced by Universities in Munich and London

What is Pixel3DMM?

Pixel3DMM is a single-image 3D face reconstruction framework developed jointly by the Technical University of Munich, University College London, and Synthesia. Based on the DINO foundation model, it introduces a dedicated prediction head to accurately reconstruct the 3D geometry of a human face from a single RGB image. Pixel3DMM achieves outstanding performance across multiple benchmarks, significantly outperforming existing methods in handling complex facial expressions and poses. It also introduces new benchmark datasets that cover diverse facial expressions, viewpoints, and ethnicities, providing a new standard for evaluation in the field.

Key Features of Pixel3DMM

-

High-Precision 3D Face Reconstruction: Accurately reconstructs 3D facial geometry—including shape, expression, and pose—from a single RGB image.

-

Handling of Complex Expressions and Poses: Excels at reconstructing high-quality 3D face models from images with complex expressions and non-frontal viewpoints.

-

Disentanglement of Identity and Expression: Capable of recovering neutral facial geometry from expressive images, effectively separating identity features from expression features.

Technical Foundations of Pixel3DMM

-

Pre-trained Vision Transformer: Uses DINOv2 as the backbone network to extract features from the input image. DINOv2 is a powerful self-supervised learning model that captures rich semantic features, forming a strong foundation for subsequent geometry prediction.

-

Prediction Head: Built on top of the DINOv2 backbone, additional transformer blocks and up-convolution layers are added to upscale the feature maps to the required resolution, ultimately predicting geometric cues such as surface normals and UV coordinates. These cues serve as crucial constraints for optimizing the 3D face model.

-

FLAME Model Fitting: Utilizes the predicted surface normals and UV coordinates as optimization targets to fit the parameters of the FLAME model. FLAME is a parametric 3D face model that represents identity, expression, and pose. By minimizing the difference between the predicted geometric cues and the rendered output of the FLAME model, it achieves high-accuracy 3D face reconstruction.

-

Optimization Strategy: During inference, FLAME model parameters are optimized to minimize the discrepancy between predicted geometric cues and the rendered results of the FLAME model.

-

Data Preparation and Training: The model is trained on multiple high-quality 3D face datasets, such as NPHM, FaceScape, and Ava256. These datasets are aligned to the FLAME model’s topology via non-rigid registration and cover diverse identities, expressions, viewpoints, and lighting conditions to ensure strong generalization.

Project Links for Pixel3DMM

-

Official Website: https://simongiebenhain.github.io/pixel3dmm/

-

arXiv Paper: https://arxiv.org/pdf/2505.00615

Application Scenarios of Pixel3DMM

-

Film and Gaming: Enables rapid generation of high-quality 3D face models, improving facial animation and expression capture while reducing costs.

-

VR/AR: Facilitates the creation of realistic virtual avatars, enhancing immersion and interactive realism.

-

Social Media & Video: Powers virtual backgrounds and effects, enhances visual quality, and improves expression recognition and interaction.

-

Medical & Aesthetic Applications: Assists in facial surgery planning and offers previews of virtual makeup and cosmetic effects.

-

Academic Research: Introduces new methods and benchmarks to advance the development of 3D face reconstruction technologies.

Related Posts