MiniMax-M1 – The latest open-source reasoning model from MiniMax

What is MiniMax-M1?

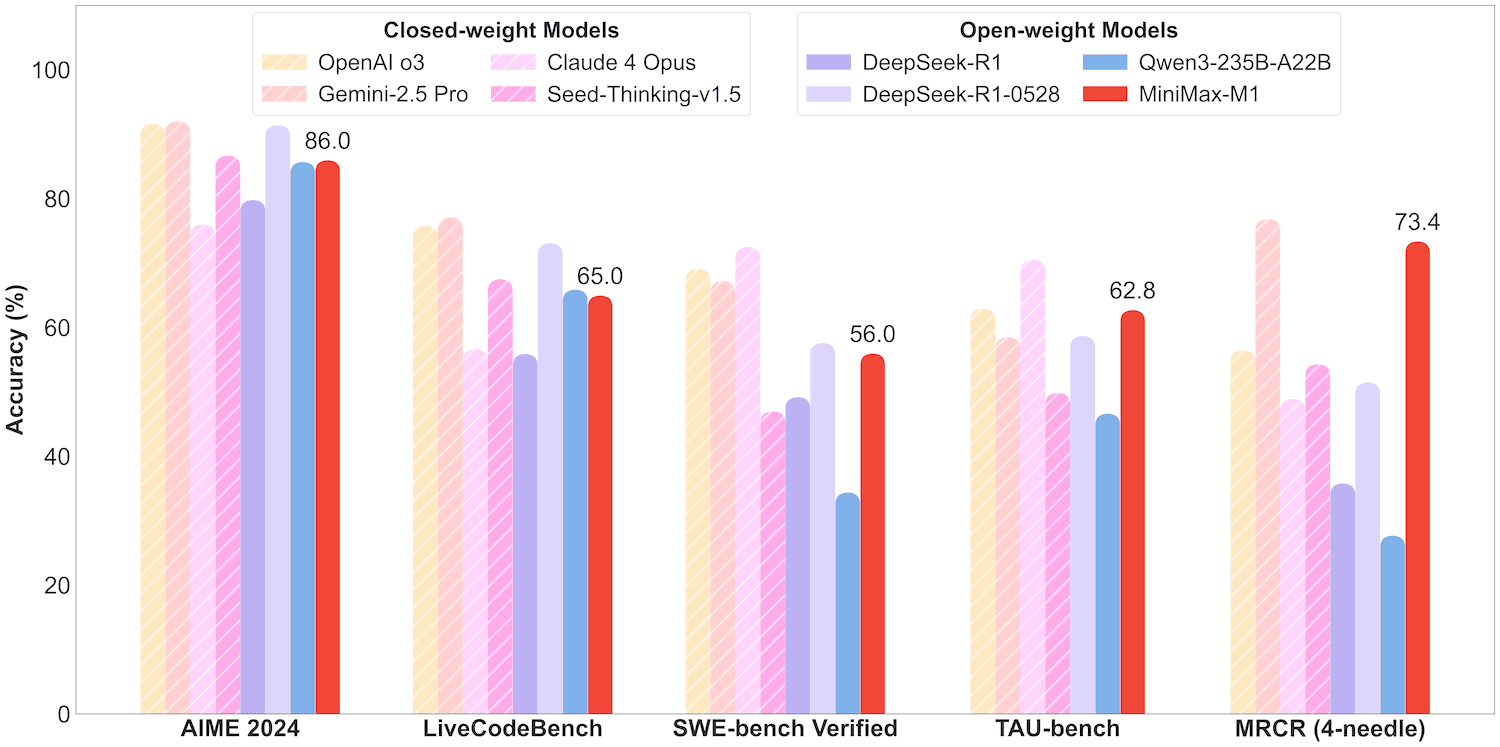

MiniMax-M1 is the latest open-source reasoning model released by the MiniMax team. It combines a hybrid Mixture-of-Experts (MoE) architecture with a lightning attention mechanism. The model has 456 billion parameters in total, of which 45.9 billion (roughly 10%) are activated per token. According to MiniMax, it surpasses Chinese closed-source models and approaches the performance of leading international models while remaining highly cost-effective.

MiniMax-M1 natively supports a context length of 1 million tokens and comes in two versions with thinking budgets of 40K and 80K tokens, making it suitable for long-input and complex reasoning tasks. In benchmark evaluations, MiniMax-M1 outperforms other open-source models, including DeepSeek's, on several metrics and excels in complex software engineering, long-context understanding, and tool usage tasks. Its computational efficiency and strong reasoning abilities make it a solid foundation for next-generation language agents.

Key Features of MiniMax-M1

- Long-context processing: Supports inputs of up to 1 million tokens and outputs of up to 80,000 tokens, which suits lengthy documents and complex reasoning.
- Efficient inference: Offers versions with 40K and 80K token thinking budgets, so users can trade reasoning depth against compute usage and inference cost.
- Optimized for diverse domains: Excels in math reasoning, software engineering, long-context comprehension, and tool usage, supporting a wide range of applications.
- Function calling: Supports structured function calls. The model recognizes when an external function is needed and outputs its parameters in a structured form, which makes interaction with external tools straightforward.
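
To make the function-calling feature concrete, here is a minimal sketch of how an application might handle a structured call emitted by a model. The tool description follows the JSON-Schema style common to many chat APIs; the exact format MiniMax-M1 expects is not given in this article, so the schema, the `get_weather` tool, and the `dispatch` helper are all hypothetical.

```python
import json

# Hypothetical tool description in the JSON-Schema style used by many chat APIs.
# The exact schema MiniMax-M1 expects is an assumption here.
TOOLS = [{
    "name": "get_weather",
    "description": "Return the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}]

def get_weather(city: str) -> str:
    # Stand-in implementation; a real tool would query a weather service.
    return f"Sunny in {city}"

REGISTRY = {"get_weather": get_weather}

def dispatch(model_output: str) -> str:
    """Parse a model-emitted function call and run the matching local function."""
    call = json.loads(model_output)
    name, args = call["name"], call["arguments"]
    if name not in REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    spec = next(t for t in TOOLS if t["name"] == name)
    missing = [p for p in spec["parameters"]["required"] if p not in args]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    return REGISTRY[name](**args)

# Illustrative example of the structured output a model might emit.
example_call = '{"name": "get_weather", "arguments": {"city": "Shanghai"}}'
print(dispatch(example_call))  # prints "Sunny in Shanghai"
```

Validating the required parameters before calling the function keeps a malformed model output from reaching the external tool.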

Technical Foundations of MiniMax-M1

- Mixture of Experts (MoE): The model's feed-forward capacity is split into expert modules. A router dynamically sends each token to a small subset of experts based on the token's representation, so only a fraction of the parameters is active for any given token. This enables efficient parallelism and resource utilization while keeping quality high at a very large total parameter count.
- Lightning Attention: An I/O-aware implementation of linear attention whose cost grows roughly linearly with sequence length, rather than quadratically as in standard softmax attention. M1 interleaves lightning attention blocks with periodic softmax attention blocks (the hybrid attention design), which significantly reduces computation overhead and supports efficient processing of sequences up to 1 million tokens.
- Large-scale Reinforcement Learning (RL): MiniMax-M1 is trained using large-scale RL with a new algorithm called CISPO (Clipped IS-weight Policy Optimization, where IS stands for importance sampling). Rather than clipping token updates as PPO-style methods do, CISPO clips the importance sampling weights, so every token keeps contributing to the gradient, which improves training efficiency and model performance. The hybrid attention architecture also makes RL more efficient and helps address the challenges of scaling RL on MoE models.
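
The dynamic routing described for the Mixture of Experts can be sketched with a toy top-k router. This is a generic illustration, not MiniMax's implementation: the four scalar "experts", the router scores, and the choice of `k=2` are assumptions made only for the example.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def route_top_k(router_logits, k=2):
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))

# Toy experts operating on a scalar; real experts are feed-forward networks.
experts = [lambda x: x + 1.0, lambda x: 2.0 * x, lambda x: -x, lambda x: x * x]

# Hypothetical router scores for one token: experts 1 and 2 score highest.
logits = [0.0, 2.0, 1.0, -1.0]
print(route_top_k(logits))
print(moe_forward(3.0, experts, logits))
```

Because only the selected experts are evaluated, compute per token depends on `k` rather than on the total number of experts, which is how a model can hold far more parameters than it activates for each token.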

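The idea of clipping importance weights in CISPO can be contrasted with PPO-style clipping in a few lines. The sketch below computes only the scalar coefficient that multiplies the gradient of a token's log-probability; it assumes the clipped ratio is treated as a constant weight, and the clipping bounds (`eps`, `eps_low`, `eps_high`) are placeholder values, not the settings used to train MiniMax-M1.

```python
def clip(x, lo, hi):
    return max(lo, min(hi, x))

def ppo_grad_coef(ratio, adv, eps=0.2):
    """Coefficient on grad(log pi) under the PPO objective min(r*A, clip(r)*A)."""
    unclipped = ratio * adv
    clipped = clip(ratio, 1 - eps, 1 + eps) * adv
    # If the clipped branch is strictly smaller, the objective no longer
    # depends on the policy for this token, so its gradient is zero.
    return unclipped if unclipped <= clipped else 0.0

def cispo_grad_coef(ratio, adv, eps_low=0.2, eps_high=0.2):
    """Coefficient on grad(log pi) when the clipped ratio is a constant weight."""
    return clip(ratio, 1 - eps_low, 1 + eps_high) * adv

# A token whose probability rose sharply (ratio 1.5) with positive advantage:
print(ppo_grad_coef(1.5, 1.0))    # 0.0, the token is dropped from the update
print(cispo_grad_coef(1.5, 1.0))  # the token still contributes, with a bounded weight
```

In this toy comparison the PPO-style rule discards the token entirely once its ratio leaves the trust region, while the weight-clipping rule keeps it in the update with a capped weight.
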
Performance Highlights

- Software Engineering Tasks: On the SWE-bench Verified benchmark, MiniMax-M1-40k and MiniMax-M1-80k scored 55.6% and 56.0% respectively, slightly below DeepSeek-R1-0528 (57.6%) but significantly ahead of other open-source models.
- Long-context Understanding: With a context window of up to 1 million tokens, the M1 series performs strongly on long-context tasks, surpassing all open-source models and even outperforming OpenAI o3 and Claude 4 Opus. Overall, it ranks second, trailing only Gemini 2.5 Pro by a small margin.
- Tool Use and Agent Scenarios: On TAU-bench, which evaluates tool-augmented tasks, MiniMax-M1-40k leads all open-source models and also outperforms Gemini 2.5 Pro.

Project Links for MiniMax-M1

- GitHub Repository: https://github.com/MiniMax-AI/MiniMax-M1
- Hugging Face Collection: https://huggingface.co/collections/MiniMaxAI/minimax-m1
- Technical Report: https://github.com/MiniMax-AI/MiniMax-M1/blob/main/MiniMax_M1_tech_report

Pricing for MiniMax-M1 API

Inference Pricing by Input Length:

- 0–32K tokens:
  - Input: ¥0.8 / million tokens
  - Output: ¥8 / million tokens
- 32K–128K tokens:
  - Input: ¥1.2 / million tokens
  - Output: ¥16 / million tokens
- 128K–1M tokens:
  - Input: ¥2.4 / million tokens
  - Output: ¥24 / million tokens

App & Web Access: Unlimited free usage available on the MiniMax App and Web platforms.
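
The tiered API prices above can be turned into a rough per-request cost estimate. The sketch below makes several assumptions that the price list does not settle: the tier is chosen by the input length and applies to both input and output tokens, "K" means 1,000 tokens, and each tier's upper bound is inclusive. The function name `estimate_cost_cny` is hypothetical.

```python
# (upper bound on input tokens, input price, output price), prices in CNY per million tokens.
# Boundaries assume K = 1,000 and inclusive upper bounds; both are assumptions.
TIERS = [
    (32_000, 0.8, 8.0),
    (128_000, 1.2, 16.0),
    (1_000_000, 2.4, 24.0),
]

def estimate_cost_cny(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request, selecting the tier by input length."""
    for upper, in_price, out_price in TIERS:
        if input_tokens <= upper:
            return (input_tokens * in_price + output_tokens * out_price) / 1_000_000
    raise ValueError("input exceeds the 1M-token context window")

# 100,000 input tokens fall in the 32K–128K tier: 0.12 (input) + 0.16 (output).
print(f"¥{estimate_cost_cny(100_000, 10_000):.2f}")  # prints "¥0.28"
```

Note how output tokens dominate the bill in every tier, since the output price is ten or more times the input price.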

Application Scenarios for MiniMax-M1

- Complex Software Engineering: Enables code generation, optimization, debugging, and documentation, helping developers build functional modules efficiently.
- Long-text Processing: Capable of generating long-form content such as reports, academic papers, and novels; supports long-text analysis and multi-document summarization.
- Mathematics & Logical Reasoning: Solves complex math problems (e.g., competition-level questions, mathematical modeling) and handles logic-based tasks with structured solutions.
- Tool Usage & Interaction: Acts as an intelligent assistant that invokes external tools, completes multi-step tasks, and delivers automated solutions, boosting productivity.
