VRAG-RL – A Multimodal RAG Reasoning Framework by Alibaba Tongyi

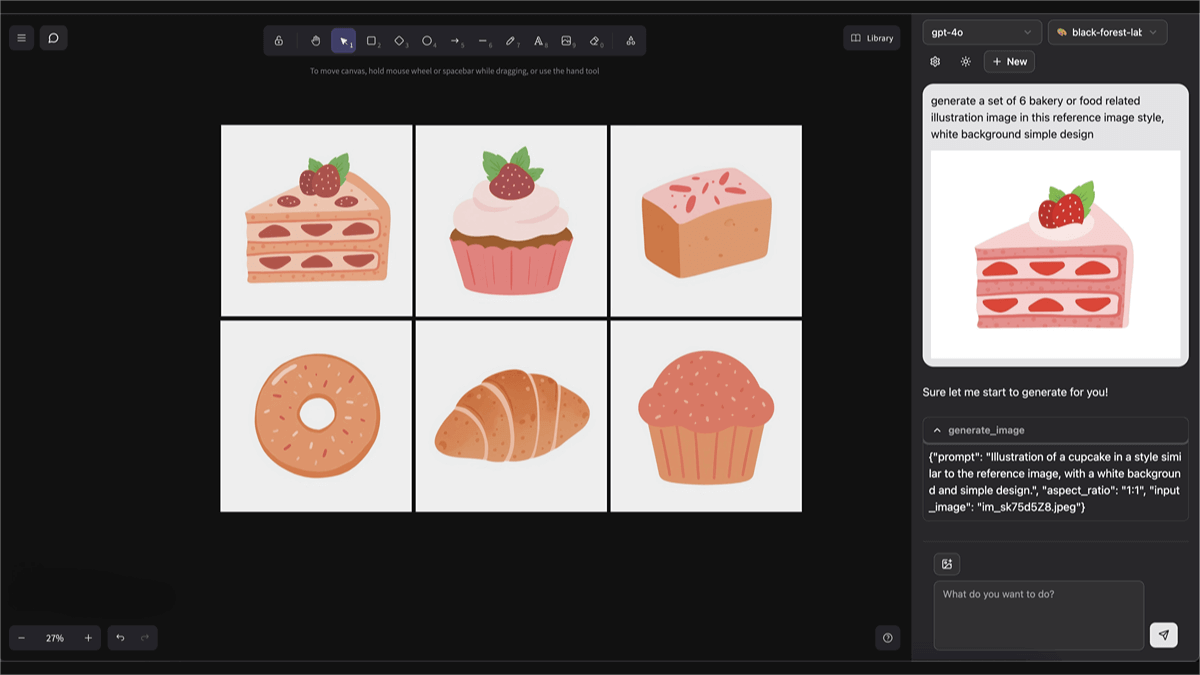

What is VRAG-RL?

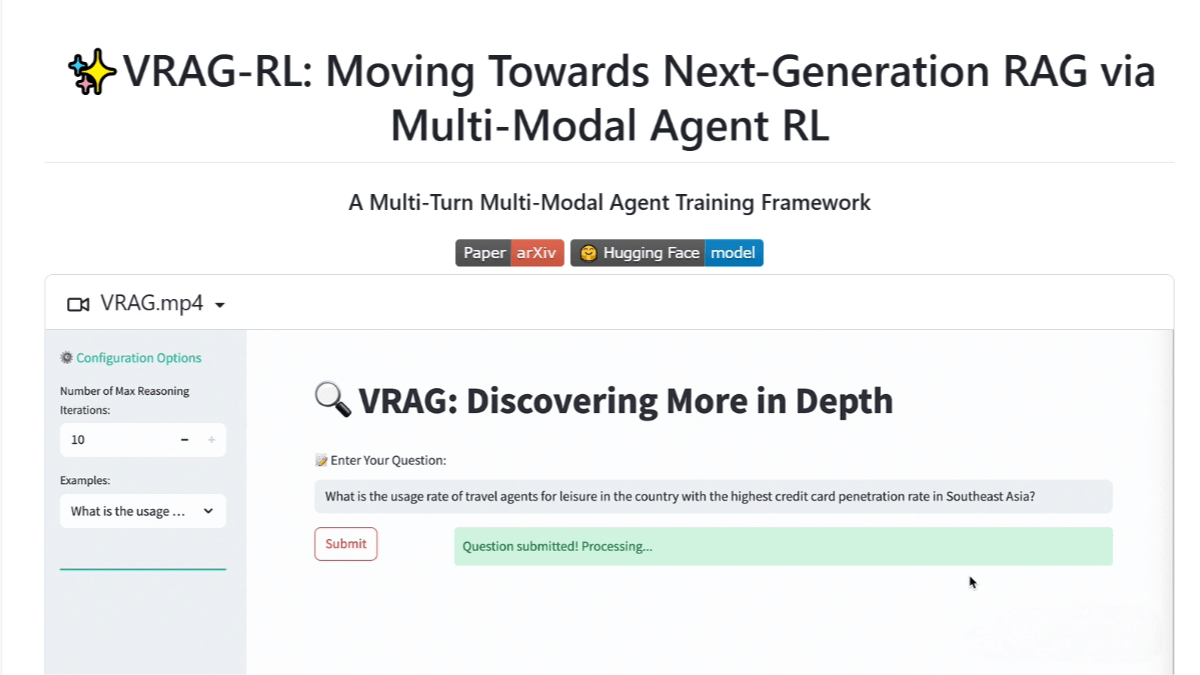

VRAG-RL is a vision-perception-driven multimodal RAG (Retrieval-Augmented Generation) reasoning framework developed by Alibaba’s Tongyi large model team. It focuses on enhancing the retrieval, reasoning, and understanding capabilities of Vision-Language Models (VLMs) when processing visually rich information. By defining a visual perception action space, the framework enables the model to progressively acquire information from coarse to fine granularity, effectively activating its reasoning ability. VRAG-RL introduces a composite reward mechanism that combines retrieval efficiency with model-based outcome rewards to optimize both retrieval and generation performance. In multiple benchmark evaluations, VRAG-RL significantly outperforms existing methods, demonstrating strong potential in the understanding of visually rich information.

Key Features of VRAG-RL

-

Vision-Enhanced Perception:

By defining a visual perception action space (such as cropping and zooming), the model can progressively acquire information from coarse to fine granularity, focusing more effectively on information-dense regions and enhancing its reasoning ability. -

Multi-Turn Interactive Reasoning:

Supports multi-turn interactions, enabling the model to continually interact with search engines and iteratively refine its reasoning process. -

Composite Reward Mechanism:

Combines retrieval efficiency and model-based outcome rewards to holistically guide the model’s optimization for reasoning and retrieval, making it more aligned with real-world application scenarios. -

Scalability:

The framework is highly scalable, supporting the integration of various tools and models, allowing for customization and easy expansion by users.

Technical Principles of VRAG-RL

-

Visual Perception Action Space:

A set of visual perception actions is defined, including selecting regions of interest, cropping, and zooming. These actions allow the model to progressively focus on finer details, enabling it to concentrate more effectively on relevant visual areas. -

Reinforcement Learning Framework:

VRAG-RL uses reinforcement learning (RL) to optimize the model’s reasoning and retrieval capabilities. Through interaction with search engines, the model autonomously samples single- or multi-turn reasoning trajectories and improves itself through iterative training. -

Composite Reward Mechanism:

A reward function is designed that includes retrieval efficiency rewards, pattern consistency rewards, and model-based outcome rewards. This mechanism focuses on optimizing the retrieval process based on final performance, allowing the model to more effectively obtain relevant information. -

Multi-Turn Interactive Training:

With a multi-turn interaction training strategy, the model is gradually optimized through ongoing interaction with external environments, improving reasoning stability and consistency. -

Data Expansion and Pretraining:

Training data is expanded through a multi-expert sampling strategy, ensuring that the model learns effective visual perception and reasoning capabilities during the pretraining phase.

Project Resources for VRAG-RL

-

GitHub Repository: https://github.com/Alibaba-NLP/VRAG

-

HuggingFace Model Collection: https://huggingface.co/collections/autumncc/vrag-rl

-

arXiv Technical Paper: https://arxiv.org/pdf/2505.22019

Application Scenarios for VRAG-RL

-

Intelligent Document QA:

Quickly retrieve and understand information from documents like PPTs and reports to efficiently answer questions. -

Visual Information Retrieval:

Rapidly locate and extract relevant visual information from large volumes of charts and images. -

Multimodal Content Generation:

Generate rich reports, summaries, and other content that combine visual and textual data. -

Education and Training:

Assist in teaching by helping students better understand and analyze visual materials. -

Smart Customer Service and Virtual Assistants:

Accurately respond to user queries involving visual content.

Related Posts