Mercury – A diffusion language model developed by Inception Labs

What is Mercury?

Mercury is a commercial-grade diffusion-based large language model (LLM) developed by Inception Labs and tailored for chat applications. It uses a “coarse-to-fine” generation process that produces multiple tokens in parallel, which improves text generation speed and inference efficiency compared with traditional autoregressive models. Mercury is well suited to programming tasks and real-time voice interaction. A specialized version, Mercury Coder, has been released for coding applications; it is available through a public API and a free online demo that developers and researchers can use for testing.

Key Features of Mercury

- Fast Text Generation: Generates text at extremely high speeds, making it ideal for applications requiring quick responses, such as chatbots and real-time translation.

- Multilingual Support: Supports multiple programming and natural languages, suitable for multilingual development and communication.

- Real-Time Interaction: Designed for real-time interactive scenarios, such as live voice translation and call center agents, providing low-latency responses.

- Reasoning & Logical Processing: Capable of handling complex reasoning tasks and delivering highly logical answers.

Technical Principles of Mercury

-

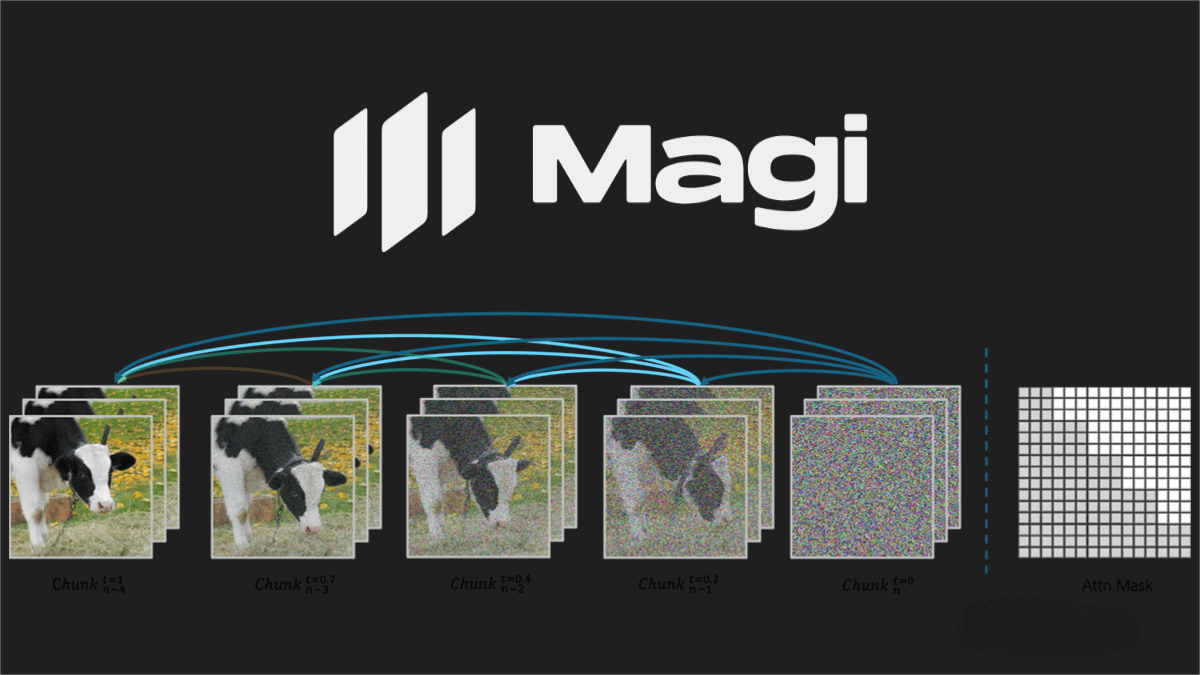

Diffusion Model: Mercury is based on a diffusion model, which generates data by gradually removing noise. The model starts with pure noise and progressively refines it into coherent text through a series of “denoising” steps.

-

Parallel Generation: Unlike traditional autoregressive models that generate tokens one by one, Mercury can produce multiple tokens in parallel, significantly boosting generation speed.

-

Transformer Architecture: Built on the Transformer architecture, Mercury efficiently processes sequential data, leveraging parallel computing resources to enhance model performance.

-

Optimized Training & Inference: Mercury is optimized for both training and inference, fully utilizing modern GPU architectures to maximize computational efficiency and response speed.
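The difference between step-by-step denoising and token-by-token generation can be illustrated with a toy sketch. This is not Mercury's algorithm: the article does not describe its noising process or sampler, so the example assumes a simple masked-token variant in which a fully masked sequence stands in for “pure noise”. The names `toy_denoiser`, `parallel_denoise`, and `tokens_per_step` are hypothetical, and the “model” simply looks up the known target instead of predicting anything.

```python
import random

MASK = "<mask>"


def toy_denoiser(tokens, target):
    """Hypothetical stand-in for a trained model (assumption, not Mercury).

    For every masked position it returns a proposed token and a made-up
    confidence score. A real model would predict these from context.
    """
    return {
        i: (target[i], random.random())
        for i, tok in enumerate(tokens)
        if tok == MASK
    }


def parallel_denoise(target, tokens_per_step=4, seed=0):
    """Refine a fully masked sequence, committing several tokens per step."""
    random.seed(seed)
    tokens = [MASK] * len(target)  # stands in for "pure noise"
    steps = 0
    while MASK in tokens:
        proposals = toy_denoiser(tokens, target)
        # Commit the most confident proposals for this step, all at once.
        best = sorted(proposals, key=lambda i: proposals[i][1], reverse=True)
        for i in best[:tokens_per_step]:
            tokens[i] = proposals[i][0]
        steps += 1
    return tokens, steps


def autoregressive_steps(target):
    """An autoregressive decoder needs one model call per generated token."""
    return len(target)


target = "def add ( a , b ) : return a + b".split()
tokens, steps = parallel_denoise(target, tokens_per_step=4)
print("parallel denoising steps:", steps)
print("autoregressive steps:", autoregressive_steps(target))
```

In this sketch the number of model calls falls from one per token to roughly the sequence length divided by `tokens_per_step`. Whether a real system sees a comparable speedup depends on how many tokens the model can commit per step without losing quality, which the toy denoiser does not capture.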

Mercury Project Links

- Official Website: https://www.inceptionlabs.ai/introducing-mercury

- arXiv Research Paper: https://arxiv.org/pdf/2506.17298

- Online Demo: https://poe.com/Inception-Mercury

Applications of Mercury

- Real-Time Interaction: Suited to chatbots, live translation, and call center agents, where low-latency responses keep conversations and translations flowing smoothly.

- Language Learning: Assists in language acquisition by offering common phrases, grammar exercises, and simulated dialogues, helping users learn new languages more quickly.

- Content Creation: Rapidly generates articles, news reports, ad copy, and more, giving content creators a source of drafts and ideas that can boost productivity.

- Enterprise Solutions: Integrates into customer service systems to create AI-powered support agents that deliver fast and accurate assistance to customers.
