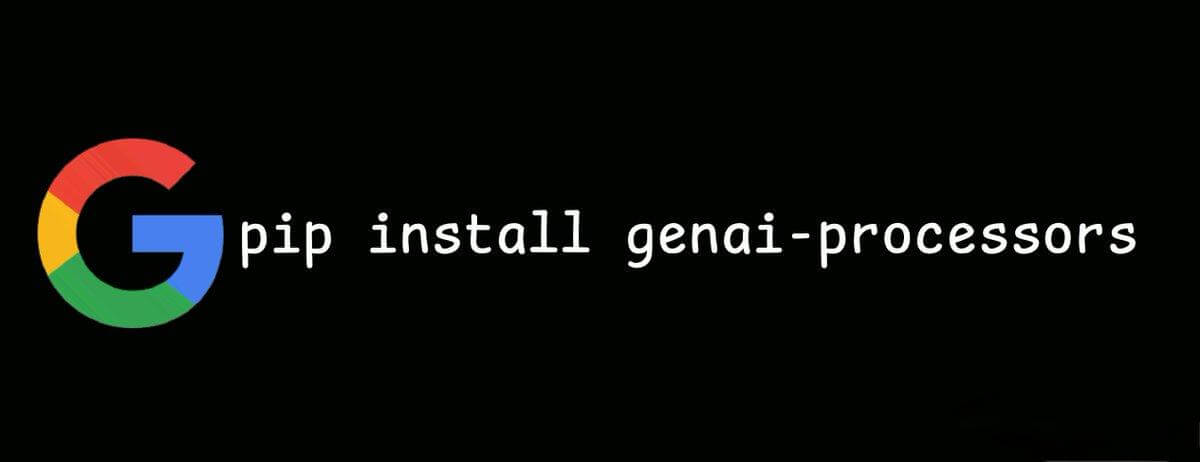

GenAI Processors: Building the ‘Processor Architecture’ for Generative AI Workflows

What are GenAI Processors?

GenAI Processors is an open-source, lightweight Python library developed by Google DeepMind. It enables developers to build modular, parallel, and efficient pipelines for generative AI tasks. Centered around a Processor abstraction, it supports asynchronous, multi-modal content streams (text, audio, images, JSON, etc.).
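The stream-of-parts idea can be made concrete with a minimal sketch in plain `asyncio`. The sketch does not use the library. It assumes a processor can be modeled as an async generator that reads parts from one stream and yields parts to another. `Part` is a hypothetical stand-in for the library's `ProcessorPart`, and `uppercase_text`, `from_list`, and `collect` are illustrative names, not library functions.

```python
import asyncio
from collections.abc import AsyncIterator
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Part:
    """Hypothetical stand-in for a ProcessorPart: content plus MIME type and metadata."""
    content: Any
    mimetype: str = "text/plain"
    metadata: dict = field(default_factory=dict)


async def uppercase_text(stream: AsyncIterator[Part]) -> AsyncIterator[Part]:
    """A processor in miniature: consume parts as they arrive, yield new parts."""
    async for part in stream:
        if part.mimetype == "text/plain":
            yield Part(part.content.upper(), part.mimetype, dict(part.metadata))
        else:
            yield part  # non-text parts (images, audio, ...) pass through unchanged


async def from_list(parts: list[Part]) -> AsyncIterator[Part]:
    """Expose a plain list as an async stream, one part at a time."""
    for part in parts:
        yield part


async def collect(stream: AsyncIterator[Part]) -> list[Part]:
    """Drain an async stream into a list."""
    return [part async for part in stream]


parts = [Part("hello"), Part(b"fake-png", "image/png"), Part("world")]
result = asyncio.run(collect(uppercase_text(from_list(parts))))
print([p.content for p in result])  # ['HELLO', b'fake-png', 'WORLD']
```

The processor only iterates and yields, so it can emit output before the input stream has ended. Streaming pipelines depend on this property.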

What are its main features?

- Modular architecture: Break complex tasks down into reusable `Processor` or `PartProcessor` units that can be composed with the `+` (sequential) and `//` (parallel) operators.
- GenAI API integration: Comes with built-in processors such as `GenaiModel` and `LiveProcessor` for interacting with Gemini models.
- Async and concurrency: Built on Python’s `asyncio` for non-blocking execution and a lower Time To First Token (TTFT).
- Multi-modal stream support: Wraps content and metadata in `ProcessorPart` objects, so text, images, audio, and arbitrary JSON are handled uniformly.
- Streaming tools: Offers utilities to split, merge, and concatenate content streams, which helps when building flexible, real-time pipelines.
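Python operator overloading can imitate the `+` and `//` composition style. The sketch below is a toy that assumes a per-part model, in which each processor maps one part to a list of parts. `PartProc`, `strip`, `upper`, and `length` are hypothetical names. For `//`, the sketch emits the left branch's outputs before the right branch's. That ordering is a choice made for this sketch, not documented library behavior.

```python
import asyncio
from collections.abc import AsyncIterator, Awaitable, Callable


class PartProc:
    """Hypothetical per-part processor: maps one part to a list of output parts."""

    def __init__(self, fn: Callable[[str], Awaitable[list[str]]]):
        self.fn = fn

    async def __call__(self, part: str) -> list[str]:
        return await self.fn(part)

    def __add__(self, other: "PartProc") -> "PartProc":
        # Sequential: every output of self is fed, in order, into other.
        async def chained(part: str) -> list[str]:
            out: list[str] = []
            for mid in await self(part):
                out.extend(await other(mid))
            return out

        return PartProc(chained)

    def __floordiv__(self, other: "PartProc") -> "PartProc":
        # Parallel: run both on the same part concurrently; self's outputs come first.
        async def both(part: str) -> list[str]:
            left, right = await asyncio.gather(self(part), other(part))
            return left + right

        return PartProc(both)

    async def stream(self, parts: AsyncIterator[str]) -> AsyncIterator[str]:
        """Apply this processor to each part of an async stream, in order."""
        async for part in parts:
            for out in await self(part):
                yield out


async def strip(part: str) -> list[str]:
    return [part.strip()]


async def upper(part: str) -> list[str]:
    return [part.upper()]


async def length(part: str) -> list[str]:
    return [str(len(part))]


# `//` binds tighter than `+` in Python, but explicit parentheses read better.
pipeline = PartProc(strip) + (PartProc(upper) // PartProc(length))


async def demo() -> list[str]:
    async def source() -> AsyncIterator[str]:
        for text in ["  hi ", "gemini"]:
            yield text

    return [out async for out in pipeline.stream(source())]


print(asyncio.run(demo()))  # ['HI', '2', 'GEMINI', '6']
```

Python's precedence rules apply to any operator-based API. `//` binds more tightly than `+`, so `a + b // c` parses as `a + (b // c)`. Parenthesizing parallel branches explicitly avoids surprises.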

How does it work?

- Processor abstraction: Each `Processor` implements an async stream interface that consumes and emits `ProcessorPart` objects.
- Asynchronous and parallel execution: Work within and across processors runs concurrently, improving throughput and responsiveness.
- Unified multi-modal processing: A consistent API handles diverse data types, which makes cross-modal tasks simpler to manage.
- Composable logic: Use Python operators (`+`, `//`) to build complex pipeline logic; processors can also be extended or decorated.
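Running work concurrently does not have to scramble the output order. One common pattern starts a task for each part as soon as the part arrives, then awaits those tasks in arrival order. The sketch below shows this pattern in plain `asyncio`; it is not the library's internal implementation. `map_concurrently`, `fake_model_call`, and the latencies in `DELAYS` are hypothetical.

```python
import asyncio
import time
from collections.abc import AsyncIterator, Awaitable, Callable


async def map_concurrently(
    fn: Callable[[str], Awaitable[str]], parts: AsyncIterator[str]
) -> AsyncIterator[str]:
    """Start fn on each part as soon as it arrives; yield results in input order."""
    pending: asyncio.Queue = asyncio.Queue()

    async def feed() -> None:
        try:
            async for part in parts:
                # Schedule the work right away instead of waiting for earlier parts.
                await pending.put(asyncio.create_task(fn(part)))
        finally:
            await pending.put(None)  # sentinel: no more input

    feeder = asyncio.create_task(feed())
    while (task := await pending.get()) is not None:
        yield await task  # awaiting tasks in arrival order keeps the output ordered
    await feeder  # surface any error raised while reading the input


DELAYS = {"first": 0.3, "second": 0.2, "third": 0.1}  # hypothetical latencies


async def fake_model_call(part: str) -> str:
    # Stand-in for a slow model call; earlier parts are deliberately slower.
    await asyncio.sleep(DELAYS[part])
    return part.upper()


async def source() -> AsyncIterator[str]:
    for part in DELAYS:
        yield part


async def main() -> tuple[list[str], float]:
    start = time.perf_counter()
    results = [r async for r in map_concurrently(fake_model_call, source())]
    return results, time.perf_counter() - start


results, elapsed = asyncio.run(main())
print(results)  # ['FIRST', 'SECOND', 'THIRD']
print(f"{elapsed:.2f} s")  # close to the slowest call (0.3 s), not the 0.6 s sum
```

The slowest call sets the wall-clock time here, not the sum of all the calls. The consumer still receives results in input order.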


Application scenarios

- Real-time AI agents: For example, voice input → Gemini response → TTS output; suitable for live interaction and multi-modal interfaces.
- Research agents: Create pipelines for retrieval → reasoning → summarization, well suited to knowledge mining or question answering.
- AI commentary & broadcast systems: Combine processors for event detection, content generation, and audio narration.
- Low-latency AI services: A good fit for use cases with tight latency requirements, such as chatbots, co-pilots, or content generation tools.
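In the real-time agent scenario (voice input → model response → TTS output), the latency benefit comes from every stage consuming parts as they arrive. The sketch below chains three hypothetical async-generator stages (`fake_microphone`, `fake_model`, `fake_tts`) in plain `asyncio`. It calls no Gemini or text-to-speech API, and the 0.1 s chunk interval is made up for illustration.

```python
import asyncio
import time
from collections.abc import AsyncIterator
from typing import Optional


async def fake_microphone() -> AsyncIterator[str]:
    # Hypothetical voice input: one transcribed chunk every 0.1 s.
    for chunk in ["what", "is", "the", "score"]:
        await asyncio.sleep(0.1)
        yield chunk


async def fake_model(chunks: AsyncIterator[str]) -> AsyncIterator[str]:
    # Stand-in for a streaming model: replies to each chunk as soon as it arrives.
    async for chunk in chunks:
        yield f"ack:{chunk}"


async def fake_tts(texts: AsyncIterator[str]) -> AsyncIterator[bytes]:
    # Stand-in for text-to-speech: "encodes" each reply fragment immediately.
    async for text in texts:
        yield text.encode()


async def run_agent() -> tuple[list[bytes], Optional[float], float]:
    start = time.perf_counter()
    first_output_at: Optional[float] = None
    audio: list[bytes] = []
    async for frame in fake_tts(fake_model(fake_microphone())):
        if first_output_at is None:
            first_output_at = time.perf_counter() - start  # time to first output
        audio.append(frame)
    return audio, first_output_at, time.perf_counter() - start


audio, ttft, total = asyncio.run(run_agent())
print(audio)  # [b'ack:what', b'ack:is', b'ack:the', b'ack:score']
print(f"first output after {ttft:.2f} s, done after {total:.2f} s")
```

The first encoded frame is ready after roughly one chunk interval, while the full run takes about four. This is the same effect behind a low Time To First Token.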
