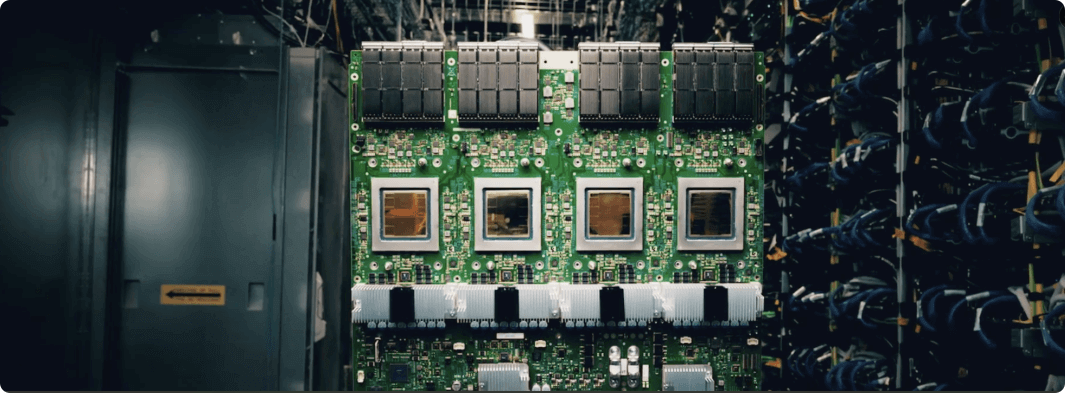

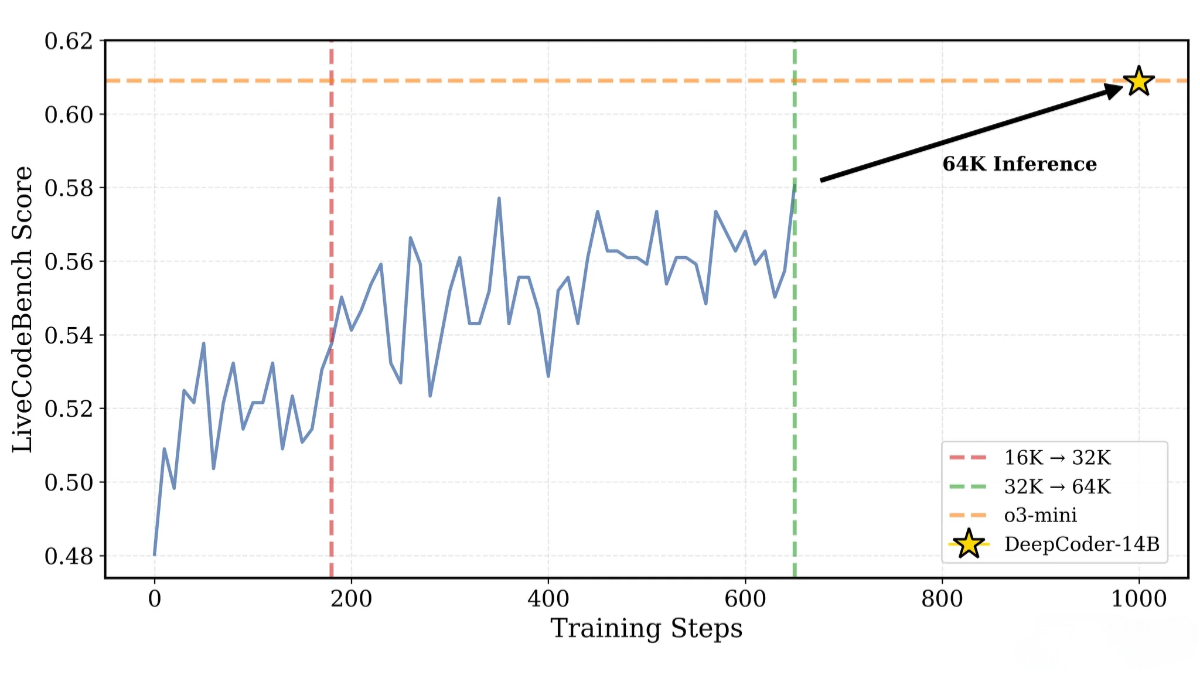

Google’s latest AI chip rivals Nvidia’s B200 and is designed specifically for inference. In its largest configuration, it can perform 42.5 quintillion floating-point operations per second (42.5 exaFLOPS).

Google's First AI Inference-Specialized TPU Chip is Here, Tailored for Deep-Thinking Models. Code-na...