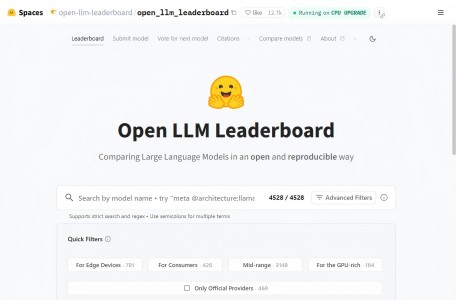

The Open LLM Leaderboard is a ranking of open-source large language models launched by Hugging Face, the largest community for large models and datasets. It is built on top of the Eleuther AI Language Model Evaluation Harness.

The community releases large numbers of large language models (LLMs) and chatbots, often with exaggerated performance claims, which makes it difficult to identify the real progress made by the open-source community and the current state-of-the-art models. Hugging Face therefore uses the Eleuther AI Language Model Evaluation Harness, a unified framework for testing generative language models on a large number of different evaluation tasks, to evaluate models on four key benchmarks.

Evaluation Benchmarks of the Open LLM Leaderboard

- AI2 Reasoning Challenge (25-shot): A set of elementary school science questions.

- HellaSwag (10-shot): A commonsense reasoning test that is easy for humans (about 95% accuracy) but challenging for state-of-the-art (SOTA) models.

- MMLU (5-shot): Measures the multitask accuracy of text models. The test covers 57 tasks, including basic mathematics, American history, computer science, and law.

- TruthfulQA (0-shot): Measures the tendency of models to reproduce common misinformation found online.
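
The "k-shot" labels above give the number of solved examples placed in the prompt before the actual test question. The Python sketch below illustrates that idea under stated assumptions: the `Question:`/`Answer:` prompt template and the helper names `build_few_shot_prompt` and `average_score` are hypothetical and are not taken from the Evaluation Harness, and combining the four scores with an unweighted mean is an assumption made for illustration rather than something stated here.

```python
from statistics import mean

# Few-shot settings as listed above: benchmark name -> number of in-context examples.
BENCHMARK_SHOTS = {
    "AI2 Reasoning Challenge": 25,
    "HellaSwag": 10,
    "MMLU": 5,
    "TruthfulQA": 0,
}


def build_few_shot_prompt(examples, question, num_shots):
    """Prepend up to `num_shots` solved (question, answer) pairs to `question`.

    The template is a hypothetical one; real evaluation frameworks define
    their own prompt format for each task.
    """
    parts = [f"Question: {q}\nAnswer: {a}" for q, a in examples[:num_shots]]
    # The final question is left unanswered for the model to complete.
    parts.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(parts)


def average_score(scores):
    """Unweighted mean over the four benchmarks (an assumed aggregation)."""
    missing = set(BENCHMARK_SHOTS) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return mean(scores[name] for name in BENCHMARK_SHOTS)
```

With `num_shots=0`, as for TruthfulQA, the prompt contains only the test question, while a 25-shot prompt carries 25 solved examples first, so higher shot counts give the model more in-context guidance at the cost of longer prompts.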

Similar Sites

OpenCompass

H2O Eval Studio

MMLU

PubMedQA

MMBench

Chatbot Arena

C-Eval