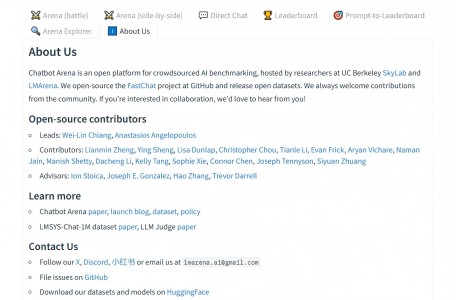

Chatbot Arena is a benchmark platform for large language models (LLMs) that runs anonymous, randomized head-to-head battles and collects crowdsourced votes. It is run by LMSYS Org, a research organization jointly founded by the University of California, Berkeley, the University of California, San Diego, and Carnegie Mellon University.

You can enter the battle platform through the demo link. Type in a question you are interested in and submit it. Two anonymous models then each generate an answer to the same question. You rate the answers by choosing one of four options: Model A is better, Model B is better, a tie, or both are poor. Multi-turn conversations are supported. The votes are then aggregated with the Elo rating system to rank the overall capabilities of the models. (You can also pick a specific model to see how it performs, but those votes are not included in the final ranking.)
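To make the ranking step concrete, here is a minimal Python sketch of a standard online Elo update driven by pairwise votes. It illustrates the general Elo technique and is not the platform's actual code: the function names, the outcome labels, the starting rating of 1000, the K-factor of 32, and the choice to score "both are poor" as a tie are all assumptions made for this example.

```python
def expected_score(rating_a, rating_b):
    """Probability that A beats B under the standard Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Assumed mapping from a vote to A's score; "both_bad" is treated as a tie here.
SCORE_FOR_A = {"model_a": 1.0, "model_b": 0.0, "tie": 0.5, "both_bad": 0.5}


def update_elo(ratings, model_a, model_b, outcome, k=32.0, initial=1000.0):
    """Apply one vote to the ratings dict in place and return it.

    k and initial are illustrative defaults, not values taken from the platform.
    """
    ra = ratings.get(model_a, initial)
    rb = ratings.get(model_b, initial)
    score_a = SCORE_FOR_A[outcome]
    exp_a = expected_score(ra, rb)
    # A gains what B loses, so the sum of the two ratings is unchanged.
    ratings[model_a] = ra + k * (score_a - exp_a)
    ratings[model_b] = rb + k * ((1.0 - score_a) - (1.0 - exp_a))
    return ratings


if __name__ == "__main__":
    # Hypothetical model names and votes, for illustration only.
    battles = [
        ("model-x", "model-y", "model_a"),
        ("model-y", "model-z", "tie"),
        ("model-x", "model-z", "model_b"),
    ]
    ratings = {}
    for a, b, vote in battles:
        update_elo(ratings, a, b, vote)
    for name, score in sorted(ratings.items(), key=lambda kv: -kv[1]):
        print(f"{name}: {score:.1f}")
```

A win against an equally rated opponent moves each rating by k/2 points, while an upset win over a higher-rated model moves them by more. Because online Elo depends on the order in which votes arrive, a production leaderboard may prefer an order-independent fit over the same votes.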

Similar Sites

Open LLM Leaderboard

C-Eval

FlagEval

CMMLU

OpenCompass

HELM

MMLU