Open LLM Leaderboard

The open-source large model ranking list launched by Hugging Face.

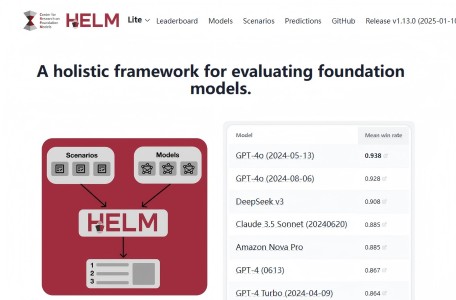

The large model evaluation system launched by Stanford University.

HELM, short for Holistic Evaluation of Language Models, is a large model evaluation system launched by Stanford University. This evaluation method mainly consists of three modules: scenarios, adaptations, and metrics. Each evaluation run requires specifying a scenario, a prompt for the adapted model, and one or more metrics. Its evaluation mainly focuses on English, with seven metrics including accuracy, uncertainty/calibration, robustness, fairness, bias, toxicity, and inference efficiency. The tasks include question answering, information retrieval, summarization, text classification, etc.