RustGPT – An AI Language Model for Automatic Text Completion Based on Input Content

What is RustGPT?

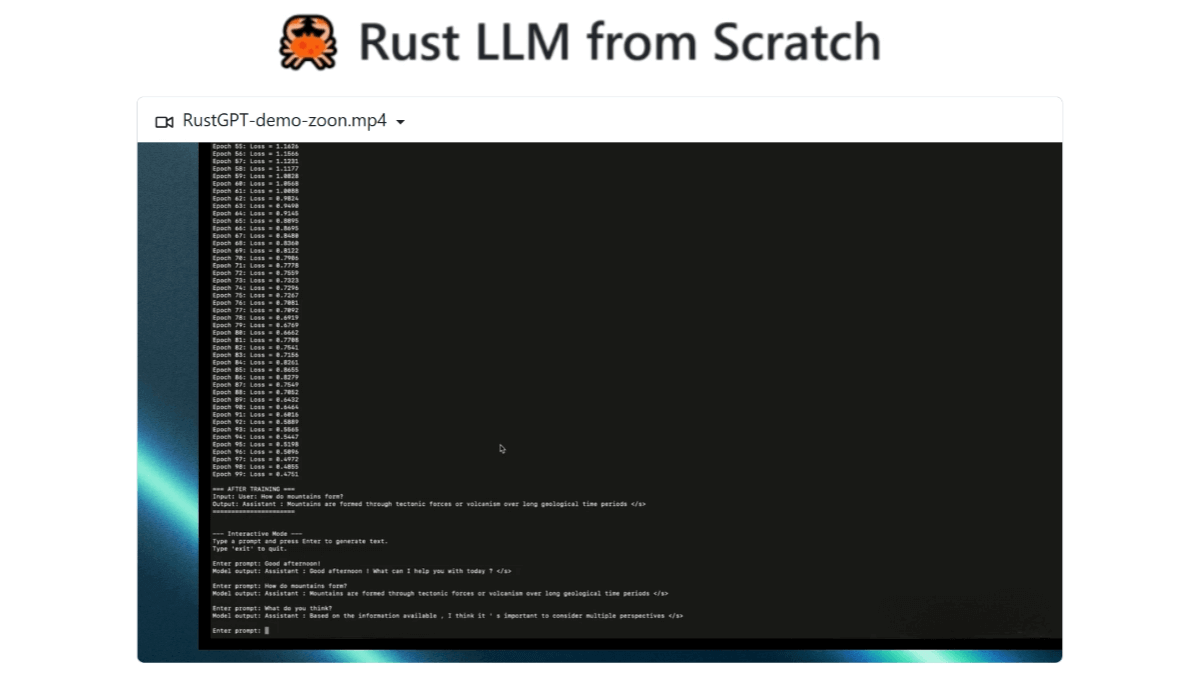

RustGPT is a Transformer-based language model written in Rust. It is built from scratch without external machine learning frameworks and uses only the ndarray library for matrix operations. The project covers pretraining for factual text completion, instruction fine-tuning for conversational use, and an interactive chat mode for testing. Its modular architecture keeps concerns clearly separated, which makes the code easier to understand and extend. RustGPT is aimed at developers interested in Rust and machine learning and works well as a learning project.

Key Features of RustGPT

- Factual text completion: Generates coherent continuations from input text fragments.
- Instruction fine-tuning: Fine-tuned to understand human instructions and generate text that follows them.
- Interactive chat mode: Lets users enter prompts or questions and receive responses interactively.
- Dynamic vocabulary: Builds the vocabulary dynamically, so it expands automatically based on the input data and adapts to different text domains.
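The dynamic vocabulary idea can be sketched in a few lines of Rust. This is a minimal illustration under stated assumptions, not RustGPT's actual code: the `Vocab` type, its method names, and the whitespace-based splitting are all hypothetical and chosen only to show how a vocabulary can grow as new tokens appear.

```rust
use std::collections::HashMap;

/// Hypothetical vocabulary that grows as unseen tokens appear.
/// The type and method names are illustrative, not taken from RustGPT.
#[derive(Debug, Default)]
pub struct Vocab {
    token_to_id: HashMap<String, usize>,
    id_to_token: Vec<String>,
}

impl Vocab {
    pub fn new() -> Self {
        Self::default()
    }

    /// Return the id of `token`, assigning the next free id if it is new.
    pub fn get_or_insert(&mut self, token: &str) -> usize {
        if let Some(&id) = self.token_to_id.get(token) {
            return id;
        }
        let id = self.id_to_token.len();
        self.token_to_id.insert(token.to_string(), id);
        self.id_to_token.push(token.to_string());
        id
    }

    /// Encode text into ids, expanding the vocabulary on the fly.
    /// Splitting on whitespace is an assumption made for brevity.
    pub fn encode(&mut self, text: &str) -> Vec<usize> {
        text.split_whitespace()
            .map(|token| self.get_or_insert(token))
            .collect()
    }

    /// Map ids back to tokens, silently skipping ids that are unknown.
    pub fn decode(&self, ids: &[usize]) -> String {
        ids.iter()
            .filter_map(|&id| self.id_to_token.get(id).map(String::as_str))
            .collect::<Vec<_>>()
            .join(" ")
    }

    /// Number of distinct tokens seen so far.
    pub fn len(&self) -> usize {
        self.id_to_token.len()
    }

    pub fn is_empty(&self) -> bool {
        self.id_to_token.is_empty()
    }
}
```

Calling `encode` on a new sentence assigns fresh ids to unseen words and reuses ids for repeated ones, so the vocabulary size tracks the data rather than being fixed in advance. A real model would also need to resize its embedding table when the vocabulary grows, which this sketch leaves out.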

Technical Principles of RustGPT

- Transformer-based architecture: Uses the Transformer architecture, a neural network design built on attention mechanisms that can handle long sequences and capture long-range dependencies. It includes multi-head self-attention and feed-forward neural networks.
- Custom tokenization: Uses a custom tokenization method to split text into tokens (words, subwords, or characters). The tokens are then embedded into a high-dimensional vector space as model inputs.
- Matrix operations: Relies heavily on matrix operations implemented with the ndarray library, including embedding layer multiplications, the computations in multi-head self-attention, and the operations in feed-forward networks.
- Pretraining and fine-tuning:
  - Pretraining: Trained on large-scale text data to learn fundamental language patterns and structures. The pretraining objective is to maximize the probability of predicting the next token.
  - Instruction fine-tuning: Builds on pretraining by optimizing the model to follow human instructions for specific tasks.
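Two of the computations named in the list above, self-attention and the next-token objective, can be sketched as follows. This is a simplified illustration and not RustGPT's implementation: it uses plain `Vec<f32>` instead of ndarray to stay dependency-free, shows a single attention head without learned projections, and assumes a causal mask (each token attends only to itself and earlier tokens). All function names are hypothetical.

```rust
/// Numerically stable softmax over one row of scores.
pub fn softmax(row: &[f32]) -> Vec<f32> {
    let max = row.iter().cloned().fold(f32::NEG_INFINITY, f32::max);
    let exps: Vec<f32> = row.iter().map(|&x| (x - max).exp()).collect();
    let sum: f32 = exps.iter().sum();
    exps.iter().map(|&e| e / sum).collect()
}

/// Dot product of two equal-length vectors.
fn dot(a: &[f32], b: &[f32]) -> f32 {
    a.iter().zip(b).map(|(x, y)| x * y).sum()
}

/// Single-head scaled dot-product attention with a causal mask.
/// `q`, `k`, `v` hold one row per token and are assumed to have the
/// same number of rows; rows of `q` and `k` share the same length d.
pub fn causal_attention(q: &[Vec<f32>], k: &[Vec<f32>], v: &[Vec<f32>]) -> Vec<Vec<f32>> {
    // Scale scores by sqrt(d) to keep them in a reasonable range.
    let scale = q.first().map_or(1.0, |row| (row.len() as f32).sqrt());
    let d_v = v.first().map_or(0, |row| row.len());
    q.iter()
        .enumerate()
        .map(|(i, q_i)| {
            // Causal mask: token i only sees positions 0..=i.
            let scores: Vec<f32> = k[..=i].iter().map(|k_j| dot(q_i, k_j) / scale).collect();
            let weights = softmax(&scores);
            // Output row i is the attention-weighted sum of value rows.
            let mut out = vec![0.0; d_v];
            for (w, v_j) in weights.iter().zip(&v[..=i]) {
                for (o, x) in out.iter_mut().zip(v_j) {
                    *o += w * x;
                }
            }
            out
        })
        .collect()
}

/// Cross-entropy loss for one position: -ln p(target | context).
/// Minimizing it maximizes the probability of the correct next token.
pub fn next_token_loss(logits: &[f32], target: usize) -> f32 {
    -softmax(logits)[target].ln()
}
```

During pretraining, a loss like `next_token_loss` would be averaged over every position in a sequence, with the logits produced by the final layer of the model. Multi-head attention repeats the attention step with several independently projected copies of `q`, `k`, and `v` and concatenates the results.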


Project Repository

Application Scenarios of RustGPT

- Text completion: Automatically generates logical continuations for partially written text, helping users finish writing faster.
- Creative writing: Assists writers and content creators by generating stories, poems, articles, and other creative text.
- Chatbots: Powers chatbots for customer service, virtual assistants, and other conversational AI use cases.
- Machine translation: Translates text from one language to another, bridging language barriers.
- Multilingual dialogue: Supports multi-language interactions, enabling cross-lingual communication.
