What is Ling-1T?

Ling-1T is an open-source trillion-parameter language model released by Ant Group, positioned as a “flagship non-thinking model.” Built on a Mixture of Experts (MoE) architecture, it contains 1 trillion total parameters, of which approximately 51 billion are activated per token. The model supports a 128K context length, making it well suited to long-document tasks. Ling-1T aims to deliver high-quality reasoning results within a limited number of output tokens, which keeps inference efficient. It performs strongly in programming, mathematical reasoning, knowledge comprehension, creative writing, and other tasks, and is positioned among the leading open-source models.

Key Features of Ling-1T

- Efficient Reasoning: Produces high-quality reasoning results within a limited number of output tokens, which keeps inference efficient and problem-solving fast.
- Long-Text Processing: Supports a 128K context length, enabling complex reasoning over long documents in fields such as law, finance, and scientific research.
- Creative Writing: Generates creative content such as copywriting, scripts, and poetry for content marketing and advertising.
- Multilingual Support: Handles tasks in English and other languages, with some degree of multilingual ability.
- Multi-Task Capability: Performs well in programming assistance, math problem solving, knowledge Q&A, and multi-turn conversation, and can generate high-quality code and designs.
- Application Integration: Can be integrated into tools such as payment apps, financial assistants, and health assistants to make them more intelligent and automated.

Technical Principles of Ling-1T

- MoE Architecture: Built on a Mixture of Experts (MoE) framework with 1 trillion total parameters and 256 experts. Only about 51 billion parameters are activated per token, which significantly reduces inference cost while maintaining high performance. The initial layers are dense, and later layers switch to MoE to reduce load imbalance in the shallow part of the network.
- High-Density Reasoning Corpus: Pretrained on more than 20T tokens of high-quality, reasoning-dense data to build logical depth and reasoning capability. Pretraining proceeds in three stages:
  - Stage 1: 10T tokens of high-knowledge-density corpus.
  - Stage 2: 10T tokens of high-reasoning-density corpus.
  - Mid-training: Extends the context to 128K tokens and incorporates chain-of-thought data.
- Efficient Training: Uses FP8 precision throughout training, which saves memory and speeds up training compared with BF16. In a 1T-token comparison experiment, the loss deviation relative to BF16 was only 0.1%.
- LPO Optimization: Employs Linguistics-Unit Policy Optimization (LPO), which optimizes at the sentence level to better align with semantic logic and improve reasoning and generation quality.
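The sparse activation described under MoE Architecture can be illustrated with a small top-k routing sketch. This is a generic illustration of MoE routing, not Ling-1T's actual router: the expert count (256) and the parameter figures come from the text above, while `TOP_K = 8`, the random router logits, and the helper names `softmax` and `route_token` are assumptions made for the example.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k):
    """Pick the top_k highest-scoring experts for one token.

    Returns (expert_index, weight) pairs whose weights are renormalized
    to sum to 1 over the selected experts.
    """
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


NUM_EXPERTS = 256  # expert count stated in the article
TOP_K = 8          # assumption: the article does not state how many experts fire per token

# Stand-in router scores for a single token (a real router computes these
# from the token's hidden state).
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
selected = route_token(logits, TOP_K)

# Share of the model that does work for one token, using the article's figures.
TOTAL_PARAMS = 1_000_000_000_000
ACTIVE_PARAMS = 51_000_000_000
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS

print(f"experts used for this token: {sorted(i for i, _ in selected)}")
print(f"active parameter share: {active_fraction:.1%}")
```

Only the selected experts run for a given token, which is why the active parameter count is a small share of the total. The active share is not simply `TOP_K / NUM_EXPERTS`, because dense layers and other shared components run for every token.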

Project Links

-

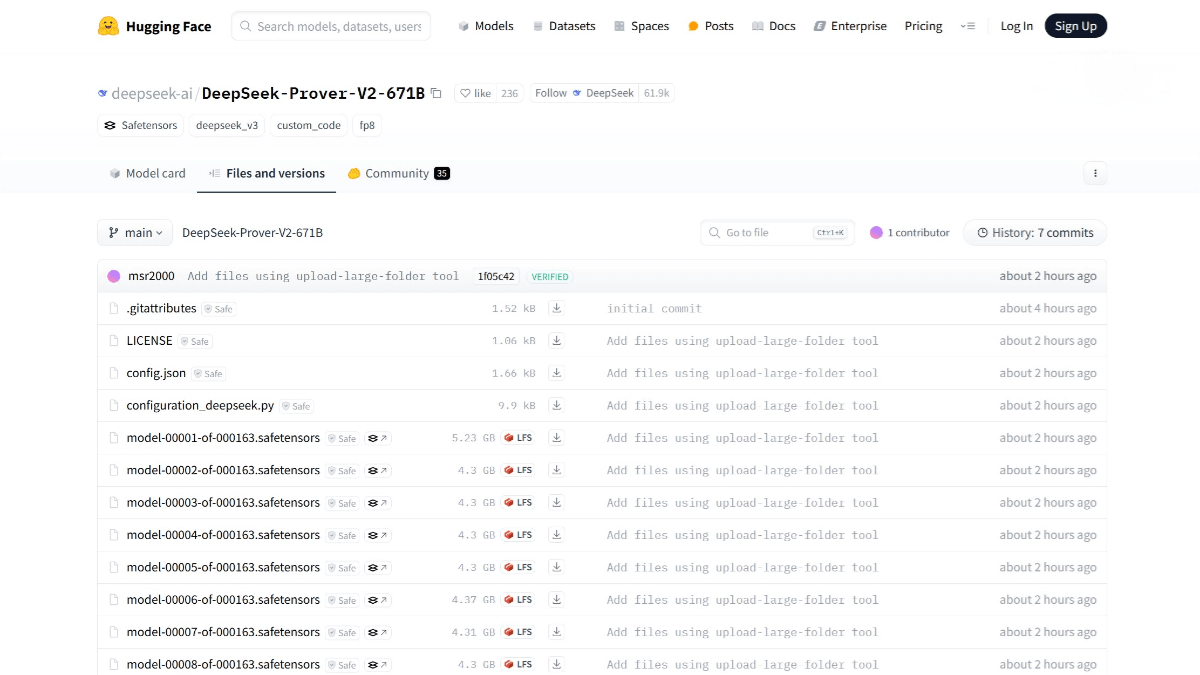

HuggingFace Model Repository: https://huggingface.co/inclusionAI/Ling-1T
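A minimal sketch of loading the repository above with the Hugging Face `transformers` library is given here. It rests on several assumptions: that the repository ships a chat template, that it needs `trust_remote_code=True`, and that the `transformers`, `torch`, and `accelerate` packages are installed on hardware with enough memory for a trillion-parameter checkpoint. The helper names `build_messages` and `generate` and the system prompt text are hypothetical.

```python
MODEL_ID = "inclusionAI/Ling-1T"  # repository name taken from the link above


def build_messages(user_prompt, system_prompt="You are a helpful assistant."):
    """Build a chat-style message list (the system prompt is a placeholder)."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate(user_prompt, max_new_tokens=256):
    """Load the model and return its reply to a single prompt.

    Loading a model of this size needs a multi-accelerator setup; this
    function is a sketch of the call sequence, not a deployment recipe.
    """
    # Imported lazily so build_messages works without these packages installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype="auto",      # use the dtype stored in the checkpoint
        device_map="auto",       # spread layers across available devices (needs accelerate)
        trust_remote_code=True,  # assumption: the repo may define a custom architecture
    )

    # Assumption: the tokenizer provides a chat template.
    text = tokenizer.apply_chat_template(
        build_messages(user_prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens and decode only the newly generated ones.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate("Summarize the key ideas of Mixture of Experts models.")` would return the reply as a string. The model card in the repository is the authoritative source for the supported loading and serving options.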

Application Scenarios of Ling-1T

- Programming Assistance: Generates high-quality code snippets that help developers implement features quickly and work more efficiently.
- Mathematical Problem Solving: Performs well in mathematical reasoning and can work through complex problems such as competition-level questions.
- Knowledge Q&A: Draws on strong knowledge comprehension to answer a wide range of factual questions accurately.
- Creative Writing: Generates creative content including copywriting, scripts, and poetry, supporting content creation and advertising needs.