DeepSeek-Prover-V2-671B – An open-source mathematical reasoning large model launched by DeepSeek

What is DeepSeek-Prover-V2-671B

DeepSeek-Prover-V2-671B is a large language model specialized for mathematical reasoning, released by DeepSeek on Hugging Face. With 671 billion parameters, it is the successor to last year’s Prover-V1.5, DeepSeek’s earlier math-focused model.

The model uses a Multi-head Latent Attention (MLA) architecture, which compresses the KV cache to reduce memory usage and computational overhead during inference. It supports multiple numeric precisions, including BF16, FP8, and F32, so lower-precision formats can be used to cut memory use and speed up training and deployment.
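To give a rough sense of why precision matters at this scale, the sketch below estimates the raw size of the weights at each precision. It assumes, purely for illustration, that every one of the 671 billion parameters is stored in a single format; a real checkpoint may mix formats, and the estimate ignores activations and the KV cache. The function name `weight_footprint_gb` is hypothetical.

```python
# Bytes needed to store one parameter in each precision named above.
BYTES_PER_PARAM = {"F32": 4, "BF16": 2, "FP8": 1}

NUM_PARAMS = 671e9  # 671 billion parameters, as stated in the article


def weight_footprint_gb(num_params: float, dtype: str) -> float:
    """Return the raw weight size in gigabytes (1 GB = 1e9 bytes).

    Assumption: all parameters use the same dtype (illustrative only).
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for dtype in ("F32", "BF16", "FP8"):
        print(f"{dtype}: {weight_footprint_gb(NUM_PARAMS, dtype):,.0f} GB")
```

Under these assumptions, halving the bytes per parameter halves the storage needed for the weights, which is the main reason lower-precision formats are attractive for deployment.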

Key Features of DeepSeek-Prover-V2-671B

- Mathematical Problem Solving: Handles a wide range of problems from basic algebra to advanced mathematics. Excels at theorem proving and complex computations.
- Formal Reasoning Training: Trained for formal reasoning using the Lean 4 framework, combining reinforcement learning with large-scale synthetic datasets to enhance automated proof capabilities.
- Efficient Training & Deployment: Utilizes the efficient safetensors file format and supports multiple computation precisions (BF16, FP8, F32), making it faster and more efficient to train and deploy.
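For readers unfamiliar with Lean 4, the following is a minimal sketch of what a formal statement and its machine-checked proof look like. These two toy theorems are illustrative only and are not taken from the model's training data or outputs; the theorem names are hypothetical, and only core Lean 4 (no extra libraries) is assumed.

```lean
-- Term-style proof: commutativity of addition on natural numbers,
-- closed by the core library lemma `Nat.add_comm`.
theorem add_comm_example (a b : Nat) : a + b = b + a :=
  Nat.add_comm a b

-- Tactic-style proof: swapping the two sides of a conjunction.
-- `constructor` splits the goal `q ∧ p` into the goals `q` and `p`.
theorem and_swap_example (p q : Prop) (h : p ∧ q) : q ∧ p := by
  constructor
  · exact h.right
  · exact h.left
```

A prover model is asked to produce the part after `:=`, and the Lean checker either accepts the proof or rejects it, which gives an unambiguous training and evaluation signal.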

Technical Highlights

- Multi-head Latent Attention (MLA) Architecture: The model adopts an advanced MLA architecture, which compresses KV cache to reduce memory and compute requirements during inference, enabling efficient operation even in resource-constrained environments.
- Mixture of Experts (MoE) Architecture: Incorporates a Mixture of Experts design, combined with formal reasoning training via Lean 4, leveraging reinforcement learning and synthetic data to significantly enhance automated reasoning.
- File Format & Precision Support: Built with the safetensors format and supports BF16, FP8, and F32 computation, optimizing training and deployment for speed and efficiency.
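The effect of KV cache compression can be sketched with simple arithmetic. The example below compares the per-token cache size of standard multi-head attention, which stores full keys and values for every head, with a latent-style cache that stores one compressed vector plus a small positional key per layer. All dimensions (61 layers, 128 heads, head size 128, latent size 512, positional key size 64) are illustrative assumptions and are not stated in this article; the function names are hypothetical.

```python
def mha_kv_bytes_per_token(n_layers: int, n_heads: int, head_dim: int,
                           bytes_per_value: int = 2) -> int:
    """Standard attention: cache a key and a value vector for every head."""
    return 2 * n_layers * n_heads * head_dim * bytes_per_value


def latent_kv_bytes_per_token(n_layers: int, latent_dim: int, rope_dim: int,
                              bytes_per_value: int = 2) -> int:
    """Latent-style cache: one compressed vector plus a small positional
    key per layer, shared across all heads."""
    return n_layers * (latent_dim + rope_dim) * bytes_per_value


if __name__ == "__main__":
    # Illustrative dimensions (assumptions, not confirmed by the article).
    full = mha_kv_bytes_per_token(n_layers=61, n_heads=128, head_dim=128)
    latent = latent_kv_bytes_per_token(n_layers=61, latent_dim=512, rope_dim=64)
    print(f"standard cache: {full:,} bytes per token")
    print(f"latent cache:   {latent:,} bytes per token")
    print(f"reduction:      {full / latent:.1f}x")
```

Because the cache grows linearly with sequence length, a smaller per-token footprint translates directly into longer contexts or larger batches on the same hardware.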

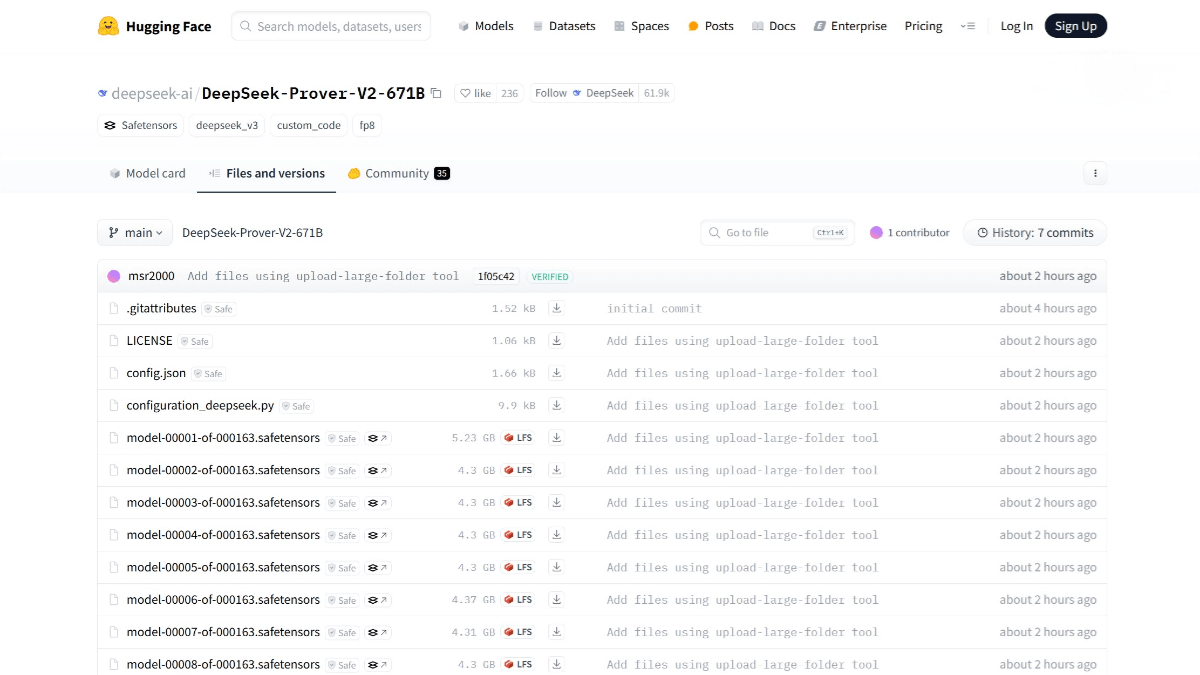

Project Repository

- Hugging Face Model Hub: https://huggingface.co/deepseek-ai/DeepSeek-Prover-V2-671B

Application Scenarios

- Education: A powerful teaching assistant for solving complex math problems, supporting both students and educators.
- Scientific Research: Aids researchers in complex mathematical modeling and theoretical validation.
- Engineering Design: Useful in optimizing designs and conducting simulation tests.
- Financial Analysis: Supports tasks like risk assessment and investment strategy analysis.
- Software Development: Assists developers in algorithm design and performance optimization.
