DiaMoE-TTS – a multi-dialect TTS framework open-sourced by Tsinghua University in collaboration with Giant Network

What is DiaMoE-TTS?

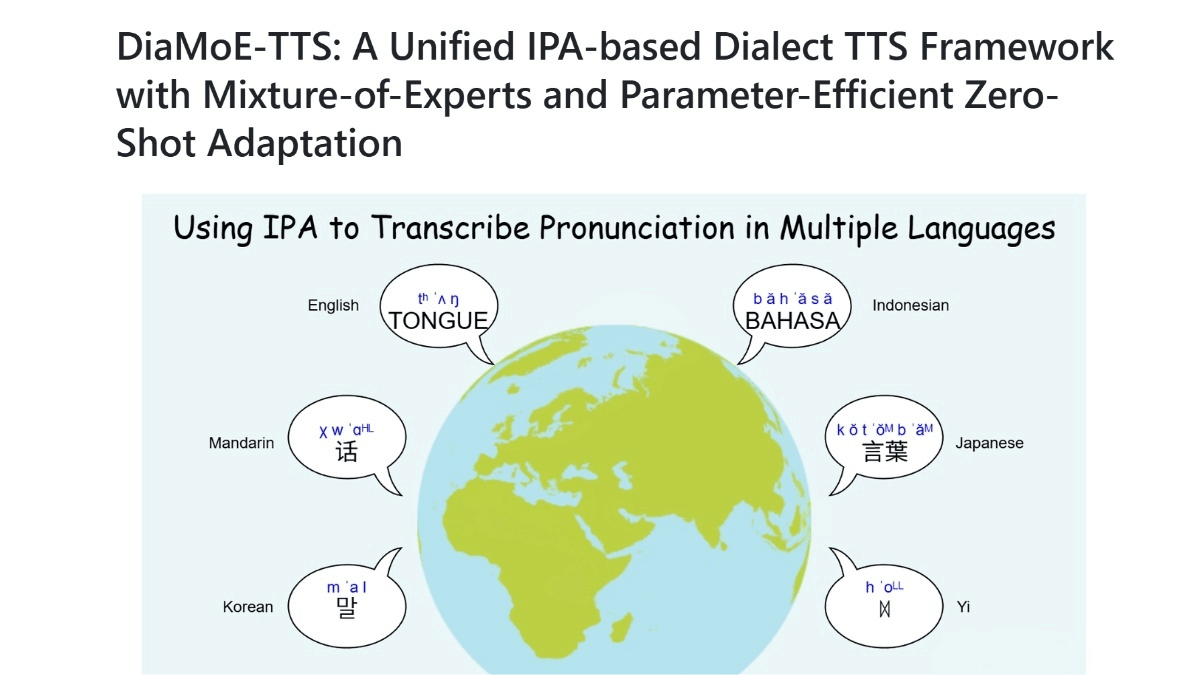

DiaMoE-TTS is a multi-dialect text-to-speech (TTS) framework jointly developed by Tsinghua University and Giant Network. The framework is based on a unified International Phonetic Alphabet (IPA) input system, combined with a dialect-aware Mixture-of-Experts (MoE) architecture and low-resource adaptation strategy (PEFT), enabling low-cost and low-barrier multi-dialect speech synthesis. It supports a wide range of dialects and minor languages, allowing rapid modeling with limited data while maintaining high efficiency and flexibility. DiaMoE-TTS is fully open-sourced, including data, code, and methods, promoting dialect preservation and cultural heritage, giving niche languages a voice in the digital world.

Main Features of DiaMoE-TTS

-

Multi-Dialect Speech Synthesis: Supports various dialects and minor languages, including Cantonese, Minnan, Wu, and can be extended to special forms such as Peking opera rhymes, enabling niche languages to be spoken naturally.

-

Low-Resource Adaptation: Using PEFT strategies and data augmentation, the framework can quickly adapt to new dialects with only a few hours of speech data, synthesizing natural and fluent speech.

-

High Scalability: Fully open-source pipeline with complete data preprocessing, training, and inference code, supporting multiple languages and facilitating reproducibility and extension by researchers and developers.

-

High Naturalness: The dialect-aware MoE architecture dynamically selects expert networks, preserving the unique timbre and prosody of each dialect to improve speech naturalness.

Technical Principles

-

Unified IPA Frontend: Uses the IPA as a standardized input system, mapping all dialects into the same phoneme space. This eliminates cross-dialect discrepancies, ensuring consistent training and strong generalization.

-

Dialect-Aware MoE Architecture: Multiple expert networks are introduced, each focusing on one or several dialects, avoiding “style averaging” by a single network. The IPA input automatically selects the most suitable expert network, and an auxiliary dialect classification loss enhances expert differentiation.

-

Low-Resource Adaptation (PEFT): Incorporates Conditioning Adapter and LoRA in the text embedding and attention layers, allowing dialect expansion with minimal parameter tuning while keeping the backbone and MoE modules frozen. Pitch and speed perturbations further improve synthesis under low-resource conditions.

-

Multi-Stage Training: Builds on the original F5-TTS checkpoint and pre-trains with IPA phoneme-converted data for smooth input transition. Jointly models multiple open-source dialect datasets to activate the MoE structure, learning shared features while distinguishing dialect-specific pronunciation patterns. Dynamic gating and auxiliary dialect classification loss optimize expert routing and capture unique dialect characteristics. For new dialects with only a few hours of data, PEFT combined with data augmentation enables efficient transfer learning while preserving existing knowledge.

Project Resources

-

GitHub Repository: https://github.com/GiantAILab/DiaMoE-TTS

-

HuggingFace Model Hub: https://huggingface.co/RICHARD12369/DiaMoE_TTS

-

arXiv Paper: https://www.arxiv.org/pdf/2509.22727

Application Scenarios

-

Education: Provides vivid speech synthesis tools for teaching dialects and minor languages, helping students better learn and master pronunciations.

-

Cultural Preservation: Assists in preserving and passing on dialects and minor languages, recording and recreating endangered dialects to maintain cultural diversity.

-

Virtual Humans and Digital Content: Generates diverse dialect speech for virtual humans and digital assistants, enriching character expressiveness and enhancing user experience.

-

Digital Tourism: Offers multilingual dialect audio guides at tourist sites, strengthening visitors’ cultural recognition and sense of connection.

-

Cross-Border Communication: Supports speech synthesis in multiple languages and dialects, facilitating communication and understanding among people from different linguistic backgrounds.

Related Posts