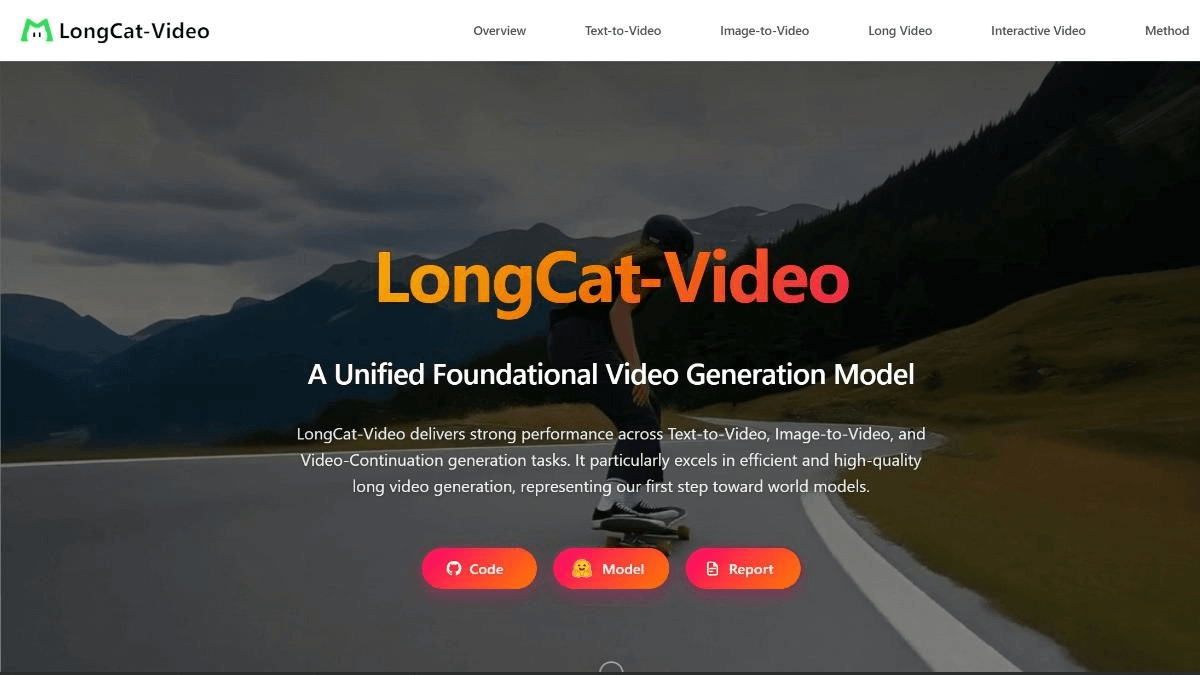

What is LongCat-Video?

LongCat-Video is an open-source video generation model with 13.6 billion parameters, developed by Meituan’s LongCat team. It supports Text-to-Video, Image-to-Video, and Video-Continuation, and is particularly strong at efficiently generating high-quality long videos. After optimization with multi-reward reinforcement learning based on Group Relative Policy Optimization (GRPO), the model performs comparably to leading open-source video generation models and the latest commercial solutions on both internal and public benchmarks.

Main Features of LongCat-Video

1. Long Video Generation:

Pretrained on video continuation tasks, LongCat-Video can generate videos lasting several minutes without noticeable color drift or quality degradation.

2. Unified Multi-Task Architecture:

Text-to-Video, Image-to-Video, and Video-Continuation tasks are unified under a single video generation framework, enabling all tasks to be handled by one model.

3. Efficient Inference:

With a coarse-to-fine generation strategy and Block Sparse Attention technology, the model can generate 720p, 30fps videos within minutes.

4. Multi-Reward Reinforcement Learning Optimization:

Using the Group Relative Policy Optimization (GRPO) approach with multiple reward signals, LongCat-Video achieves performance comparable to leading open-source and commercial video generation systems in both internal and public evaluations.

Technical Principles of LongCat-Video

1. Unified Architecture:

LongCat-Video employs a single, unified framework that integrates Text-to-Video, Image-to-Video, and Video-Continuation tasks. By sharing the same architecture and parameters, it efficiently handles multiple generation tasks.
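One way to picture a single model serving all three tasks is to treat them as the same generation problem that differs only in how many clean frames are supplied as conditioning: none for Text-to-Video, one for Image-to-Video, and several for Video-Continuation. The sketch below illustrates that idea under this assumption. `GenerationRequest`, `conditioning_frames`, and `task_name` are hypothetical names, not part of the LongCat-Video codebase.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical stand-in for a frame (or frame latent); real models use tensors.
Frame = List[float]


@dataclass
class GenerationRequest:
    """Hypothetical request type, not the LongCat-Video API."""
    prompt: str
    image: Optional[Frame] = None                      # given for Image-to-Video
    video: List[Frame] = field(default_factory=list)   # given for Video-Continuation


def conditioning_frames(req: GenerationRequest) -> List[Frame]:
    """Return the clean frames the shared model would be conditioned on.

    Assumption: the three tasks differ only in this list, so a single set
    of weights can serve all of them.
    """
    if req.video:
        return list(req.video)   # Video-Continuation: several prior frames
    if req.image is not None:
        return [req.image]       # Image-to-Video: exactly one frame
    return []                    # Text-to-Video: no frames, prompt only


def task_name(req: GenerationRequest) -> str:
    """Label the task implied by the request's conditioning."""
    if req.video:
        return "video-continuation"
    return "image-to-video" if conditioning_frames(req) else "text-to-video"
```

Under this view, supporting another task means changing what goes into the conditioning list, not training a separate model.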

2. Long Video Generation Technology:

Through pretraining on video continuation tasks and adopting specialized training strategies, the model can generate coherent, high-quality videos lasting several minutes without loss of consistency or visual fidelity.
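Because the model is pretrained on continuation, a minutes-long video can in principle be built chunk by chunk, feeding the tail of what has been generated so far back in as conditioning. The loop below is a minimal sketch of that idea. `generate_chunk`, the chunk length, and the context length are hypothetical placeholders, and the toy generator simply emits numbered frames so the control flow can run.

```python
from typing import Callable, List

Frame = int  # toy stand-in for a frame: just its index


def generate_long_video(
    generate_chunk: Callable[[List[Frame], int], List[Frame]],
    total_frames: int,
    chunk_len: int,
    context_len: int,
) -> List[Frame]:
    """Build a long video by repeated continuation (hypothetical sketch).

    `generate_chunk(context, n)` must return `n` new frames conditioned on
    `context`, which holds the last `context_len` frames produced so far.
    """
    if chunk_len <= 0:
        raise ValueError("chunk_len must be positive")
    video: List[Frame] = []
    while len(video) < total_frames:
        # Empty context on the first step (no prior frames exist yet).
        context = video[-context_len:] if video and context_len > 0 else []
        n = min(chunk_len, total_frames - len(video))
        chunk = generate_chunk(context, n)
        if len(chunk) != n:
            raise ValueError(f"expected {n} frames, got {len(chunk)}")
        video.extend(chunk)
    return video


def toy_chunk(context: List[Frame], n: int) -> List[Frame]:
    """Toy generator that continues numbering from the last context frame."""
    start = context[-1] + 1 if context else 0
    return list(range(start, start + n))
```

For example, `generate_long_video(toy_chunk, total_frames=10, chunk_len=4, context_len=2)` returns the frames 0 through 9 in three calls. In a real system the quality of each hand-off, rather than the loop itself, is what determines whether color drift or degradation accumulates.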

3. Efficient Inference Strategy:

The model follows a coarse-to-fine generation process, first producing a rough structure and then refining the details, while Block Sparse Attention improves inference efficiency and shortens generation time for high-resolution videos.
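Block sparse attention saves compute by letting each block of queries attend only to a few selected blocks of keys instead of the whole sequence. The sketch below shows one generic variant in plain Python, where key blocks are ranked by comparing block-mean vectors and only the best `top_k` are kept. The selection rule, `block_size`, and `top_k` are assumptions for illustration, not LongCat-Video's actual kernel, and the coarse-to-fine stage is not modeled.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def mean(vectors: List[Vector]) -> Vector:
    """Element-wise mean of a non-empty list of vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def block_sparse_attention(
    q: List[Vector], k: List[Vector], v: List[Vector], block_size: int, top_k: int
) -> List[Vector]:
    """Generic block-sparse attention sketch: single head, no batching.

    Keys are grouped into blocks of `block_size`. For each block of queries,
    key blocks are ranked by the dot product of the block-mean query and the
    block-mean key, and only the `top_k` highest-ranked key blocks are
    attended to; all other keys are skipped.
    """
    scale = 1.0 / math.sqrt(len(q[0]))
    k_blocks = [list(range(s, min(s + block_size, len(k))))
                for s in range(0, len(k), block_size)]
    k_means = [mean([k[j] for j in blk]) for blk in k_blocks]
    out: List[Vector] = []
    for qs in range(0, len(q), block_size):
        q_idx = range(qs, min(qs + block_size, len(q)))
        q_mean = mean([q[i] for i in q_idx])
        # Cheap block-level routing: score each key block once per query block.
        ranked = sorted(range(len(k_blocks)),
                        key=lambda b: dot(q_mean, k_means[b]), reverse=True)
        keep = [j for b in ranked[:top_k] for j in k_blocks[b]]
        # Scaled dot-product attention restricted to the kept keys.
        for i in q_idx:
            w = softmax([dot(q[i], k[j]) * scale for j in keep])
            out.append([sum(wj * v[j][c] for wj, j in zip(w, keep))
                        for c in range(len(v[0]))])
    return out
```

With `top_k` equal to the number of key blocks this reduces to ordinary dense attention; smaller values cut the per-query attention work to roughly `top_k / num_blocks` of the dense cost, at the risk of skipping relevant keys.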

4. Multi-Reward Reinforcement Learning Optimization:

By applying the multi-reward Group Relative Policy Optimization (GRPO) method, the model is optimized across multiple dimensions, including text-video alignment, visual fidelity, and motion quality, enhancing the overall output quality of generated videos.
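GRPO samples a group of outputs for the same prompt and uses each sample's reward relative to the group, normalized by the group's mean and standard deviation, as its advantage, so no separate value network is needed. How LongCat-Video combines its reward signals is not described here; the sketch below assumes each reward is normalized within the group and then mixed with fixed weights. The reward names and weights are hypothetical.

```python
import statistics
from typing import Dict, List


def group_normalize(values: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style group-relative advantage: (r - mean) / (std + eps)."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    return [(x - mu) / (sigma + eps) for x in values]


def multi_reward_advantages(
    rewards: Dict[str, List[float]],
    weights: Dict[str, float],
) -> List[float]:
    """Combine several reward signals for one group of samples.

    rewards[name][i] is reward `name` for the i-th video sampled from the
    same prompt. Each reward is normalized within the group first, so no
    single reward scale dominates, then mixed using `weights` (assumed).
    """
    if not rewards:
        return []
    group_size = len(next(iter(rewards.values())))
    advantages = [0.0] * group_size
    for name, values in rewards.items():
        if len(values) != group_size:
            raise ValueError(f"reward {name!r} has the wrong group size")
        for i, a in enumerate(group_normalize(values)):
            advantages[i] += weights.get(name, 0.0) * a
    return advantages


if __name__ == "__main__":
    # Hypothetical scores for four videos sampled from one prompt.
    rewards = {
        "text_alignment": [0.8, 0.6, 0.9, 0.5],
        "visual_quality": [0.7, 0.7, 0.6, 0.8],
        "motion_quality": [0.4, 0.9, 0.5, 0.6],
    }
    weights = {"text_alignment": 1.0, "visual_quality": 0.5, "motion_quality": 1.0}
    print([round(a, 3) for a in multi_reward_advantages(rewards, weights)])
```

Positive entries mark videos that beat their group on the weighted criteria, and the policy update then makes those samples more likely and the negative ones less likely. Because each normalized reward sums to zero over the group, the combined advantages do too.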

Project Links

- Official Website: https://meituan-longcat.github.io/LongCat-Video/
- GitHub Repository: https://github.com/meituan-longcat/LongCat-Video
- Hugging Face Model Hub: https://huggingface.co/meituan-longcat/LongCat-Video

Application Scenarios of LongCat-Video

- Content Creation: Enables creators to quickly generate video materials such as advertisements, short videos, and animations, greatly improving production efficiency.
- Video Continuation: Generates follow-up content for existing video clips, useful for storytelling, video editing, and creative extensions.
- Education and Training: Produces instructional or demonstration videos to enhance teaching and training experiences.
- Entertainment and Gaming: Generates dynamic scenes or character animations that enrich visual effects and immersion in games.
- Intelligent Customer Service and Virtual Assistants: Creates video-based responses for more intuitive and engaging user interactions.
- Creative Design: Assists designers with concept visualization and rapid video prototyping so they can express creative ideas efficiently.
