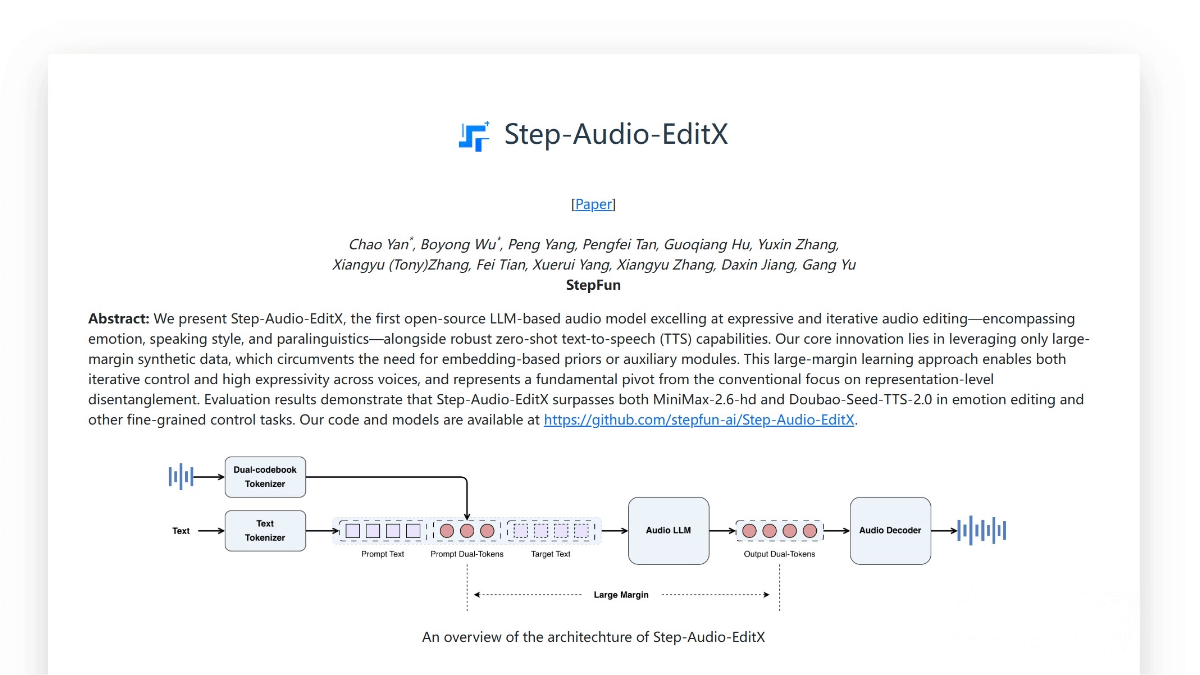

Step-Audio-EditX – An open-source audio-editing large model developed by StepStar

What is Step-Audio-EditX?

Step-Audio-EditX is the world’s first LLM-grade open-source audio editing model, released by StepStar. It focuses on three-axis fine-grained iterative control—emotion, speaking style, and paralinguistics. It can arbitrarily increase or decrease emotional intensity (anger, joy, sadness, etc.), stack styles like coquettish tone, whispering, or elderly speech, and insert 10 types of natural paralinguistic tokens such as breathing, laughter, sighs, and more, just like adding subtitles.

It supports zero-shot TTS, cloning a target voice without needing reference audio. By adding tags like “[Sichuan dialect]” or “[Cantonese]” before the text, the model can instantly switch dialects.

The model is trained fully on large-margin synthetic data with SFT + PPO, and achieves attribute disentanglement and iterative control without requiring any additional encoders or adapters during post-training.

Key Features

Emotional Editing:

Dozens of emotional labels—anger, happiness, sadness, excitement, fear, surprise, disgust, etc.—with support for iterative strengthening or weakening.

Style Editing:

More than ten speaking styles such as coquettish, whispering, elderly, child-like, serious, generous, exaggerated, etc. Styles can be stacked and finely tuned.

Paralinguistic Insertion:

Precisely insert 10 types of natural tokens including breathing, laughter, sighs, surprise (oh/ah), confirmation (en), discontent (hnn), questioning (ei), and thinking sounds (uhm), etc.

Zero-shot TTS:

Clone a voice without needing any reference audio. Add tags like “[Sichuan dialect]” or “[Cantonese]” before the text to instantly switch dialects.

Iterative Control:

Repeatedly edit the same audio with disentangled attributes that do not interfere with each other, enabling step-by-step enhancement.

Lightweight & Open Source:

Includes an 8-bit quantized version runnable on a single 8GB GPU. Best audio quality achieved on 4×A800/H800.

Contains inference & training code, a Gradio demo, and a HuggingFace Space.

Technical Principles of Step-Audio-EditX

Dual-Codebook Audio Tokenization:

Parallel 16.7 Hz / 1024-item “language codebook” and 25 Hz / 4096-item “semantic codebook” interleaved at a 2:3 ratio.

This converts any speech into discrete tokens while preserving emotion and prosody, giving the LLM a “speech vocabulary” for direct manipulation.

3B Audio LLM:

Bootstrapped from a text-pretrained 3B model. Text tokens and dual-codebook audio tokens are concatenated in dialogue format, and the model outputs only audio tokens.

Training uses a 1:1 text-to-audio ratio to leverage existing text LLM ecosystems for post-training.

Large-Margin Synthetic Data:

No additional encoders or adapters are used. SFT + PPO is performed on paired data with same text but different attributes (emotion/style/paralinguistics).

Large attribute margins force the model to learn attribute disentanglement, enabling iterative intensity control and multi-attribute stacking.

Flow Matching + BigVGANv2 Decoding:

LLM-output tokens are converted into Mel spectrograms via a DiT-flow-matching module, then BigVGANv2 reconstructs waveforms.

Trained on 200k hours of high-quality data for accurate pronunciation and voice similarity.

Unified Framework:

The same “tokenize → LLM → decode” pipeline supports zero-shot TTS, emotional/style/paralinguistic editing, speed adjustment, and noise reduction—without task-specific modules, reducing complexity and inference cost.

Project Links

-

Official Website: https://stepaudiollm.github.io/step-audio-editx/

-

GitHub Repository: https://github.com/stepfun-ai/Step-Audio-EditX

-

HuggingFace Model Hub: https://huggingface.co/stepfun-ai/Step-Audio-EditX

-

arXiv Paper: https://arxiv.org/pdf/2511.03601

Application Scenarios

Audio Content Enhancement:

Audiobooks, podcasts, and news narration can instantly add styles like “happy/sad/whispering” without re-recording. Multiple versions can be generated quickly to improve immersion.

Video & Advertising Voiceovers:

Short videos, animations, and ads can clone character voices in zero-shot, then iteratively add styles such as coquettish, exaggerated, or serious—achieving low-cost, multi-character, multi-emotion voiceovers.

Games / Virtual Idols:

NPCs, virtual streamers, and VTubers can clone a voice using a single reference line, then insert paralinguistic sounds like laughter, breaths, and sighs to create lively, interactive character voices.

Customer Service & Voice Assistants:

Customer service bots can upgrade flat TTS responses into emotional ones—warm, enthusiastic, comforting—improving user experience.

Supports dialect tags for localized service.

Education / Language Learning:

Online courses and language apps can use elderly/child/whisper styles for age-appropriate narration or switch Mandarin to Cantonese/Sichuan instantly for imitation practice.

Meetings & Accessibility:

For noisy or pause-heavy meeting recordings, the model can perform noise reduction and silence trimming, then adjust speaking speed or add emotion to generate clear, easy-to-understand meeting summaries.

Related Posts