Depth Anything 3 – A Visual Spatial Reconstruction Model Developed by ByteDance

What is Depth Anything 3?

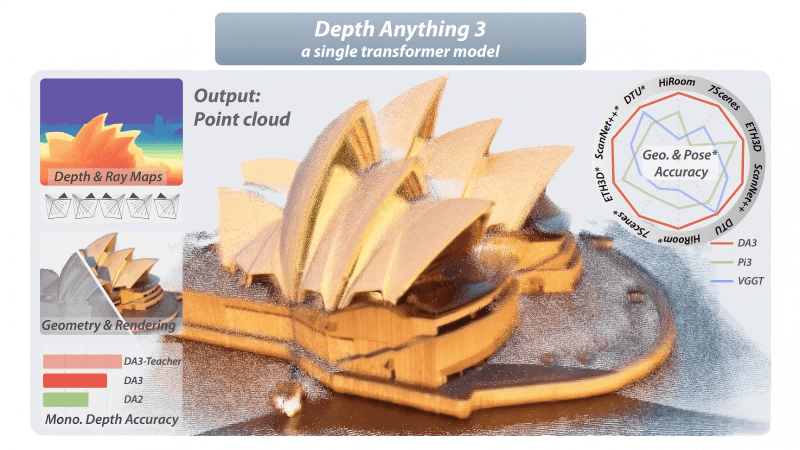

Depth Anything 3 (DA3) is a visual spatial reconstruction model developed by ByteDance’s Seed team. It reconstructs 3D geometric structure from visual inputs of arbitrary viewpoints using a unified Transformer architecture. The model introduces a “depth-ray” representation that eliminates the need for complex multi-task training, greatly simplifying model design. Depth Anything 3 surpasses previous mainstream models in both camera pose accuracy and geometric reconstruction performance, while maintaining high inference efficiency. It is suitable for applications in autonomous driving, robot navigation, virtual reality, and more, offering a new and efficient solution for visual spatial reconstruction.

Key Features of Depth Anything 3

Multi-view 3D Spatial Reconstruction

DA3 can reconstruct 3D spatial structure from any number of visual inputs, including single images, multi-view photos, or video streams.

Camera Pose Estimation

The model can accurately estimate camera pose—including position and orientation—even without known camera parameters.

Monocular Depth Estimation

DA3 delivers strong performance in monocular depth estimation, predicting pixel-level depth from a single image to support 3D scene understanding.

Novel View Synthesis

By integrating with 3D Gaussian rendering, the model can generate high-quality images from novel viewpoints, making it suitable for VR/AR view-synthesis tasks.

Efficient Inference and Deployment

Its streamlined architecture enables fast inference with low resource consumption, supporting deployment on mobile and embedded devices.

Technical Principles of Depth Anything 3

Unified Transformer Architecture

DA3 uses a single Transformer model (e.g., DINOv2) as its core architecture. Without complex custom modules, the Transformer’s self-attention mechanism flexibly handles any number of input views and dynamically exchanges cross-view information for efficient global spatial modeling.

Depth-Ray Representation

The model introduces a “depth-ray” representation that predicts a depth map and a ray map to describe the 3D scene. The depth map gives pixel-to-camera distance, while the ray map describes the projection direction of each pixel in 3D space. This representation naturally decouples scene geometry from camera motion, simplifying outputs and improving accuracy and efficiency.

Input-Adaptive Cross-View Attention

DA3 incorporates an input-adaptive cross-view self-attention mechanism that dynamically reorders input view tokens to enable efficient cross-view information exchange. This allows the model to handle a wide range of input configurations, from single-view to multi-view setups.

Dual DPT Heads

To jointly predict depth and ray maps, DA3 employs a dual DPT-head design. The two heads share feature-processing modules and optimize depth and ray outputs independently in the final stage, enhancing interaction and consistency between the two tasks.

Teacher–Student Training Paradigm

DA3 uses a teacher–student training paradigm, where a teacher model trained on synthetic data generates high-quality pseudo-labels to provide better supervision signals for the student model.

Single-Step High-Accuracy Output

DA3 generates accurate depth and ray outputs in one forward pass, without the iterative optimization used in traditional methods. This greatly increases inference speed, simplifies training and deployment, and maintains high precision in 3D reconstruction.

Project Resources

-

Project Website: https://depth-anything-3.github.io/

-

GitHub Repository: https://github.com/ByteDance-Seed/depth-anything-3

-

arXiv Paper: https://arxiv.org/pdf/2511.10647

-

Online Demo: https://huggingface.co/spaces/depth-anything/depth-anything-3

Application Scenarios of Depth Anything 3

Autonomous Driving

DA3 reconstructs 3D environments from multi-view camera images, helping autonomous vehicles more accurately perceive object positions and distances, improving decision reliability and safety.

Robotic Navigation

By reconstructing real-time 3D structure, DA3 provides robots with precise terrain and obstacle information for efficient navigation and path planning in complex environments.

Virtual Reality (VR) & Augmented Reality (AR)

DA3 can rapidly convert real-world scenes into high-quality 3D models for VR scene reconstruction or AR virtual object integration, enhancing immersion.

Architectural Mapping & Design

DA3 reconstructs detailed 3D point clouds of architectural scenes from multi-angle images, supporting architectural surveying, interior design, and virtual walkthroughs.

Cultural Heritage Preservation

DA3 enables high-fidelity 3D reconstruction of historical buildings and artifacts, supporting digital preservation, restoration research, and virtual exhibition.

Related Posts