Today, I want to talk about AI agents, arguably one of the hottest topics in tech right now. Companies are racing to launch agent-related projects, but here’s the catch: most people have only a superficial understanding of agents and tend to overhype their capabilities. To cut through the noise, we need to dig into the fundamental question of why agents exist. Where do they come from? What makes them necessary? And crucially, when don’t we need them?

Let’s start with a basic truth: Not every problem requires an agent. For simple tasks, a single input-output cycle with a large language model (LLM) is often sufficient. You give the model a prompt, it generates a response, and you’re done. Easy.

The Limits of Prompts

But not all tasks are simple. Take writing a report, for example. On the surface, it seems straightforward—until you break it down:

- Define the report’s topic and align it with market trends.

- Create an outline with specific sections.

- Write content for each section, adhering to style guidelines.

- Edit for clarity, formatting, and coherence.

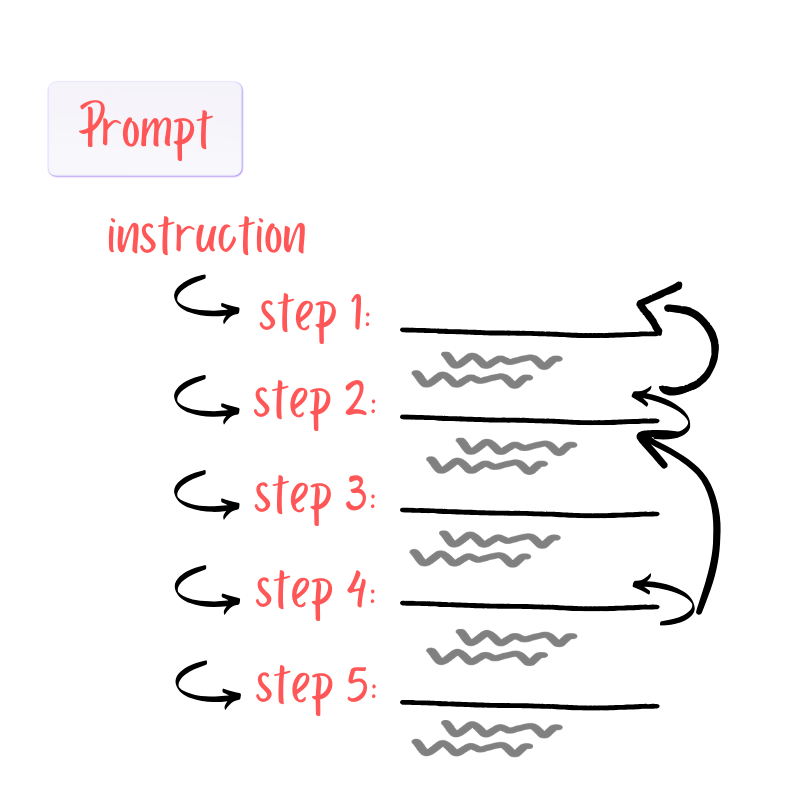

To handle this with a single prompt, you’d need a convoluted, multi-step instruction like:

“First, generate a topic based on X and Y. Second, outline three sections addressing A, B, and C. Third, write each section with bullet points and examples. Fourth, restructure the draft using APA format…”

Here’s the problem: LLMs tend to struggle with overly complex prompts. The longer and more detailed the instruction, the more likely the model is to skip or misapply a step. For instance, if Step 2 (the outline) misses your requirements, you have to troubleshoot manually: re-examine Step 1, tweak the prompt, and rerun the whole thing. This trial-and-error loop quickly becomes unsustainable.

The Agent Solution

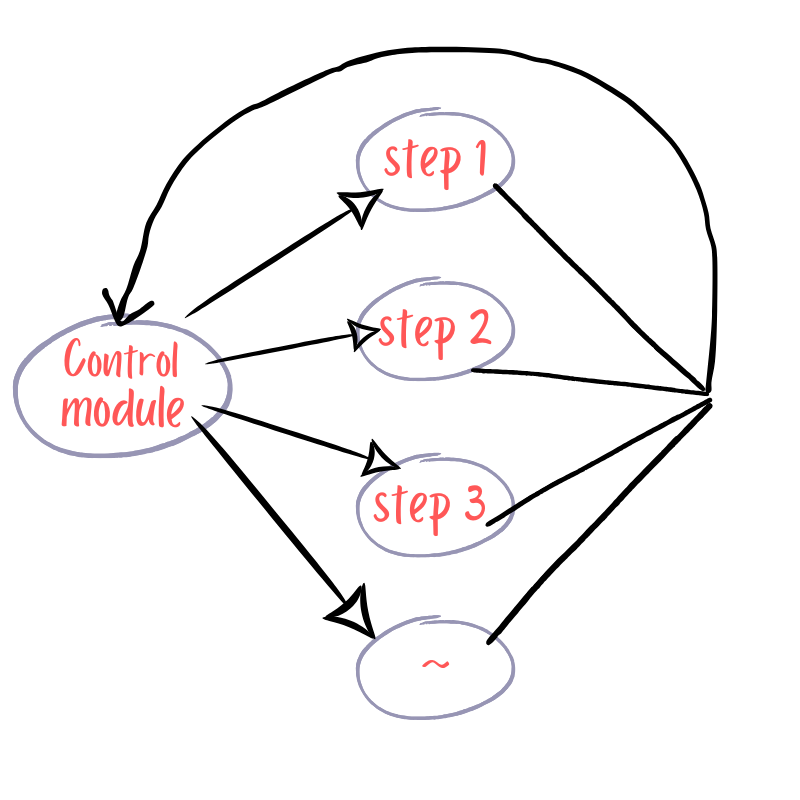

This is where agents shine. Instead of dumping a long, multi-step prompt into an LLM and praying, an agent-based workflow breaks the task into smaller, specialized subtasks. Each agent handles one specific job and passes its output to the next, like runners in a relay team.
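To make the relay idea concrete, here is a minimal sketch that assumes an "agent" is nothing more than a function from the previous step's output to its own output. The names `fake_llm`, `make_agent`, and `run_relay` are hypothetical illustrations, not part of any agent framework; `fake_llm` stands in for a real model call so the example runs offline.

```python
from typing import Callable, List

# In this sketch an "agent" is simply a function that takes the previous
# step's output and returns its own output.
Agent = Callable[[str], str]


def fake_llm(instruction: str, context: str) -> str:
    """Hypothetical stand-in for a real LLM call: it just tags the context."""
    return f"[{instruction}] {context}"


def make_agent(instruction: str) -> Agent:
    """Wrap one narrow instruction into a single-purpose agent."""
    def agent(context: str) -> str:
        return fake_llm(instruction, context)
    return agent


def run_relay(agents: List[Agent], task: str) -> str:
    """Hand each agent's output to the next one, like passing a baton."""
    output = task
    for agent in agents:
        output = agent(output)
    return output


# Stage names borrowed from the report example.
pipeline = [make_agent(name) for name in ("topic", "outline", "content", "edit")]
result = run_relay(pipeline, "Q3 market report")
print(result)  # [edit] [content] [outline] [topic] Q3 market report
```

Swapping `fake_llm` for a real model call would keep the same shape: each call sees one narrow instruction plus the previous output, instead of the entire multi-step prompt at once.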

Imagine a workflow:

- Topic Agent: Generates and refines the report’s core theme.

- Outline Agent: Designs a structured blueprint, iterating until approved.

- Content Agent: Writes each section based on the outline.

- Editing Agent: Polishes formatting and style.

A Controller Agent oversees this chain, checking quality at each step. If the outline fails that check, the controller sends it back to the Outline Agent for another pass, with no human intervention needed. This mirrors how a product manager delegates tasks to specialists: assign the right work to the right “expert.”
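One way to picture the Controller Agent is as a loop that runs each step, applies an automated check, and reruns the step until the check passes or a retry budget runs out. This is a sketch under assumptions: `Step`, `controller`, `outline_agent`, and `has_three_sections` are hypothetical names, the agents are plain Python functions rather than model calls, and the "three sections" rule echoes the outline requirement from the earlier prompt example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    name: str
    run: Callable[[str, int], str]   # (input, attempt number) -> output
    check: Callable[[str], bool]     # automated quality gate for this step


def controller(steps: List[Step], task: str, max_retries: int = 3) -> str:
    """Run steps in order; when a step's output fails its check, rerun that step."""
    data = task
    for step in steps:
        for attempt in range(1, max_retries + 1):
            candidate = step.run(data, attempt)
            if step.check(candidate):
                data = candidate  # approved: hand it to the next step
                break
        else:
            # No attempt passed the check, so stop rather than pass bad output on.
            raise RuntimeError(f"{step.name} failed after {max_retries} attempts")
    return data


def outline_agent(topic: str, attempt: int) -> str:
    # Hypothetical agent: its first draft has two sections, its retry has three.
    count = 2 if attempt == 1 else 3
    return "\n".join(f"{i}. Section on {topic}" for i in range(1, count + 1))


def has_three_sections(outline: str) -> bool:
    return len(outline.splitlines()) == 3


steps = [
    Step("topic", lambda task, attempt: task.strip(), lambda topic: topic != ""),
    Step("outline", outline_agent, has_three_sections),
]
print(controller(steps, "  AI agents in retail "))
```

Here the first outline fails the check, the controller loops back to `outline_agent`, and the second attempt (three sections) is accepted. Raising an error once retries are exhausted is one design choice among several; a real system might instead escalate to a human.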

Key Design Principles

Building effective agents requires:

- Task Decomposition: Split complex goals into subtasks that align with each agent’s capabilities.

- Memory Management: Agents need context (e.g., past steps, user preferences), but access to that context should be strictly controlled.

- Feedback Loops: Automated validation at each stage to catch errors early.
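The memory-management principle can be sketched as a shared context store where each agent reads only the keys it has been granted. The `SharedMemory` class and the key and agent names below are hypothetical illustrations, not any framework's API, and the sketch assumes that only the controller writes to memory.

```python
from typing import Dict, Iterable, Set


class SharedMemory:
    """Context store shared by agents; each agent may read only granted keys."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}
        self._grants: Dict[str, Set[str]] = {}

    def grant(self, agent: str, keys: Iterable[str]) -> None:
        """Allow `agent` to read the given keys."""
        self._grants.setdefault(agent, set()).update(keys)

    def write(self, key: str, value: str) -> None:
        """Record context (in this sketch, only the controller writes)."""
        self._data[key] = value

    def view(self, agent: str) -> Dict[str, str]:
        """Return just the slice of context this agent is allowed to see."""
        allowed = self._grants.get(agent, set())
        return {k: v for k, v in self._data.items() if k in allowed}


memory = SharedMemory()
memory.write("user_preferences", "APA style, formal tone")
memory.write("outline", "1. Intro 2. Trends 3. Outlook")
memory.write("research_notes", "raw notes from the topic step")
memory.grant("content_agent", ["outline", "user_preferences"])
memory.grant("editing_agent", ["user_preferences"])
print(memory.view("editing_agent"))  # {'user_preferences': 'APA style, formal tone'}
```

Scoping each agent's view this way also keeps prompts short, which fits the broader goal of not overwhelming the model with context it does not need.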

The core philosophy? Respect the LLM’s limits. Agents work because they avoid overwhelming the model. By assigning bite-sized tasks, we stay within the model’s “skill ceiling” and improve the odds that each step succeeds.

When to Use Agents

Agents aren’t magic. For tasks like answering FAQs or summarizing text, a well-crafted prompt is usually faster and cheaper. Save agents for workflows where:

- The task has sequential dependencies (e.g., Step 2 can’t start until Step 1 is validated).

- Iterative refinement is critical (e.g., drafting → editing → formatting).

- Specialization matters (e.g., separate agents for research vs. creative writing).

The Bottom Line

Agents aren’t just a buzzword. They’re a pragmatic response to LLM limitations. By orchestrating specialized subtasks, they turn brittle, monolithic prompts into robust, self-correcting systems. The future of AI isn’t about building bigger models; it’s about building smarter workflows.
