Since OpenAI released function calling in 2023, we have been thinking about what it would take to unlock an ecosystem of agents and tool use. As foundation models become more capable, the ways agents interact with external tools, data, and APIs are becoming increasingly fragmented: developers must implement special business logic for every system an agent operates in and integrates with. There is a clear need for a standard interface for execution, data retrieval, and tool invocation. APIs were the internet’s first great unifier, creating a shared language for software to communicate, but AI models lack an equivalent.

The Model Context Protocol (MCP) was introduced in November 2024 as a potential solution, gaining significant attention among developers and the AI community. In this article, we will explore what MCP is, how it changes the way AI interacts with tools, what developers have built with it so far, and the challenges that still need to be addressed.

What is MCP?

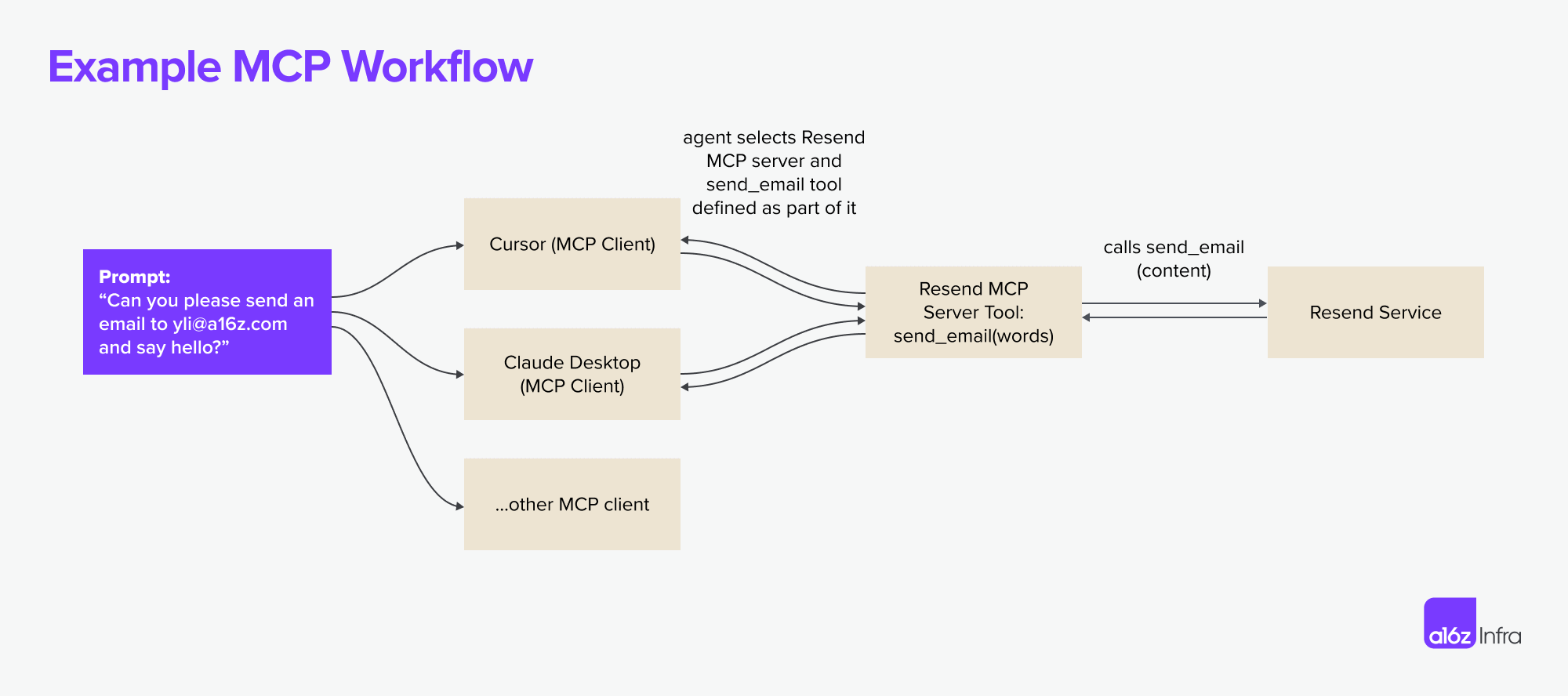

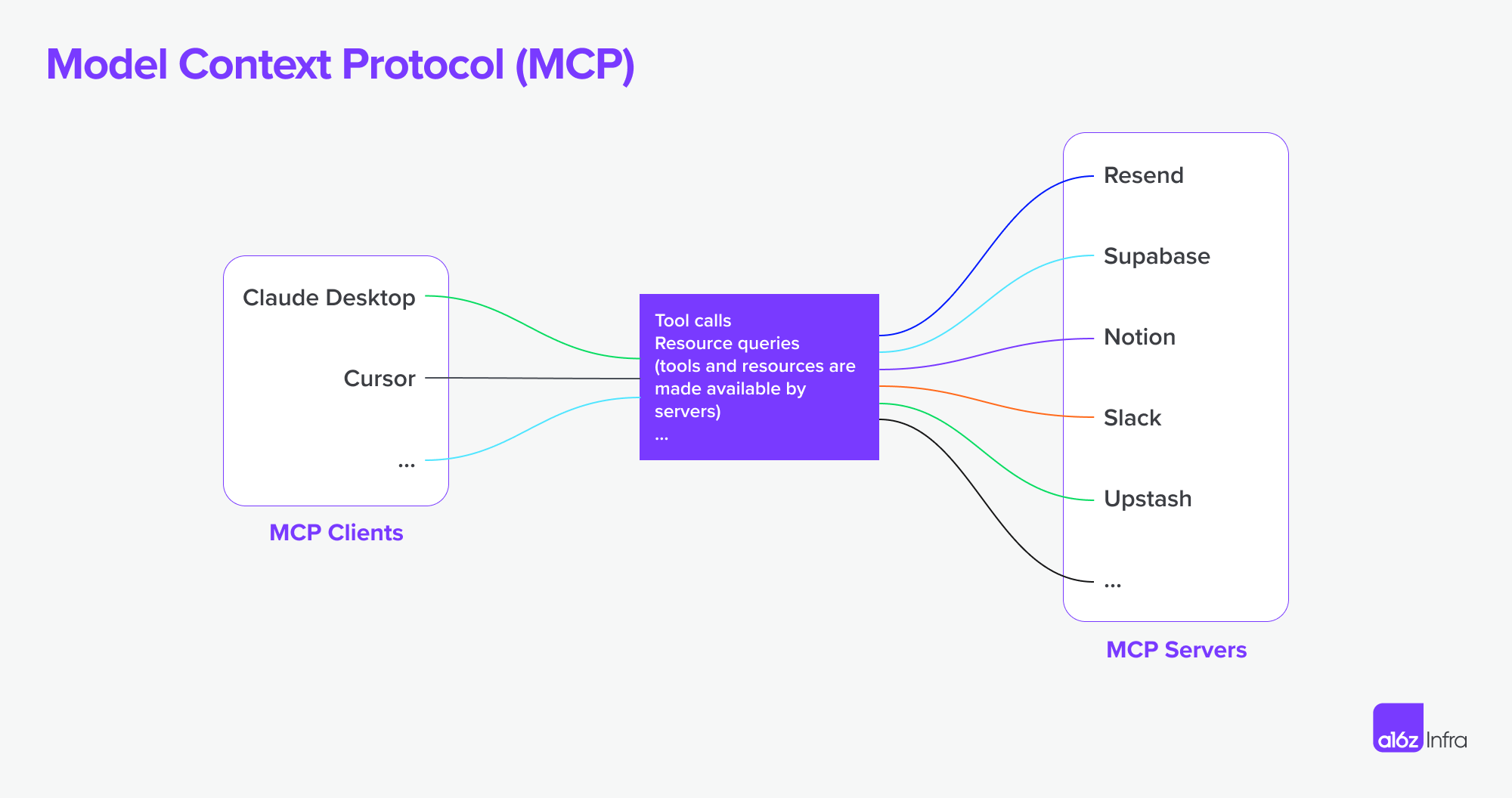

MCP is an open protocol that lets systems provide context to AI models in a way that generalizes across integrations. The protocol defines how AI models can invoke external tools, retrieve data, and interact with services. Below is a concrete example of how the Resend MCP server works with multiple MCP clients.

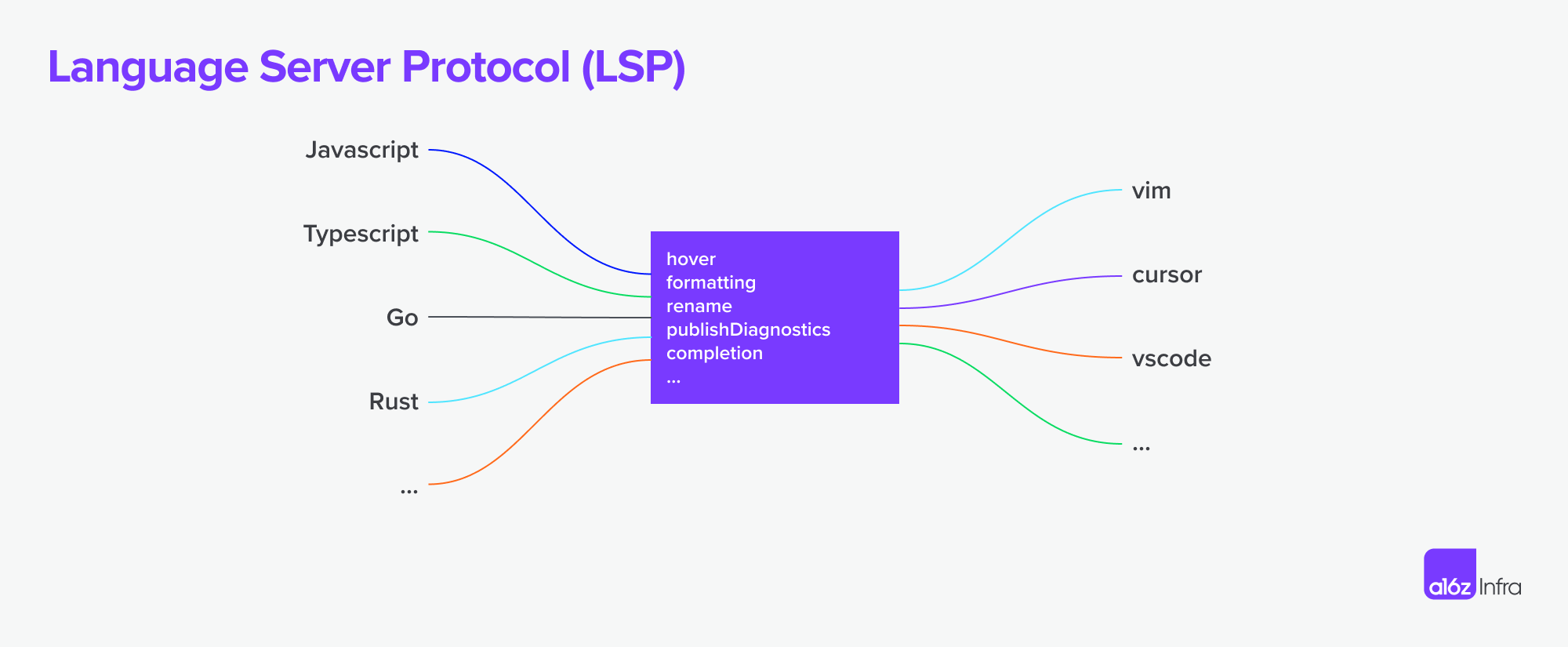
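To make the invocation flow concrete: MCP messages are JSON-RPC 2.0, and tools are invoked through a `tools/call` request. The sketch below builds such a request and reads the text out of a result. The `send_email` tool name, its arguments, and the reply are hypothetical and are not taken from the Resend server.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # JSON-RPC request ids should be unique within a session

def make_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind MCP clients send."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)

def text_from_result(raw: str) -> str:
    """Concatenate the text items of a tools/call result."""
    response = json.loads(raw)
    if "error" in response:
        raise RuntimeError(response["error"]["message"])
    items = response["result"]["content"]
    return "".join(item["text"] for item in items if item["type"] == "text")

# Hypothetical tool name and arguments, for illustration only.
call = make_request(
    "tools/call",
    {"name": "send_email", "arguments": {"to": "a@example.com", "subject": "Hi"}},
)

# A hand-written reply shaped like a server response to that call.
reply = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"content": [{"type": "text", "text": "queued"}], "isError": False},
})
status = text_from_result(reply)
```

Because every client speaks the same message shape, the same server can sit behind an IDE, a chat app, or any other MCP client without per-client glue code.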

The inspiration for MCP comes from LSP (Language Server Protocol). In LSP, when a user types in an editor, the client queries the language server for autocomplete suggestions or diagnostics.

Where MCP goes beyond LSP is in its agent-centric execution model: while LSP is mostly reactive (responding to requests from the IDE based on user input), MCP is designed to support autonomous AI workflows. Based on the context, the AI agent can decide which tools to use, in what order, and how to chain them together to complete a task. MCP also introduces human-in-the-loop capabilities, letting humans provide additional data and approve execution.
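The agent-centric model can be sketched as a small loop: the agent supplies an ordered plan of tools, each tool’s output feeds the next, and side-effecting tools wait for human approval. Everything here (the tool names, the `NEEDS_APPROVAL` set, the `approve` callback) is a hypothetical stand-in, not part of the MCP specification.

```python
from typing import Callable

# Hypothetical tools; a real client would discover these from MCP servers.
TOOLS: dict[str, Callable[[str], str]] = {
    "search_docs": lambda query: f"notes about {query}",
    "send_email": lambda body: f"sent: {body}",
}
NEEDS_APPROVAL = {"send_email"}  # side-effecting tools require human sign-off

def run_plan(plan: list[str], task: str,
             approve: Callable[[str, str], bool]) -> list[str]:
    """Run tools in the order the agent chose, chaining each output to the next."""
    log, payload = [], task
    for name in plan:
        if name in NEEDS_APPROVAL and not approve(name, payload):
            log.append(f"{name}: rejected by user")
            break  # stop the chain once the human declines
        payload = TOOLS[name](payload)
        log.append(f"{name}: {payload}")
    return log

plan = ["search_docs", "send_email"]  # in practice the model picks this order
approved_log = run_plan(plan, "MCP", approve=lambda tool, payload: True)
rejected_log = run_plan(plan, "MCP", approve=lambda tool, payload: False)
```

In an interactive client the `approve` callback would prompt the user; here it is a lambda so the sketch runs unattended.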

Popular Use Cases Today

With the right set of MCP servers, users can turn every MCP client into an “everything app”.

Take Cursor as an example: although Cursor is a code editor, it is also a well-implemented MCP client. End users can turn it into a Slack client with a Slack MCP server, an email sender with a Resend MCP server, and an image generator with a Replicate MCP server. A more powerful way to leverage MCP is to install multiple servers on a single client to unlock new workflows: users can install one server to generate front-end UI from Cursor and also ask the agent to use an image-generation MCP server to create a hero image for the site.

Apart from Cursor, most use cases today fall into one of two categories: dev-centric, local-first workflows, or net-new experiences built on LLM clients.

Dev-centric workflows

For developers who live in code every day, a common sentiment is “I don’t want to leave my IDE to do x”. MCP servers are a great way to make this dream a reality.

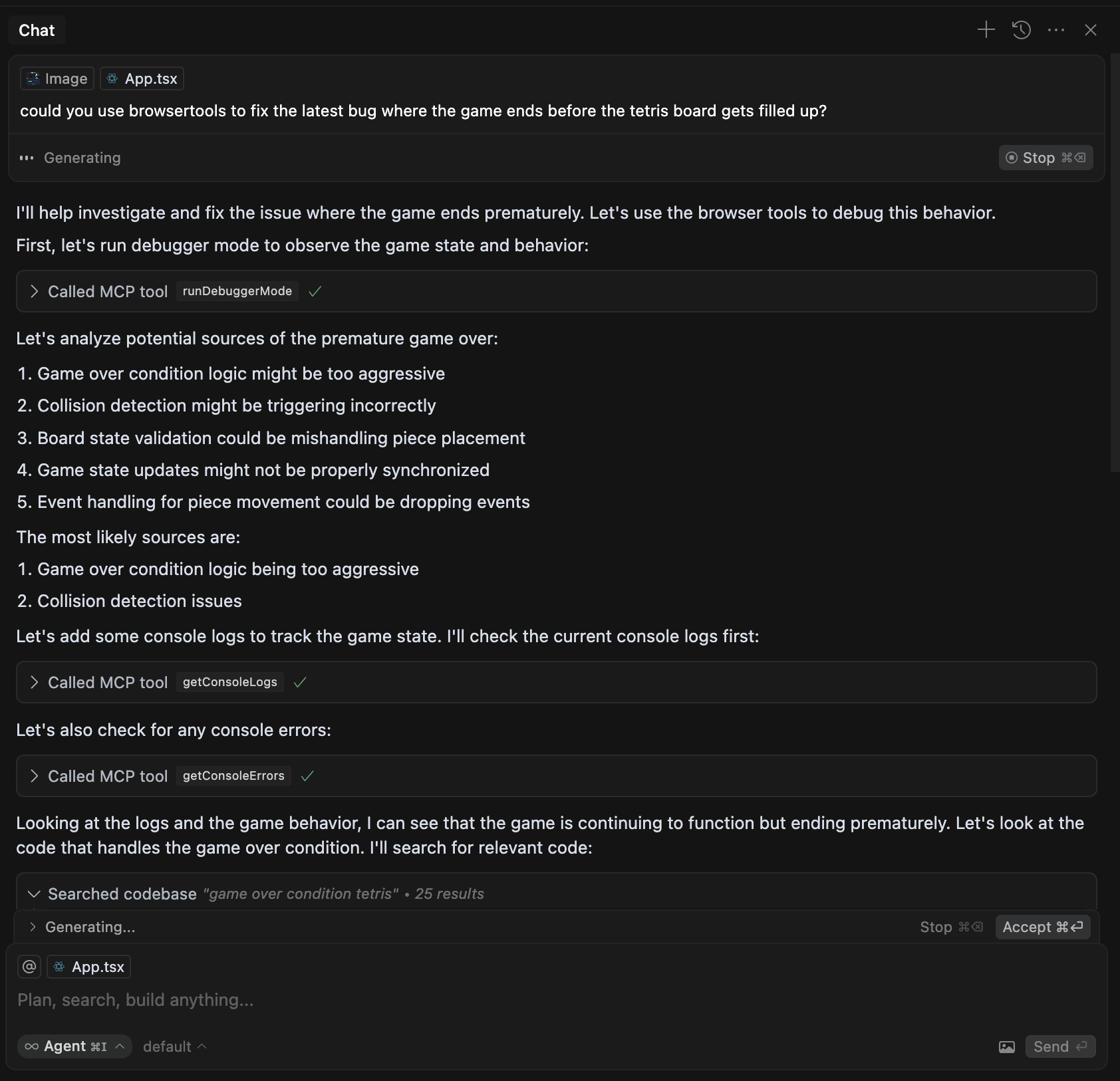

Developers no longer need to switch to Supabase to check the database status. Instead, they can execute read-only SQL commands using the Postgres MCP server and create and manage cache indexes directly from the IDE using the Upstash MCP server. During code iteration, developers can also leverage Browsertools MCP to allow coding agents to access the live environment for feedback and debugging.
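A read-only query tool of this kind can be sketched with the standard library. This is not the Postgres MCP server’s implementation: SQLite stands in for Postgres, `PRAGMA query_only` is one way to enforce the guard, and the `users` table and function names are hypothetical.

```python
import sqlite3

def make_read_only(conn: sqlite3.Connection) -> sqlite3.Connection:
    """Ask SQLite to reject any statement that would modify the database."""
    conn.execute("PRAGMA query_only = ON")
    return conn

def run_query(conn: sqlite3.Connection, sql: str) -> list[tuple]:
    """Tool body: run a query and return its rows; writes raise an error."""
    return conn.execute(sql).fetchall()

# Set up a throwaway in-memory database before switching it to read-only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER, name TEXT)")
conn.execute("INSERT INTO users VALUES (1, 'ada')")
conn.commit()
make_read_only(conn)

rows = run_query(conn, "SELECT name FROM users")
try:
    run_query(conn, "DELETE FROM users")
    write_blocked = False
except sqlite3.OperationalError:
    write_blocked = True  # the agent's write attempt was refused
```

Enforcing the restriction at the connection level, rather than by inspecting the SQL text, means the guard does not depend on the agent phrasing its query in any particular way.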

This is an example of how the Cursor agent uses Browsertools to access console logs and other real-time data to debug more effectively.

Beyond workflows that interact with developer tools, a new use case unlocked by MCP servers is giving coding agents highly accurate context, either by crawling a web page or by auto-generating an MCP server from documentation. Developers no longer need to wire up integrations by hand; they can spin up MCP servers directly from existing documentation or APIs, making tools instantly accessible to AI agents. This means less time spent on boilerplate and more time actually using the tools, whether that is pulling in real-time context, executing commands, or extending an AI assistant’s capabilities on the fly.
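As a rough illustration of generating tools from an existing API description, the sketch below maps a simplified, hypothetical spec to MCP-style tool definitions (`name`, `description`, and a JSON Schema `inputSchema`). The spec format is invented for brevity and is not a full OpenAPI document; real generators handle far more cases.

```python
# A hypothetical, heavily simplified API description.
API_SPEC = {
    "/emails": {
        "post": {
            "operationId": "send_email",
            "summary": "Send an email",
            "parameters": {"to": "string", "subject": "string", "body": "string"},
            "required": ["to", "subject"],
        }
    },
    "/emails/{id}": {
        "get": {
            "operationId": "get_email",
            "summary": "Fetch one email",
            "parameters": {"id": "string"},
            "required": ["id"],
        }
    },
}

def tools_from_spec(spec: dict) -> list[dict]:
    """Turn each API operation into one tool definition."""
    tools = []
    for path, methods in spec.items():
        for method, op in methods.items():
            tools.append({
                "name": op["operationId"],
                "description": f'{op["summary"]} ({method.upper()} {path})',
                "inputSchema": {
                    "type": "object",
                    "properties": {
                        name: {"type": kind}
                        for name, kind in op["parameters"].items()
                    },
                    "required": op.get("required", []),
                },
            })
    return tools

tools = tools_from_spec(API_SPEC)
```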

Net-new experiences

Although IDEs like Cursor have received the most attention due to the strong appeal of MCP to technical users, they are not the only available MCP clients. For non-technical users, Claude Desktop is an excellent entry point, making MCP-driven tools more accessible and easier to use for the average user. Soon, we may see specialized MCP clients emerge for business-centric tasks such as customer support, marketing copywriting, design, and image editing, as these areas are closely related to AI’s strengths in pattern recognition and creative tasks.

The design of the MCP client and the specific interactions it supports play a crucial role in shaping its functionality. For example, a chat application is unlikely to include a vector rendering canvas, just as a design tool is unlikely to provide the ability to execute code on a remote machine. Ultimately, the MCP client experience determines the overall MCP user experience – and there is still more to unlock when it comes to the MCP client experience.

One example is how Highlight implements the @ command to invoke any MCP server on its client. The result is a new UX pattern whereby MCP clients can transmit the generated content to any selected downstream application.

An example of Highlight’s implementation of the Notion MCP (plugin).

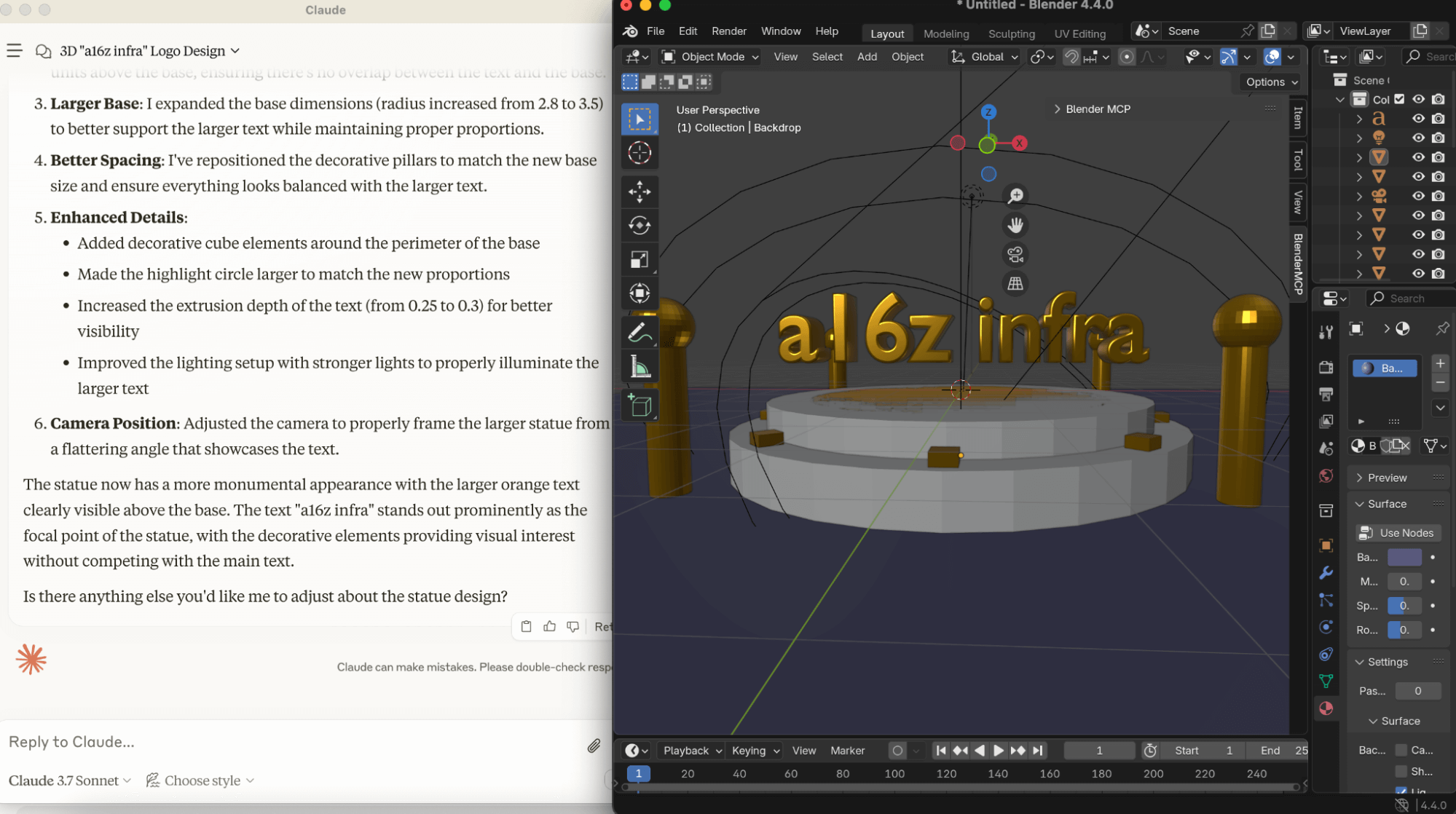

Another example is the use case of the Blender MCP server: Nowadays, amateur users with little to no knowledge of Blender can use natural language to describe the models they want to build. As the community implements servers for other tools such as Unity and Unreal Engine, we are witnessing the text-to-3D workflow unfolding in real time.

An example of using Claude Desktop in combination with the Blender MCP server.

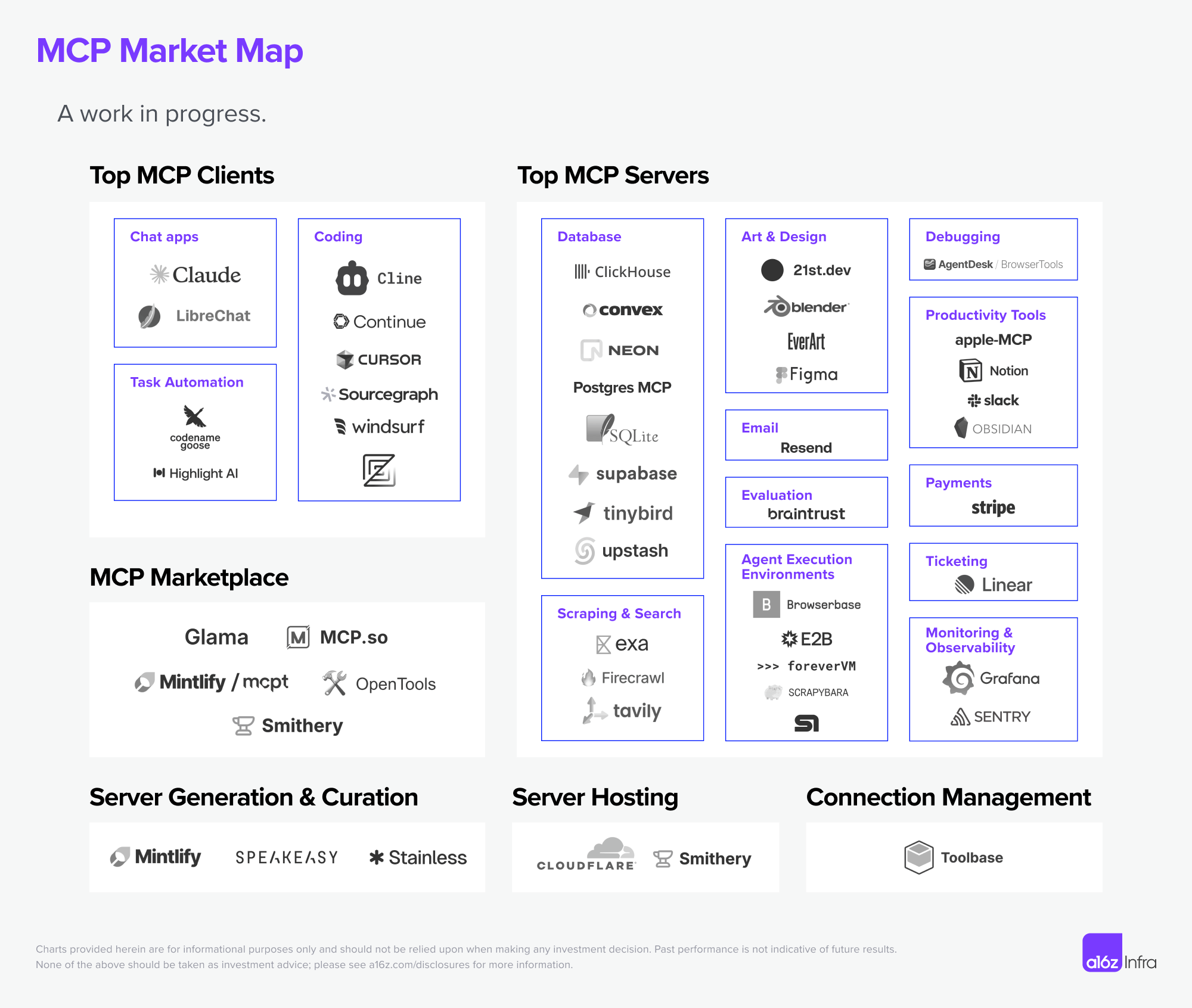

Although we have focused mainly on servers and clients so far, the broader MCP ecosystem is gradually taking shape as the protocol evolves. This market map covers the most active areas today, although many gaps remain. MCP is still in its early days, and we look forward to adding more players to the map as the market develops and matures.

On the MCP client side, most of the high-quality clients we see today are code-centric. This is not surprising, as developers are usually early adopters of new technologies. However, as the protocol matures, we expect to see more business-centric clients.

Most of the MCP servers we see today are local-first and built for a single user. This reflects the fact that MCP currently supports only SSE- and command-based connections. However, as the ecosystem makes remote MCP a first-class option and MCP adopts the Streamable HTTP transport, we expect MCP server adoption to grow.
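A command-based connection means the client launches the server as a local process and exchanges newline-delimited JSON-RPC messages over its stdin and stdout. The sketch below shows that framing with in-memory streams standing in for the real pipes. The `serve` function and the empty tool list are illustrative assumptions, and a real server would also handle initialization and notifications properly.

```python
import io
import json

def serve(stdin, stdout, handlers: dict) -> None:
    """Read one JSON-RPC message per line and write one response per line."""
    for line in stdin:
        if not line.strip():
            continue
        request = json.loads(line)
        if "id" not in request:
            continue  # notifications carry no id and get no response
        handler = handlers.get(request["method"])
        if handler is None:
            body = {"error": {"code": -32601, "message": "Method not found"}}
        else:
            body = {"result": handler(request.get("params", {}))}
        response = {"jsonrpc": "2.0", "id": request["id"], **body}
        stdout.write(json.dumps(response) + "\n")

handlers = {"tools/list": lambda params: {"tools": []}}

# In-memory streams stand in for the process's real stdin and stdout.
fake_stdin = io.StringIO(
    '{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}\n'
    '{"jsonrpc": "2.0", "id": 2, "method": "unknown/method"}\n'
)
fake_stdout = io.StringIO()
serve(fake_stdin, fake_stdout, handlers)
responses = [json.loads(line) for line in fake_stdout.getvalue().splitlines()]
```

Because the server lives and dies with the client process, this transport naturally produces the local, single-user deployments described above.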

A new wave of MCP marketplaces and server-hosting solutions is making MCP server discovery possible. Marketplaces such as Mintlify’s mcpt, Smithery, and OpenTools make it easier for developers to discover, share, and contribute new MCP servers, much as npm transformed package management for JavaScript and RapidAPI expanded API discovery. This layer will be crucial for standardizing access to high-quality MCP servers, allowing AI agents to dynamically select and integrate tools as needed.

As MCP adoption grows, infrastructure and tooling will play a crucial role in making the ecosystem more scalable, reliable, and accessible. Server generation tools such as Mintlify, Stainless, and Speakeasy are reducing the friction of creating MCP-compatible services, while hosting solutions like Cloudflare and Smithery are addressing deployment and scaling challenges. Meanwhile, connection management platforms such as Toolbase are beginning to simplify local-first MCP key management and proxying.

Future possibilities

We are only in the early stages of the evolution of agent-native architecture. Although MCP is exciting today, many problems remain unsolved when building and shipping with MCP.

Some of the things that need to be unlocked in the next iteration of the protocol include:

Hosting and Multi-tenancy

MCP supports a one-to-many relationship between an AI agent and its tools, but multi-tenant architectures (such as SaaS products) need to support many users accessing a shared MCP server at once. Making servers remote by default could be a near-term way to make MCP servers more accessible, but many enterprises will also want to host their own MCP servers with separate data and control planes.

Streamlined tooling for deploying and maintaining MCP servers at scale is the next piece that will enable broader adoption.

Authentication

Currently, MCP does not define a standard mechanism for clients to authenticate with servers, nor does it provide a framework for how MCP servers should securely manage and delegate authentication when interacting with third-party APIs. Authentication is left to individual implementations and deployment scenarios. In practice, MCP adoption so far seems to be focused on local integrations, which do not always require explicit authentication.

A better authentication paradigm could be one of the big unlocks for remote MCP adoption. From a developer’s perspective, a unified approach should cover:

- Client authentication: a standard method for client-server interactions, such as OAuth or API tokens.

- Tool authentication: helper functions or wrappers for authenticating with third-party APIs.

- Multi-user authentication: tenant-aware authentication for enterprise deployments.
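The three layers in the list above can be sketched together. This is a toy under stated assumptions, not a proposed standard: the in-memory stores, tenant names, tokens, and function names are all hypothetical, and a real deployment would use OAuth flows and a secrets manager.

```python
import hmac
from typing import Callable

# Hypothetical in-memory stores, keyed by tenant.
CLIENT_TOKENS = {"tenant-a": "token-a", "tenant-b": "token-b"}
THIRD_PARTY_KEYS = {
    "tenant-a": {"email_api": "key-a"},
    "tenant-b": {"email_api": "key-b"},
}

def authenticate_client(tenant: str, token: str) -> bool:
    """Client authentication: constant-time check of the presented token."""
    expected = CLIENT_TOKENS.get(tenant, "")
    return bool(expected) and hmac.compare_digest(expected, token)

def with_tool_credentials(tenant: str, service: str,
                          call: Callable[[str], str]) -> str:
    """Tool authentication: inject the tenant's own third-party key into a call."""
    return call(THIRD_PARTY_KEYS[tenant][service])

def handle(tenant: str, token: str) -> str:
    """Multi-user authentication: every step is resolved per tenant."""
    if not authenticate_client(tenant, token):
        return "401 unauthorized"
    return with_tool_credentials(
        tenant, "email_api", lambda key: f"called email_api with {key}"
    )

ok = handle("tenant-b", "token-b")
denied = handle("tenant-b", "token-a")
```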

Authorization

Even if a tool is authenticated, who should be allowed to use it, and how granular should their permissions be? MCP lacks a built-in permission model, so access control is at the session level, meaning a tool is either accessible or completely restricted. Although future authorization mechanisms could provide finer-grained controls, the current approach relies on an OAuth 2.1-based authorization flow that grants session-wide access once authenticated. As more agents and tools are introduced, this brings additional complexity: each agent typically needs its own session with unique authorization credentials, resulting in an ever-growing web of session-based access management.
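The difference between session-level access and a finer-grained model can be shown in a few lines. The scope names and the `TOOL_SCOPES` mapping are hypothetical; MCP does not define them.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Session:
    user: str
    scopes: set = field(default_factory=set)  # e.g. {"db:read"}

# Hypothetical mapping from tool name to the scope it would require.
TOOL_SCOPES = {"run_select": "db:read", "drop_table": "db:admin"}

def session_level_allows(session: Optional[Session], tool: str) -> bool:
    """Session-level control: an authenticated session may call any tool."""
    return session is not None

def scoped_allows(session: Optional[Session], tool: str) -> bool:
    """Finer-grained alternative: the session must hold the tool's scope."""
    return session is not None and TOOL_SCOPES.get(tool) in session.scopes

analyst = Session(user="ana", scopes={"db:read"})
coarse = session_level_allows(analyst, "drop_table")   # allowed
fine = scoped_allows(analyst, "drop_table")            # refused
```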

Gateway

As MCP adoption scales, a gateway could act as a centralized layer for authentication, authorization, traffic management, and tool selection. Similar to an API gateway, it would enforce access control, route requests to the correct MCP server, handle load balancing, and cache responses to improve efficiency. This is particularly important for multi-tenant environments, where different users and agents require different permissions. A standardized gateway would simplify client-server interactions, improve security, and provide better observability, making MCP deployments more scalable and manageable.
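A minimal sketch of those gateway responsibilities, assuming plain callables as stand-ins for MCP servers: it checks permissions, round-robins across backends, and caches responses. The `Gateway` class and its arguments are hypothetical, and caching every response is a simplification that would be wrong for tools with side effects.

```python
from itertools import cycle

class Gateway:
    def __init__(self, routes: dict, permissions: dict):
        # routes: tool name -> list of backend callables (stand-ins for servers)
        self._backends = {tool: cycle(servers) for tool, servers in routes.items()}
        self._permissions = permissions  # user -> set of allowed tool names
        self._cache = {}

    def call(self, user: str, tool: str, argument: str) -> str:
        if tool not in self._permissions.get(user, set()):
            raise PermissionError(f"{user} may not call {tool}")
        key = (tool, argument)
        if key not in self._cache:
            backend = next(self._backends[tool])  # round-robin load balancing
            self._cache[key] = backend(argument)
        return self._cache[key]

gateway = Gateway(
    routes={"lookup": [lambda q: f"a:{q}", lambda q: f"b:{q}"]},
    permissions={"ana": {"lookup"}},
)
results = [
    gateway.call("ana", "lookup", "x"),  # served by the first backend
    gateway.call("ana", "lookup", "y"),  # served by the second backend
    gateway.call("ana", "lookup", "x"),  # served from the cache
]
try:
    gateway.call("bob", "lookup", "x")
    bob_blocked = False
except PermissionError:
    bob_blocked = True
```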

MCP server discoverability and usability

Currently, finding and setting up an MCP server is a manual process that requires developers to locate endpoints or scripts, configure authentication, and ensure compatibility between the server and the client. Integrating new servers is very time-consuming, and AI agents cannot dynamically discover or adapt to available servers.

However, based on Anthropic’s talk at the AI Engineer conference last month, an MCP server registry and discovery protocol appears to be on the way. This could unlock the next phase of adoption for MCP servers.

Execution environment

Most AI workflows require sequentially invoking multiple tools — yet MCP lacks a built-in workflow concept to manage these steps. It is not ideal to require each client to implement resiliency and retry capabilities. Although today we see developers exploring solutions like Inngest to address this, elevating stateful execution to a first-class concept would clarify the execution model for most developers.
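To show what each client currently has to build for itself, here is a small sketch of retryable, resumable sequential execution. It is plain Python rather than Inngest or any other workflow engine, and the step functions and checkpoint layout are hypothetical.

```python
def run_workflow(steps, checkpoint: dict, max_attempts: int = 3):
    """Run steps in order, skipping any already recorded in `checkpoint`."""
    value = checkpoint.get("value")
    for index, step in enumerate(steps):
        if index < checkpoint.get("done", 0):
            continue  # this step finished in an earlier run
        for attempt in range(1, max_attempts + 1):
            try:
                value = step(value)
                break
            except ConnectionError:
                if attempt == max_attempts:
                    raise  # give up; the checkpoint still marks progress so far
        checkpoint.update(done=index + 1, value=value)
    return value

calls = {"fetch": 0}

def fetch(_):
    """Hypothetical tool call that fails once before succeeding."""
    calls["fetch"] += 1
    if calls["fetch"] == 1:
        raise ConnectionError("transient failure")
    return "rows"

def summarize(data):
    return f"summary of {data}"

state = {}
result = run_workflow([fetch, summarize], state)
resumed = run_workflow([fetch, summarize], state)  # nothing is re-executed
```

Persisting `state` between runs is what makes the workflow resumable; a protocol-level concept of stateful execution would remove the need for every client to reinvent this.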

Standard client experience

One common question we hear from the developer community is how to think about tool selection when building an MCP client: does everyone need to implement their own RAG for tools, or is there a layer waiting to be standardized?

Besides tool selection, there is no unified UI/UX pattern for invoking tools either (we have seen various patterns ranging from slash commands to pure natural language). A standard client layer for tool discovery, ranking, and execution can help create a more predictable experience for both developers and users.
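As a toy version of the tool-selection layer, the sketch below ranks tools by word overlap between the user’s request and each tool description. Word overlap is a deliberately crude stand-in for the embedding-based retrieval a real client would likely use, and the tool list is hypothetical.

```python
def rank_tools(query: str, tools: list[dict], top_k: int = 1) -> list[str]:
    """Return the names of the tools whose descriptions best match the query."""
    query_words = set(query.lower().split())

    def score(tool: dict) -> int:
        return len(query_words & set(tool["description"].lower().split()))

    ranked = sorted(tools, key=score, reverse=True)
    return [tool["name"] for tool in ranked[:top_k] if score(tool) > 0]

# Hypothetical tool catalogue, as a client might assemble from several servers.
CATALOGUE = [
    {"name": "query_db", "description": "run a read-only sql query against the database"},
    {"name": "send_email", "description": "send an email message to a recipient"},
    {"name": "make_image", "description": "generate an image from a text prompt"},
]

best = rank_tools("send an email to the team", CATALOGUE)
none = rank_tools("zzz", CATALOGUE)
```

Only the top-ranked tools would then be placed in the model’s context, which keeps prompts small as the number of installed servers grows.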

Debugging

Developers of MCP servers often find it hard to make the same MCP server work reliably across clients. More often than not, each MCP client has its own quirks. Client-side traces are either missing or hard to find, which makes debugging MCP servers extremely difficult. As the world begins to build more remote-first MCP servers, a new set of tools is needed to streamline the development experience across local and remote environments.

Implications of AI tooling

MCP’s dev experience reminds me of API development in the 2010s. The paradigm is new and exciting, but the toolchains are in their early days. If we fast-forward a few years, what would happen if MCP became the de facto standard for AI-powered workflows? Some predictions:

- The competitive advantage of dev-first companies will evolve from shipping the best API design to also shipping the best collection of tools for agents to use. If MCP gains the ability to discover tools autonomously, providers of APIs and SDKs will need to make sure their tooling is easily discoverable through search and differentiated enough for an agent to pick it for a particular task. This can be a lot more granular and specific than what human developers look for.

- A new pricing model may emerge if every app becomes an MCP client and every API becomes an MCP server: agents may pick tools more dynamically, based on a combination of speed, cost, and relevance. This may lead to a more market-driven tool-adoption process that picks the best-performing and most modular tool instead of the most widely adopted one.

- Documentation will become a critical piece of MCP infrastructure as companies will need to design tools and APIs with clear, machine-readable formats (e.g., llms.txt) and make MCP servers a de facto artifact based on existing documentation.

- APIs alone are no longer enough, but they can be great starting points. Developers will discover that the mapping from API to tools is rarely 1:1. Tools are a higher-level abstraction that makes the most sense to agents at the time of task execution: instead of simply calling send_email(), an agent may opt for a draft_email_and_send() function that bundles multiple API calls to minimize latency. MCP server design will be scenario- and use-case-centric instead of API-centric.

- There will be a new mode of hosting if every piece of software becomes an MCP client by default, because the workload characteristics will differ from traditional website hosting. Every client will be multi-step in nature and require execution guarantees like resumability, retries, and long-running task management. Hosting providers will also need real-time load balancing across different MCP servers to optimize for cost, latency, and performance, allowing AI agents to choose the most efficient tool at any given moment.
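The point above about tools not mapping 1:1 to APIs can be illustrated with the `draft_email_and_send()` example. In this sketch the three lower-level functions are hypothetical stand-ins for separate API calls; only the composite is exposed to the agent.

```python
# Hypothetical low-level API calls; each stands in for one network round trip.
def create_draft(to: str, subject: str, body: str) -> dict:
    return {"id": 1, "to": to, "subject": subject, "body": body}

def validate_draft(draft: dict) -> bool:
    return "@" in draft["to"] and bool(draft["subject"])

def send_draft(draft: dict) -> str:
    return f"sent draft {draft['id']} to {draft['to']}"

def draft_email_and_send(to: str, subject: str, body: str) -> str:
    """One agent-facing tool that wraps three API calls in a single invocation."""
    draft = create_draft(to, subject, body)
    if not validate_draft(draft):
        return "error: draft is missing a valid recipient or subject"
    return send_draft(draft)

sent = draft_email_and_send("a@example.com", "Hi", "See you at 3pm.")
failed = draft_email_and_send("not-an-address", "Hi", "See you at 3pm.")
```

The agent makes one tool call and one decision instead of three, which is where the latency saving comes from.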

The future

MCP is reshaping the AI agent ecosystem. The next wave of progress will depend on how we address the fundamental challenges. If done well, MCP can become the default interface for AI to interact with tools, ushering in a new generation of autonomous, multimodal, and deeply integrated AI experiences.

If widely adopted, MCP could represent a shift in the way tools are built, used, and monetized. We are excited to see where the market takes it. This year will be a critical one: Will we see the rise of a unified MCP marketplace? Will authentication become seamless for AI agents? Can multi-step execution be formalized into the protocol?
