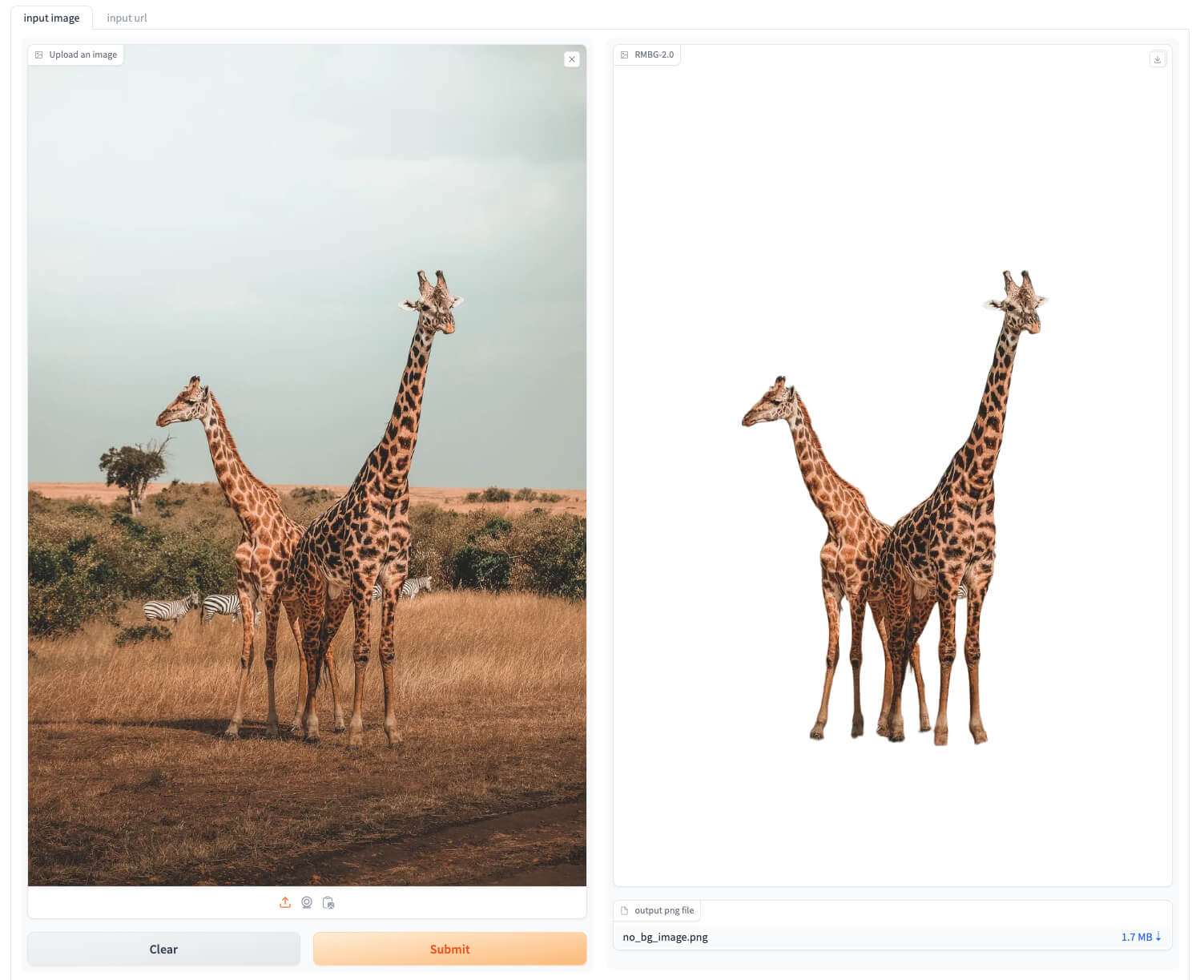

DeepSeek-GRM – A General Reward Model Jointly Released by DeepSeek and Tsinghua University

What is DeepSeek-GRM?

DeepSeek-GRM is a generalist reward modeling framework jointly proposed by researchers from DeepSeek and Tsinghua University. Built on pointwise generative reward modeling (GRM) and Self-Principled Critique Tuning (SPCT), it substantially improves both the quality of reward models and their inference-time scalability. Instead of directly outputting a single scalar, the model generates structured evaluative text, consisting of evaluation principles and a detailed critique of each response, from which the reward scores are extracted. DeepSeek-GRM performs strongly across multiple comprehensive reward model benchmarks, outperforming existing methods and several public models. Its inference-time scaling is particularly notable: performance keeps improving as the number of parallel samples increases.
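To make the idea of "scores extracted from generated text" concrete, here is a minimal Python sketch. The output layout (a "Principles:" section, a "Critique:" section, and a closing `Final Scores: [[...]]` line) and the helper name `parse_pointwise_scores` are hypothetical, chosen only for illustration; the actual DeepSeek-GRM prompt and output format may differ.

```python
import re

# Hypothetical GRM output. The real DeepSeek-GRM format is an assumption here.
SAMPLE_OUTPUT = """Principles:
1. Correctness (weight 50%): the answer must be factually right.
2. Clarity (weight 30%): the explanation should be easy to follow.
3. Conciseness (weight 20%): no unnecessary detail.

Critique:
Response 1 gives the right answer with a clear derivation.
Response 2 contains an arithmetic error in the second step.

Final Scores: [[8, 3]]
"""


def parse_pointwise_scores(text: str, num_responses: int) -> list[int]:
    """Extract one integer score per response from the (assumed) final score line."""
    match = re.search(r"Final Scores:\s*\[\[([^\]]*)\]\]", text)
    if match is None:
        raise ValueError("no score line found in generated critique")
    # int() tolerates the surrounding spaces left by split(",")
    scores = [int(s) for s in match.group(1).split(",")]
    if len(scores) != num_responses:
        raise ValueError(f"expected {num_responses} scores, got {len(scores)}")
    return scores


scores = parse_pointwise_scores(SAMPLE_OUTPUT, num_responses=2)
print(scores)  # [8, 3]
```

Because every response receives its own score, the same parser works for one, two, or many candidate responses; the index with the highest score is the preferred response.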

The Main Functions of DeepSeek-GRM

- Intelligent Q&A and Dialogue: Quickly answers many kinds of questions, covering science, history and culture, everyday knowledge, and technical topics. It can hold natural conversations with users, understand their intentions and emotions, and respond appropriately.

- Content Generation: Able to generate various types of content, including news reports, academic papers, business copywriting, novels, and stories.

- Data Analysis and Visualization: Capable of processing data from Excel spreadsheets, CSV files, etc., performing data cleaning, statistical analysis, and generating visualization charts.

- Reasoning and Logical Ability: Performs well in mathematics and logical reasoning, and can carry out multi-step reasoning to solve complex problems.

- API Integration: Provides APIs that developers can use to integrate it into their own applications, broadening its range of uses.

The Technical Principles of DeepSeek-GRM

- Pointwise Generative Reward Modeling (GRM): Produces reward scores by generating structured evaluation text (evaluation principles plus a detailed critique of each response) rather than directly outputting a single scalar. This makes the model flexible in the inputs it accepts, whether a single response, a pair, or several responses, and creates room for inference-time scaling.

- Self-Principled Critique Tuning (SPCT): Trains the GRM to adaptively generate high-quality principles and accurate critiques in two stages: rejective fine-tuning and rule-based online reinforcement learning.

- Meta Reward Model (Meta RM): Rates the quality of the principles and critiques generated by the GRM, keeps only the high-quality samples for voting, and thereby further improves inference-time scaling performance.

- Multi-Token Prediction (MTP): Enables the model to predict multiple tokens in a single forward pass, improving training efficiency and inference speed.

- Group Relative Policy Optimization (GRPO): Optimizes the model's policy by sampling a group of responses to the same prompt and scoring each one relative to the rest of the group, so that responses better than the group average are reinforced.

- Mixture of Experts (MoE): Routes each token to a small subset of expert networks, so only part of the model is active at a time; this cuts unnecessary computation and improves speed and flexibility on complex tasks.

- FP8 Mixed Precision Training: Performs much of the training computation in 8-bit floating point (FP8) while keeping higher precision where numerical stability requires it, reducing compute and memory costs and saving both time and money.
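The voting and Meta RM steps in the list above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: each sampled judgment yields one integer score per response, voting sums those scores across samples, and the Meta RM supplies one scalar reliability rating per sample. The class and function names (`Sample`, `vote`, `meta_guided_vote`) and the toy numbers are hypothetical; a real system would obtain them from the GRM and Meta RM models.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One sampled GRM judgment (hypothetical structure for illustration)."""
    scores: list[int]   # pointwise score for each candidate response
    meta_score: float   # assumed Meta RM rating of how reliable this judgment is


def vote(samples: list[Sample]) -> list[int]:
    """Plain voting: sum each response's score across all sampled judgments."""
    num_responses = len(samples[0].scores)
    return [sum(s.scores[i] for s in samples) for i in range(num_responses)]


def meta_guided_vote(samples: list[Sample], k_meta: int) -> list[int]:
    """Keep the k_meta judgments the Meta RM rates highest, then vote over them."""
    kept = sorted(samples, key=lambda s: s.meta_score, reverse=True)[:k_meta]
    return vote(kept)


# Toy data: four sampled judgments over two candidate responses.
samples = [
    Sample(scores=[8, 3], meta_score=0.9),
    Sample(scores=[7, 4], meta_score=0.8),
    Sample(scores=[2, 9], meta_score=0.1),  # unreliable judgment the Meta RM distrusts
    Sample(scores=[6, 5], meta_score=0.7),
]

print(vote(samples))                        # [23, 21]
print(meta_guided_vote(samples, k_meta=3))  # [21, 12]
```

With all four samples the two responses end up close (23 vs. 21) because one unreliable judgment strongly favors the second response; filtering by the Meta RM drops that judgment and widens the margin (21 vs. 12).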
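For the Group Relative Policy Optimization item, the core step is turning raw rewards into group-relative advantages. The sketch below assumes the commonly described form A_i = (r_i - mean(r)) / std(r), computed over a group of responses sampled for the same prompt; the clipped policy-gradient objective and KL penalty are omitted, and the function name and toy rewards are hypothetical rather than taken from DeepSeek-GRM.

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Normalize each reward against its group: (r - mean) / (std + eps).

    All rewards must come from responses sampled for the same prompt.
    eps avoids division by zero when every reward in the group is equal.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population standard deviation of the group
    return [(r - mean) / (std + eps) for r in rewards]


# Toy rewards for four responses to one prompt: only the first is judged correct.
rewards = [1.0, 0.0, 0.0, 0.0]
advantages = group_relative_advantages(rewards)
print([round(a, 3) for a in advantages])  # [1.732, -0.577, -0.577, -0.577]
```

If every response in a group receives the same reward, all advantages are zero, so that group contributes no gradient signal; only responses that differ from their siblings are pushed up or down.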

The Project Address of DeepSeek-GRM

- arXiv Technical Paper: https://arxiv.org/pdf/2504.02495

Application Scenarios of DeepSeek-GRM

- Precision Agriculture Management: Real-time monitoring of parameters such as soil moisture and light intensity through sensors, automatically adjusting irrigation and fertilization schemes to improve resource utilization efficiency.

- Intelligent Driving: Achieving precise environmental perception and decision-making by processing multi-source sensor data through deep learning models.

- Natural Language Processing (NLP): Includes text generation, dialogue systems, machine translation, sentiment analysis, text classification, information extraction, etc.

- Code Generation and Understanding: Supports automatic code completion, code generation, code optimization, and error detection and repair across multiple programming languages.

- Knowledge Question Answering and Search Enhancement: Combines with search engines to provide real-time and accurate knowledge question answering.
