o3 and o4-mini Arrive! OpenAI Advances Its Strongest ‘Visual Reasoning’, Open-Sources an AI Programming Agent, and Is Reported to Be in Talks for Its Largest-Ever Acquisition

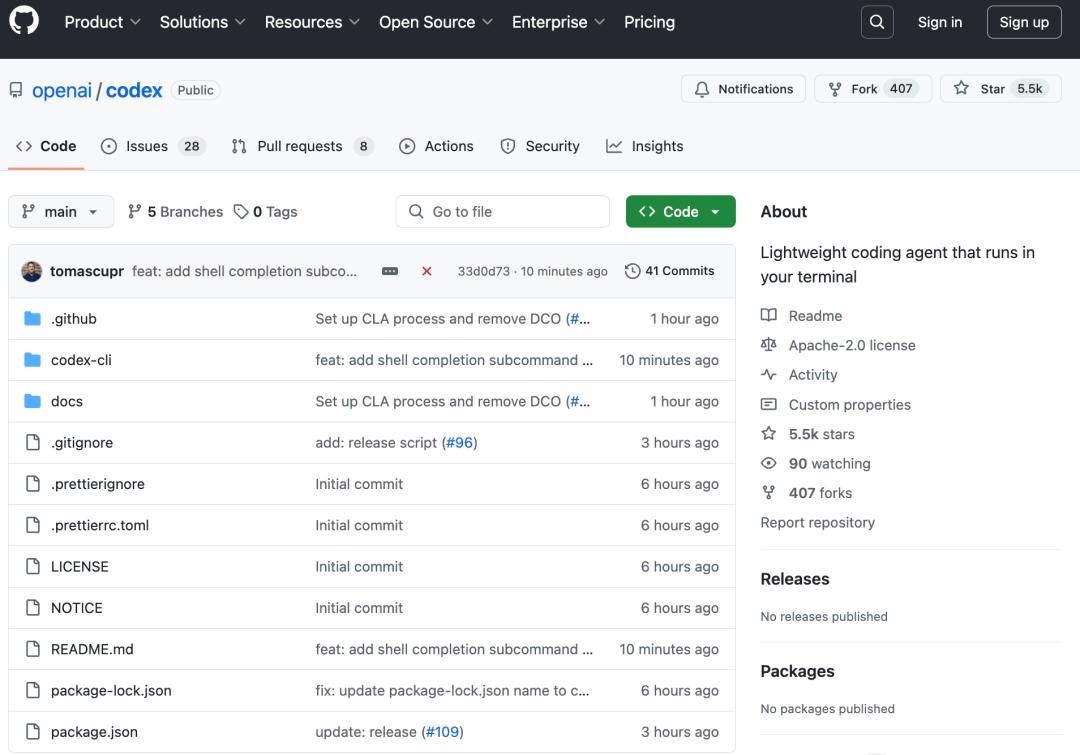

OpenAI has released two visual reasoning models, OpenAI o3 and o4-mini, the first models in the o-series that can reason with images in their chain of thought. OpenAI has also open-sourced a lightweight programming agent, Codex CLI. Less than 7 hours after release, the project had already passed 5,500 stars.

The two models differ in positioning: OpenAI o3 is OpenAI's most powerful reasoning model, while OpenAI o4-mini is a smaller model optimized for fast, cost-effective reasoning. Both incorporate images into the chain of thought for the first time and can autonomously call tools to produce answers, usually within one minute.

Codex CLI, the programming agent open-sourced by OpenAI, is designed to make the most of the models' reasoning capabilities and runs on the user's own device. Today also brought news of a major acquisition in the AI programming field. According to media reports, OpenAI is in talks to acquire the AI-assisted programming tool Windsurf (formerly known as Codeium) for $3 billion (approximately RMB 21.9 billion), which would be the largest acquisition OpenAI has made to date.

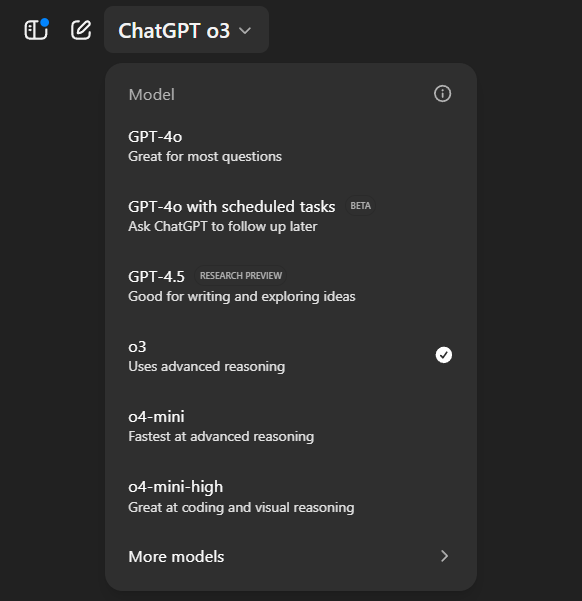

Starting today, ChatGPT Plus, Pro, and Team users can use o3, o4-mini, and o4-mini-high, which replace o1, o3-mini, and o3-mini-high. ChatGPT Enterprise and Education users will gain access in one week. Free users can try o4-mini by selecting “Think” before submitting their queries. OpenAI expects to release OpenAI o3-pro with full tool support in a few weeks. Until then, Pro users can still access o1-pro. o3 and o4-mini are available to developers through the Chat Completions API and the Responses API.

OpenAI co-founder and CEO Sam Altman praised o3 and o4-mini as “genius level” in a post on the social media platform X.
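For developers, a request that pairs a question with an image might look roughly like the sketch below. It only builds the JSON body in the Chat Completions message format (a text part plus an `image_url` part carrying a base64 data URL) and sends nothing. The helper name `build_image_question` and the sample question are illustrative assumptions, not part of OpenAI's announcement.

```python
import base64
import json


def build_image_question(model: str, question: str, image_bytes: bytes,
                         mime_type: str = "image/png") -> dict:
    """Build a Chat Completions request body that pairs text with one image.

    Hypothetical helper for illustration; it does not call the API.
    """
    # The image is embedded inline as a base64 data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }


# Placeholder bytes stand in for a real photo.
body = build_image_question("o4-mini", "Which ship is the largest?", b"abc")
print(json.dumps(body, indent=2))
```

The resulting dictionary would be serialized and posted to the API by whichever HTTP client or SDK the developer already uses.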

Windsurf, officially known as Exafunction Inc., is the company reportedly in acquisition talks with OpenAI. Founded in 2021, it has raised over $200 million in venture capital funding, with a valuation of $3 billion. Investors including Kleiner Perkins and General Catalyst have also recently held financing discussions with the company. In November last year, Windsurf launched what it billed as the world’s first agentic IDE.

AI programming startups have seen a surge in funding recently. Earlier this year, Anysphere, the startup behind Cursor, was in talks with investors to raise new funding at a valuation close to $10 billion.

Previously, OpenAI acquired the vector database company Rockset and the remote collaboration platform Multi. If the Windsurf deal is completed, OpenAI will further strengthen its AI programming assistant capabilities and compete more directly with well-known players in AI programming such as Anthropic, Microsoft’s GitHub, and Anysphere.

The terms of the deal have not yet been finalized, and the negotiations are still subject to change.

Thinking with images: handling hand-drawn sketches and inverted text

The o3 and o4-mini models can integrate images directly into the chain of thought and reason with them. They are also trained to reason about when to use which tools.

Specifically, the models can interpret whiteboard photos, textbook charts, or hand-drawn sketches uploaded by users. If an image is blurry, inverted, or otherwise unclear, the model can use tools to manipulate it on the fly, for example by rotating, scaling, or transforming it. It can also combine this with other tools for tasks such as Python data analysis, web search, and image generation. All of these steps are part of the model’s reasoning process.
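The image operations mentioned above (rotating and scaling) can be illustrated with a minimal sketch. This is not OpenAI's tooling: the image is modeled as a plain grid of grayscale values, and the function names are hypothetical, chosen only to show what each transformation does to the pixels.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels in row-major order


def rotate_180(img: Image) -> Image:
    """Turn an upside-down image the right way up."""
    return [row[::-1] for row in img[::-1]]


def rotate_90_clockwise(img: Image) -> Image:
    """Rotate the image a quarter turn clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def scale_nearest(img: Image, factor: int) -> Image:
    """Enlarge by an integer factor using nearest-neighbour sampling."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    return [
        [pixel for pixel in row for _ in range(factor)]
        for row in img
        for _ in range(factor)
    ]


# A tiny 2x2 "photo" that was uploaded upside down.
inverted = [[4, 3], [2, 1]]
upright = rotate_180(inverted)
zoomed = scale_nearest(upright, 2)
```

A real pipeline would apply the same ideas to actual image data with an imaging library; the grid version just keeps the example self-contained.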

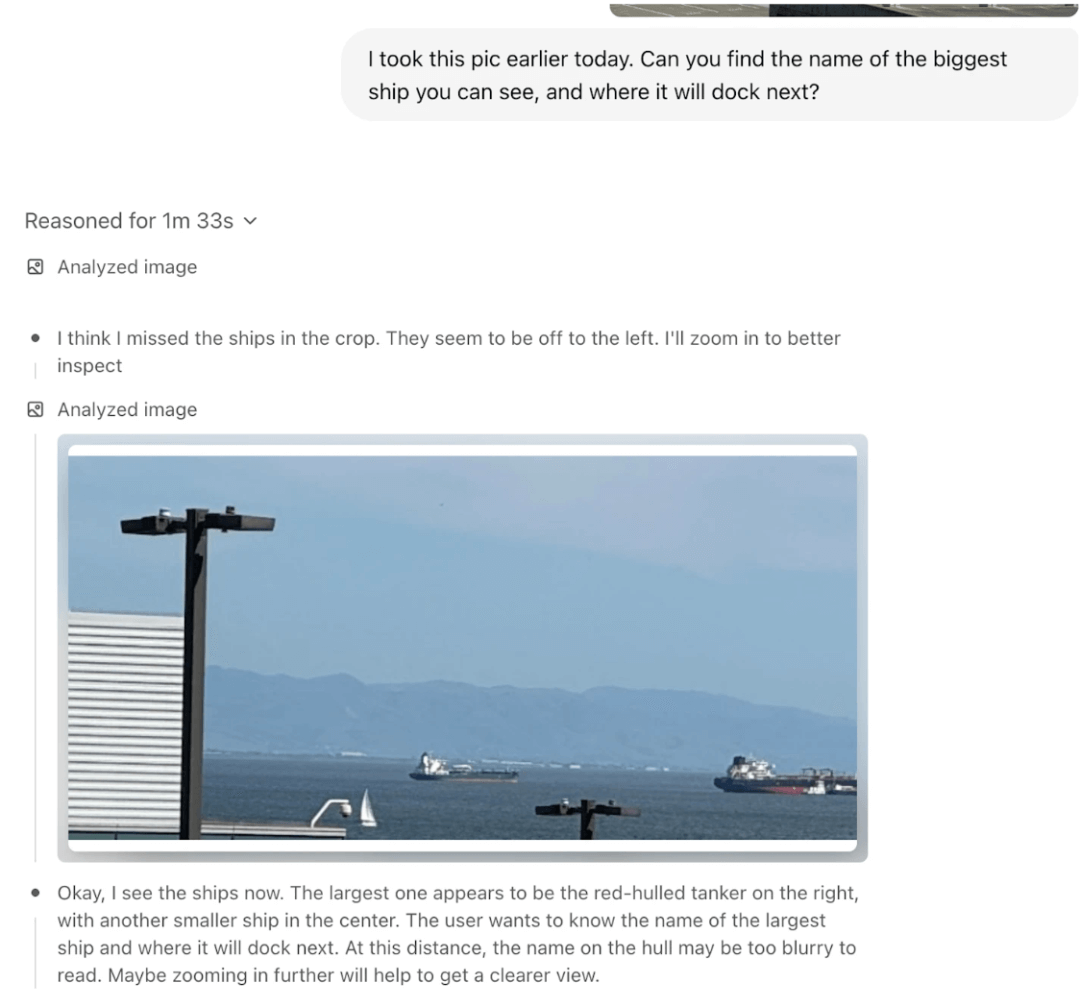

If a user uploads a casually taken photo, they can ask the model questions about the picture, such as “the name of the largest ship and where it is berthed”.

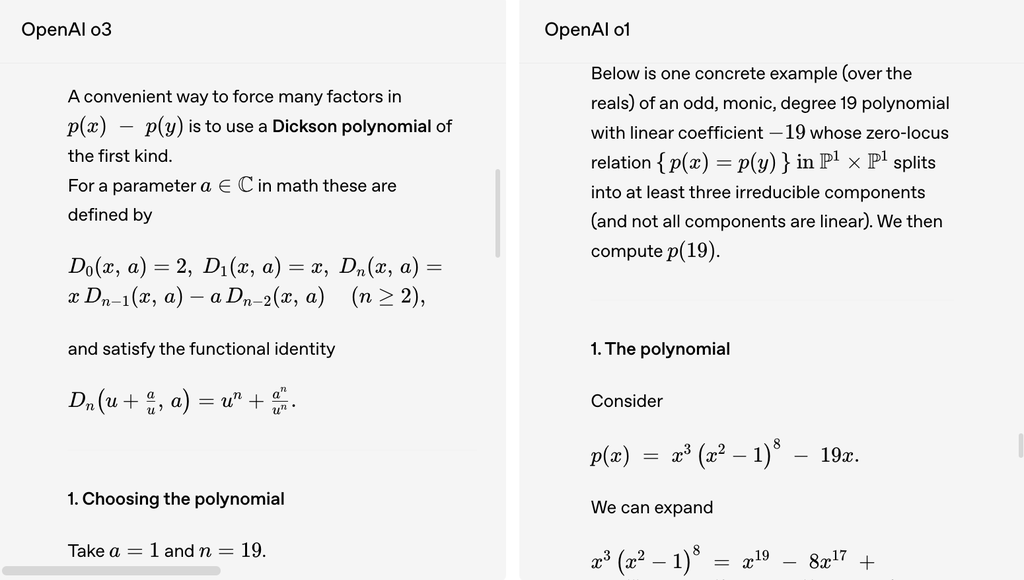

For example, when solving a math problem from its built-in knowledge alone, OpenAI o3 gives the correct answer without using search, while o1 does not.

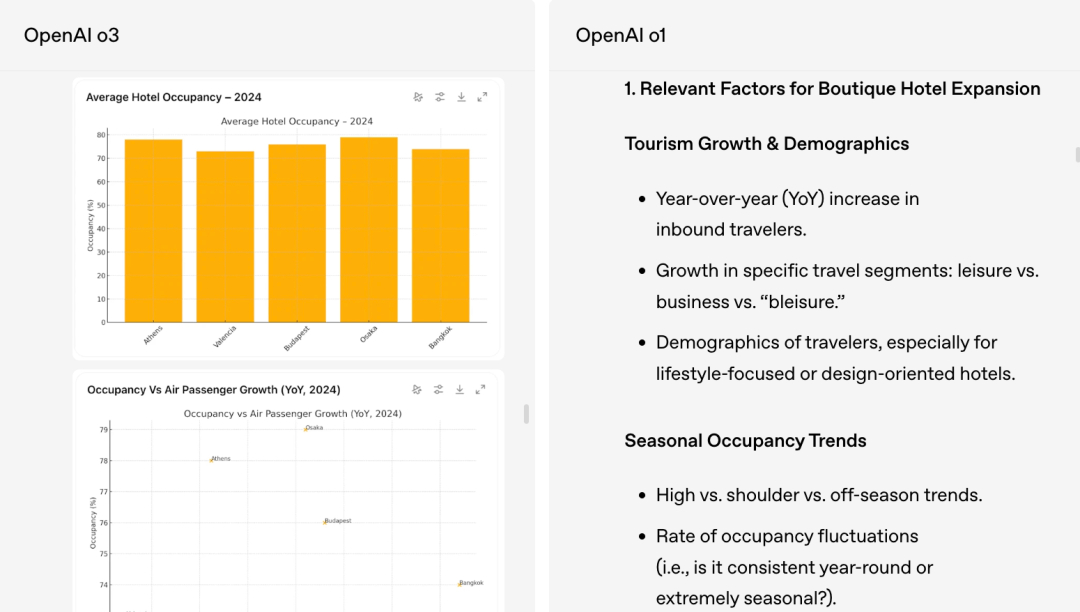

Asked to handle a complex request, “collecting regional travel data, economic statistics, and hotel occupancy rates, visually analyzing trends, and recommending ideal expansion locations”, OpenAI o3 cited more industry-related sources and proposed a detailed plan. It also anticipated real-world challenges and offered proactive mitigation measures. The results from o1, by contrast, were more general.

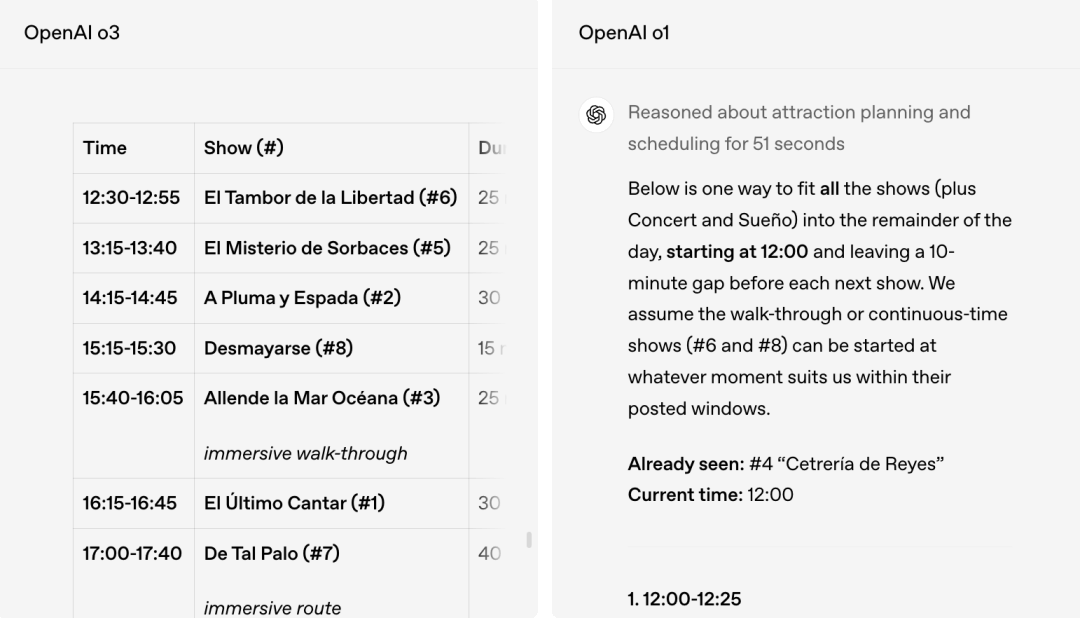

Given a photo of a “handheld program schedule” uploaded by the user, OpenAI o3 accurately takes the schedule into account and produces a workable plan, while o1 gets some of the program times wrong.

The models can react and adjust based on the information they encounter. For example, a model can run multiple web searches through search providers, review the results, and try new searches when more information is needed. This lets it handle tasks that require up-to-date information beyond its built-in knowledge, extended reasoning, synthesis, and output across multiple modalities.
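The search, review, and search-again behavior described above can be sketched as a simple loop. This is an illustration under stated assumptions, not OpenAI's implementation: `research`, its callback parameters, and the stubbed index are all hypothetical, and the "search provider" is just a dictionary lookup.

```python
from typing import Callable, List


def research(question: str,
             search: Callable[[str], List[str]],
             is_sufficient: Callable[[List[str]], bool],
             refine: Callable[[str, List[str]], str],
             max_rounds: int = 3) -> List[str]:
    """Search, review the results, and search again while information is missing."""
    query = question
    findings: List[str] = []
    for _ in range(max_rounds):
        findings.extend(search(query))
        if is_sufficient(findings):
            break  # enough material gathered, stop searching
        # Otherwise reformulate the query using what has been found so far.
        query = refine(question, findings)
    return findings


# Stub search backend: a fixed mapping from query to snippets (made-up data).
FAKE_INDEX = {
    "hotel occupancy": ["occupancy report"],
    "hotel occupancy by region": ["regional breakdown"],
}

results = research(
    "hotel occupancy",
    search=lambda q: FAKE_INDEX.get(q, []),
    is_sufficient=lambda found: len(found) >= 2,
    refine=lambda q, found: q + " by region",
)
print(results)
```

The `max_rounds` cap is a design choice in this sketch: it bounds the number of searches so that a question with no good answer cannot loop forever.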

The new models significantly outperform their predecessors on multimodal tasks, with visual reasoning accuracy of up to 95.7%

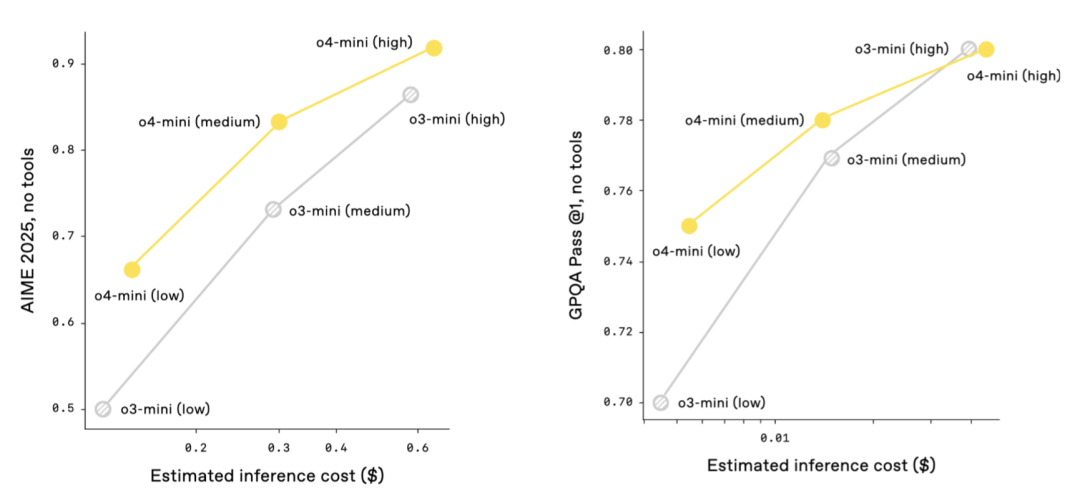

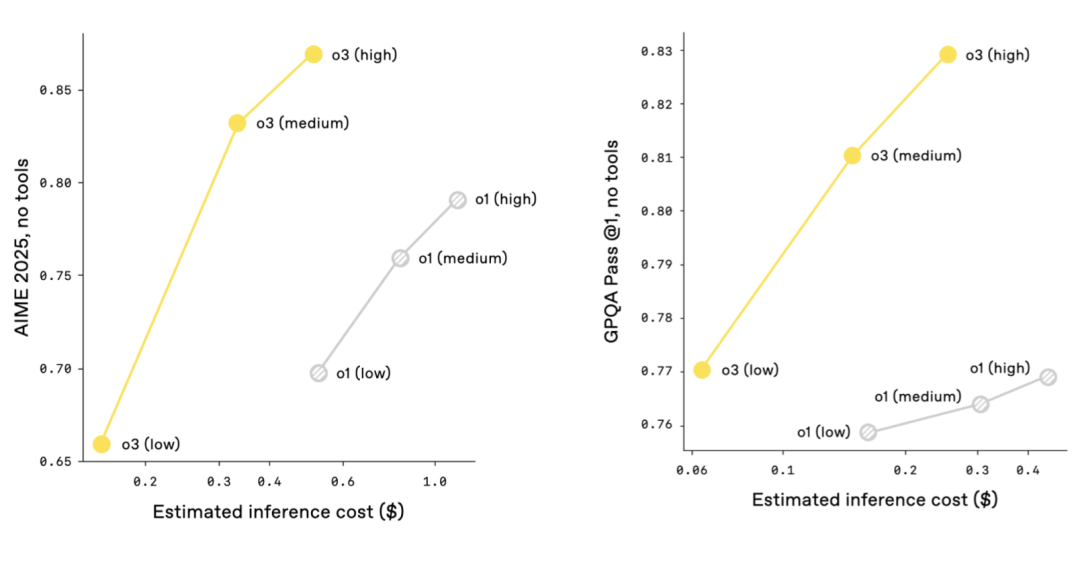

In terms of cost and performance, OpenAI expects that for most practical applications, o3 and o4-mini will be smarter and cheaper than o1 and o3-mini, respectively.

Comparison of cost and performance between o4-mini and o3-mini:

Comparison of cost and performance between o3 and o1:

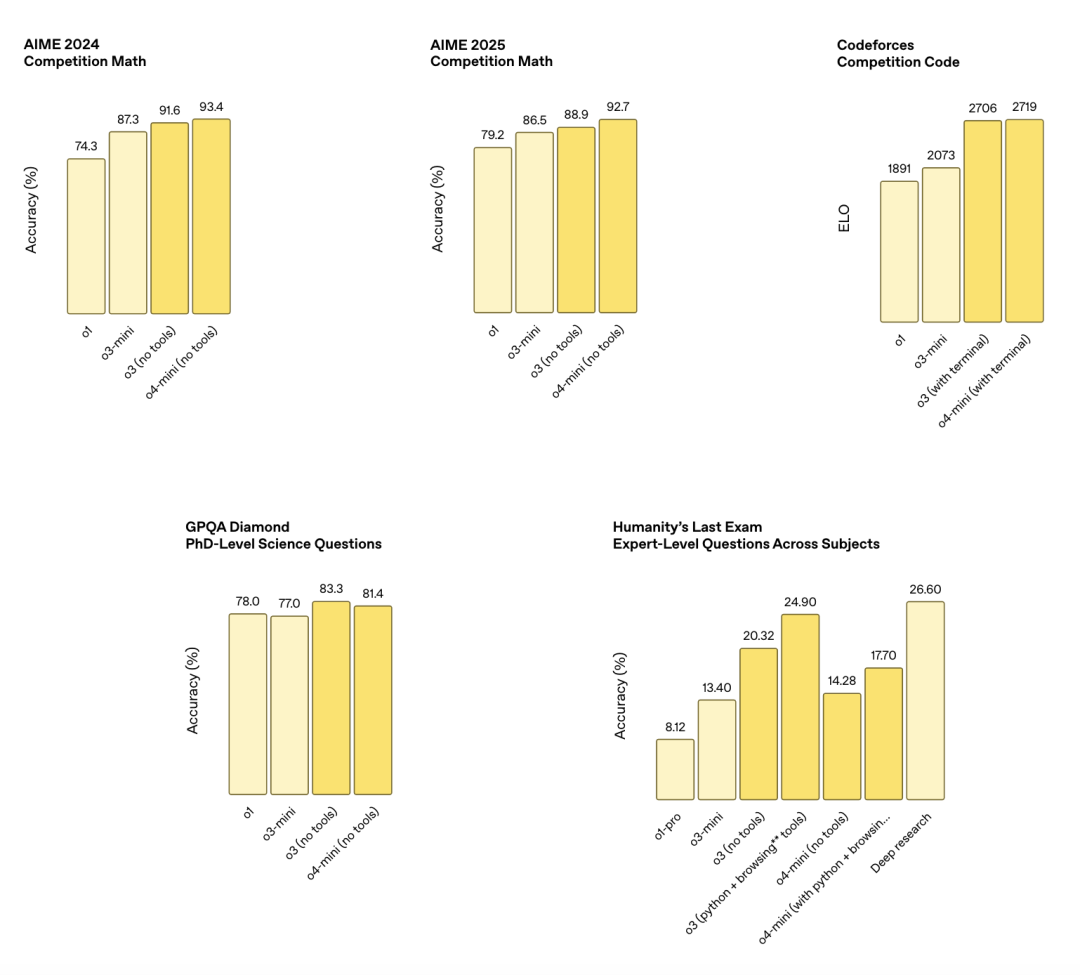

OpenAI tested OpenAI o3 and o4-mini on a series of human exams and machine learning benchmark tests. The results show that these new visual reasoning models significantly outperform their predecessors in all multimodal tasks across the tests.

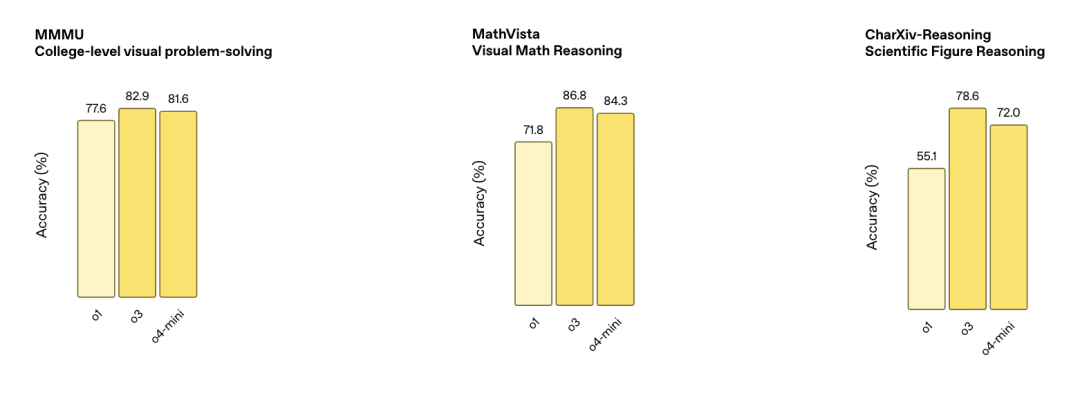

In particular, thinking with images, without relying on browsing, delivers significant improvements on almost all of the perception benchmarks evaluated. OpenAI o3 and o4-mini reach new state-of-the-art performance in areas such as STEM question answering (MMMU, MathVista), chart reading and reasoning (CharXiv), perception primitives (VLMs are Blind), and visual search (V*). On V*, the new models’ visual reasoning approach achieves an accuracy of 95.7%.

o3 performs better on visual tasks such as analyzing images, charts, and graphs. In evaluations by external experts, o3 makes 20% fewer major errors than OpenAI o1 on difficult, real-world tasks. Early testers have highlighted its analytical rigor in areas such as biology, mathematics, and engineering, as well as its ability to generate and critically evaluate novel hypotheses.

In expert evaluations, o4-mini outperforms o3-mini on non-STEM tasks and in fields such as data science. o4-mini also supports significantly higher usage limits than o3-mini, which makes it well suited to high-volume, high-throughput use.

External expert evaluators believe that both of these models demonstrate better instruction-following abilities and more useful, verifiable responses compared to previous models. In addition, the new models can make responses more personalized in natural conversations by referring to memory and past interactions.

Evaluation results of multimodal capabilities:

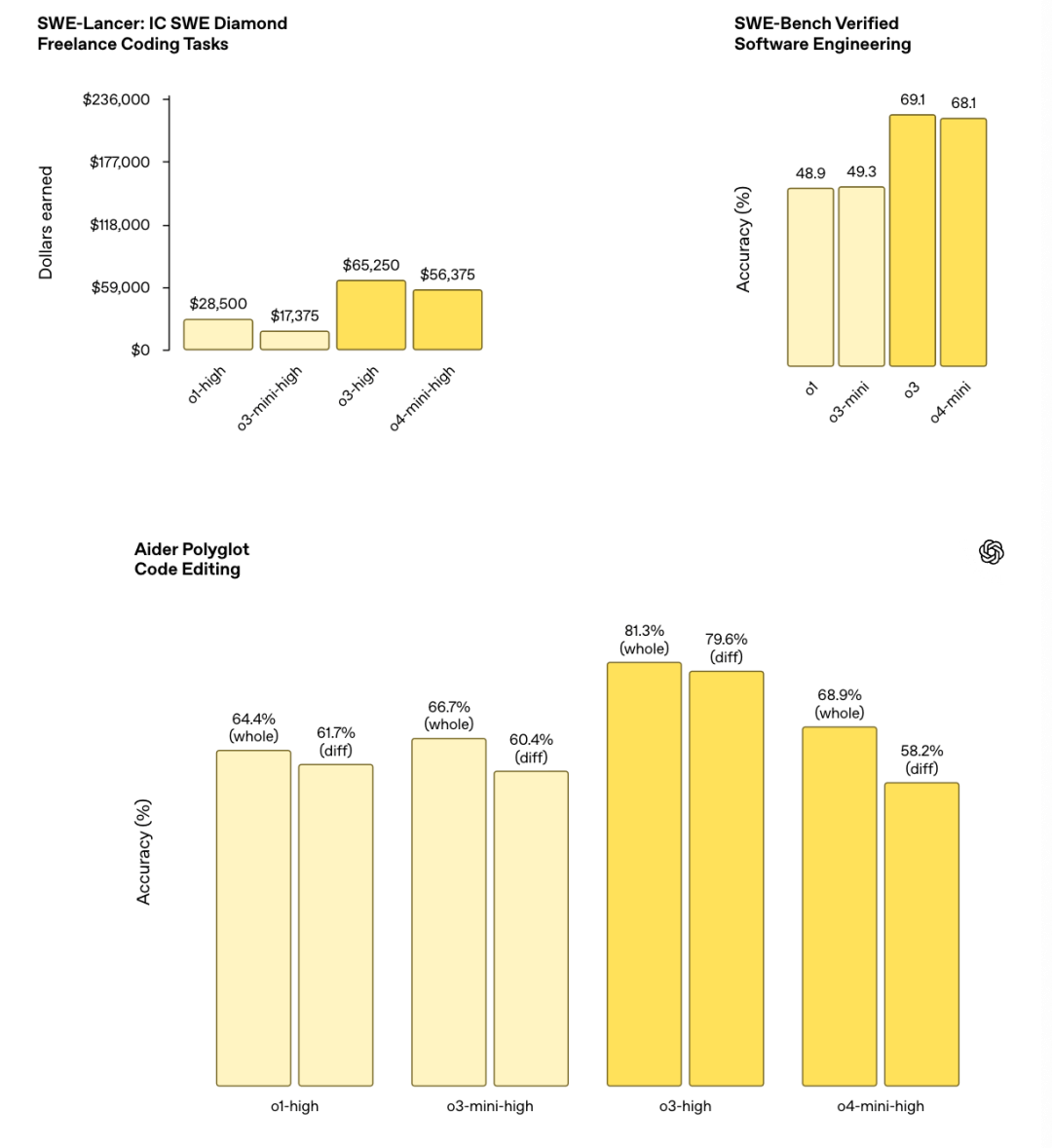

Assessment results of coding ability:

Evaluation results of instruction-following and agent tool usage:

Continuing “more computation = better performance”, OpenAI open-sources a lightweight programming agent

During the development of OpenAI o3, researchers observed that large-scale reinforcement learning exhibits the same trend as seen in the pre-training of the GPT series, where “more computation = better performance.”

By retracing the same scaling path, this time in reinforcement learning, they pushed both training compute and inference-time reasoning up by an order of magnitude and still saw significant performance gains. This confirms that the model’s performance keeps improving the more it is allowed to think.

At the same latency and cost as o1, o3 delivers higher performance in ChatGPT. The blog also notes that researchers have verified that performance continues to climb when the model is allowed to think for longer.

The researchers also trained the new model to use tools through reinforcement learning, which includes not only how to use the tools but also how to reason about when to use them. The new model can deploy tools according to the desired outcomes, enabling it to perform better in open-ended scenarios involving visual reasoning and multi-step workflows.

OpenAI has also shared a lightweight programming agent, Codex CLI, designed to maximize the reasoning capabilities of models such as o3 and o4-mini. Users can run it directly in the terminal. OpenAI plans to support more API models, including GPT-4.1.

Users can pass screenshots or low-fidelity sketches to the model and, combined with access to local code, obtain the benefits of multimodal reasoning directly from the command line. OpenAI believes this can connect the model with users and their computers. Starting today, Codex CLI is fully open source.

Meanwhile, OpenAI has launched a $1 million initiative to support engineering projects that use Codex CLI and OpenAI models. It will evaluate and accept grant applications, with grants awarded in increments of $25,000 in API credits.

Three major limitations remain: overly long reasoning chains, perceptual errors, and insufficient reliability.

However, the researchers also mentioned in the blog that image reasoning currently has the following limitations:

Overly Long Reasoning Chain: The model may perform redundant or unnecessary tool calls and image processing steps, resulting in an excessively long reasoning chain.

Perceptual Errors: The model may still make basic perceptual errors. Even if the tool invocation correctly advances the reasoning process, visual misunderstandings may still lead to incorrect final answers.

Reliability: The model may attempt different visual reasoning processes when trying to solve a problem multiple times, some of which may lead to incorrect results.

In terms of safety, OpenAI has rebuilt its safety training data and added new refusal prompts in areas such as biothreats (biosecurity risks), malware generation, and jailbreaks. As a result, o3 and o4-mini perform well on OpenAI's internal refusal benchmarks.

OpenAI has also developed system-level mitigations to flag dangerous prompts in frontier risk areas. Researchers trained a reasoning LLM monitor that works from human-written, interpretable safety specifications. When applied to biosecurity risks, the monitor successfully flagged about 99% of the conversations in human red-teaming exercises.

The researchers also evaluated o3 and o4-mini under the updated Preparedness Framework across its three tracked capability areas: biological and chemical, cybersecurity, and AI self-improvement. Based on these evaluations, they determined that o3 and o4-mini remain below the framework’s “High” threshold in all three categories.

Conclusion: Striving for Visual Reasoning, Advancing Towards Multimodal Reasoning

OpenAI o3 and o4-mini have significantly enhanced the visual reasoning capabilities of the models. These improvements in visual perception tasks enable them to solve problems that were previously challenging for earlier models, marking an important step forward in the development of multimodal reasoning.

OpenAI mentioned in their blog that they will combine the professional reasoning capabilities of the o-series with the natural conversation and tool-using abilities of the GPT series. In the future, this integration will enable the model to support seamless and natural conversations while proactively using tools to solve more complex problems.

In addition, researchers are continuing to refine the models’ ability to reason with images, aiming to make that reasoning more concise, less redundant, and more reliable.
