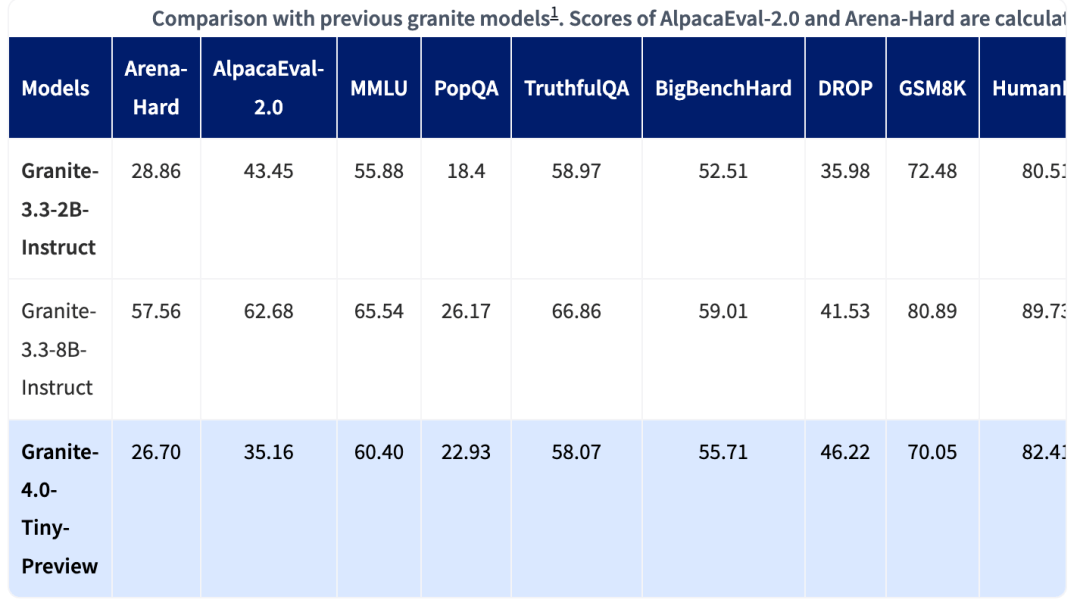

IBM releases the granite-4.0-tiny-7B-A1B-preview model

IBM has released the granite-4.0-tiny-7B-A1B-preview model, which adopts a new hybrid Mamba-2/Transformer architecture. The key to this architecture is its 9:1 layout: nine Mamba blocks for every Transformer block. The Mamba blocks efficiently capture global context and pass it to the attention layers, which parse local context in finer detail. The design aims to improve the model's context understanding so that it can handle complex inputs more efficiently. The release has also drawn wide discussion on social media, reflecting the industry's interest in this development.
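To make the ratio of nine Mamba blocks per Transformer block concrete, here is a minimal sketch of how such a layer schedule could be laid out. The function name `hybrid_layer_pattern`, the 40-layer depth, and the choice to place the attention block at the end of each group are illustrative assumptions, not details confirmed by IBM's release.

```python
from typing import List


def hybrid_layer_pattern(num_layers: int, mamba_per_attention: int = 9) -> List[str]:
    """Build a layer-type schedule for a hypothetical hybrid stack.

    Each group holds `mamba_per_attention` Mamba blocks followed by one
    attention (Transformer) block. The position of the attention block
    within the group is an assumption made for illustration.
    """
    group_size = mamba_per_attention + 1
    return [
        "attention" if (i + 1) % group_size == 0 else "mamba"
        for i in range(num_layers)
    ]


# Hypothetical depth of 40 layers, chosen only so the groups divide evenly.
pattern = hybrid_layer_pattern(40)
print(pattern.count("mamba"), pattern.count("attention"))
```

In this sketch the Mamba blocks in each group run first, so the attention block that closes the group sees a sequence already summarized by the preceding state-space layers.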

© Copyright Notice

The copyright of the article belongs to the author. Please do not reprint without permission.
