HunyuanCustom – Tencent’s open-source multimodal customized video generation framework

What is HunyuanCustom?

HunyuanCustom is a multimodal-driven customized video generation framework developed by Tencent’s Hunyuan team. It accepts images, audio, video, and text as conditioning inputs and generates high-quality videos that feature specified subjects and scenes. The framework combines a LLaVA-based text-image fusion module with an image ID enhancement module, and the team reports that it outperforms existing methods in identity consistency, realism, and text-video alignment. Beyond image-and-text conditioning, it also supports audio-driven and video-driven generation, which makes it suitable for applications such as virtual human advertising, virtual try-on, and video editing.

Key Features of HunyuanCustom

-

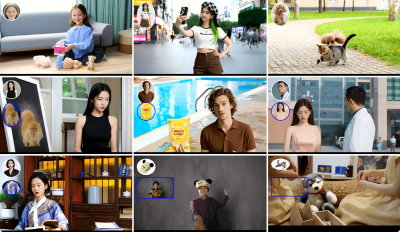

Single-Subject Video Customization: Generates videos based on input images and textual descriptions while maintaining subject identity consistency.

-

Multi-Subject Video Customization: Supports interactions between multiple subjects, enabling complex multi-character scenarios.

-

Audio-Driven Video Customization: Produces videos driven by audio input combined with textual descriptions, allowing dynamic and expressive animations.

-

Video-Driven Video Customization: Enables object replacement or addition in existing videos, ideal for video editing and augmentation.

-

Virtual Human Advertising & Try-On: Creates interactive videos between virtual humans and products or generates virtual try-on videos to enhance e-commerce experiences.

-

Flexible Scene Generation: Generates videos in various scenes based on textual prompts, supporting diverse content creation needs.

Technical Principles Behind HunyuanCustom

- Multimodal Fusion Modules:
  - Text-Image Fusion Module: Based on LLaVA, this module integrates identity features from images with textual context to improve multimodal comprehension.
  - Image ID Enhancement Module: Uses temporal concatenation and the video model’s temporal modeling ability to reinforce subject identity features and keep them consistent throughout the generated video.
- Audio-Driven Mechanism: The AudioNet module uses spatial cross-attention to inject audio features into video representations, achieving hierarchical alignment between audio and visual content.
- Video-Driven Mechanism: A video feature alignment module compresses the input video into latent space with a VAE, then aligns its features through a patchify module so that they match the latent noise variables.
- Identity Decoupling Module: A video condition module that decouples identity features and efficiently injects them into the latent space, enabling precise video-driven generation.
- Data Processing & Augmentation: Strict preprocessing, including video segmentation, text filtering, subject extraction, and data augmentation, ensures high-quality training data and improves model performance.
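The temporal concatenation used by the Image ID Enhancement Module can be illustrated at the level of tensor shapes. The following is a simplified sketch, not HunyuanCustom’s code: it assumes latents are stored as nested Python lists with layout (frames, channels, height, width), prepends the reference-image latent as an extra frame so that a video model’s temporal layers would see it next to the video frames, and then splits it off again. The helper names (`temporal_concat`, `split_identity_frame`, `make_frame`) are hypothetical.

```python
from typing import List

# Hypothetical layout: a frame latent is C x H x W, a video latent is T x C x H x W,
# both stored as nested lists of floats.
FrameLatent = List[List[List[float]]]
VideoLatent = List[FrameLatent]


def frame_shape(frame: FrameLatent) -> tuple:
    """Return (C, H, W) for a single frame latent."""
    return (len(frame), len(frame[0]), len(frame[0][0]))


def temporal_concat(identity: FrameLatent, video: VideoLatent) -> VideoLatent:
    """Prepend the reference-image latent as an extra frame along the time axis.

    Assumption for illustration: the identity latent must share the (C, H, W)
    shape of the video frames so temporal layers can treat it like any frame.
    """
    for frame in video:
        if frame_shape(frame) != frame_shape(identity):
            raise ValueError("identity latent and video frames must share (C, H, W)")
    return [identity] + list(video)


def split_identity_frame(joint: VideoLatent) -> tuple:
    """Separate the conditioning frame from the generated frames (hypothetical step)."""
    return joint[0], joint[1:]


def make_frame(c: int, h: int, w: int, value: float) -> FrameLatent:
    """Build a constant-valued frame latent for demonstration."""
    return [[[value] * w for _ in range(h)] for _ in range(c)]


if __name__ == "__main__":
    identity = make_frame(4, 2, 2, value=1.0)  # stand-in for the encoded reference image
    noisy_video = [make_frame(4, 2, 2, value=0.0) for _ in range(3)]
    joint = temporal_concat(identity, noisy_video)
    print(len(joint), frame_shape(joint[0]))  # 4 frames, each (4, 2, 2)
    cond, frames = split_identity_frame(joint)
    print(len(frames))  # 3 generated frames remain
```

Concatenating along time, rather than along channels, leaves the per-frame shape unchanged, so an existing video backbone can process the identity frame without architectural changes.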
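The audio-driven mechanism (spatial cross-attention that injects audio features into video representations) can be made concrete with a toy, single-head version. This is a hedged sketch, not the AudioNet implementation. It assumes the audio features are already aligned to video frames and share the video token dimension. It omits the learned query, key, and value projections, treating them as identity maps. It adds the attended audio back to the video tokens through a residual connection with a hypothetical `scale` parameter. Within each frame, every spatial token attends only to that frame’s audio tokens, which is one plausible reading of "spatial" cross-attention.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: List[Vector], keys: List[Vector], values: List[Vector]) -> List[Vector]:
    """Scaled dot-product attention: each query attends over all keys/values."""
    d = len(queries[0])
    dim_v = len(values[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim_v)])
    return out


def inject_audio(video_tokens: List[List[Vector]],
                 audio_tokens: List[List[Vector]],
                 scale: float = 1.0) -> List[List[Vector]]:
    """Per frame, let spatial video tokens attend to that frame's audio tokens.

    video_tokens[t] holds the spatial tokens of frame t; audio_tokens[t] holds
    the audio feature vectors assumed to be aligned with frame t. The result
    keeps the input shape because the attended audio is added residually.
    """
    if len(video_tokens) != len(audio_tokens):
        raise ValueError("need one group of audio tokens per video frame")
    result = []
    for frame, audio in zip(video_tokens, audio_tokens):
        attended = cross_attention(frame, audio, audio)
        result.append([[x + scale * a for x, a in zip(tok, att)]
                       for tok, att in zip(frame, attended)])
    return result


if __name__ == "__main__":
    # Two frames, two spatial tokens each, feature dimension 2 (toy sizes).
    video = [[[0.1, 0.2], [0.3, 0.4]], [[0.5, 0.6], [0.7, 0.8]]]
    # Frame 0 has two audio tokens, frame 1 has one (audio assumed pre-aligned to frames).
    audio = [[[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5]]]
    out = inject_audio(video, audio)
    print(len(out), len(out[0]), len(out[0][0]))  # shape is preserved
```

Restricting each frame’s queries to that frame’s audio keeps sound and motion temporally local; a real model would add learned projections, multiple heads, and possibly a learned gate in place of the fixed `scale`.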
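The video-driven mechanism depends on patchifying the VAE-compressed conditional video so that its tokens line up one-to-one with the tokens of the noisy latent. The sketch below illustrates only that shape bookkeeping, under stated assumptions. The VAE encoder is not modeled: the input is taken to already be a latent with layout (T, C, H, W). The 1x2x2 patch size and the 16-channel demo latent are illustrative choices. The element-wise addition in the hypothetical `align_condition` function stands in for whatever learned fusion the actual module uses.

```python
from typing import Callable, List

Latent = List[List[List[List[float]]]]  # T x C x H x W


def patchify(latent: Latent, pt: int, ph: int, pw: int) -> List[List[float]]:
    """Split a latent video into non-overlapping (pt, ph, pw) patches.

    Each patch is flattened channel-major into one token of length
    C * pt * ph * pw; tokens are ordered by time, then row, then column.
    """
    T, C = len(latent), len(latent[0])
    H, W = len(latent[0][0]), len(latent[0][0][0])
    if T % pt or H % ph or W % pw:
        raise ValueError("latent dimensions must be divisible by the patch size")
    tokens = []
    for t0 in range(0, T, pt):
        for h0 in range(0, H, ph):
            for w0 in range(0, W, pw):
                token = [
                    latent[t0 + dt][c][h0 + dh][w0 + dw]
                    for c in range(C)
                    for dt in range(pt)
                    for dh in range(ph)
                    for dw in range(pw)
                ]
                tokens.append(token)
    return tokens


def align_condition(noise_tokens: List[List[float]],
                    cond_tokens: List[List[float]]) -> List[List[float]]:
    """Hypothetical fusion: add condition tokens to noise tokens position by position."""
    if len(noise_tokens) != len(cond_tokens) or any(
            len(n) != len(c) for n, c in zip(noise_tokens, cond_tokens)):
        raise ValueError("condition and noise tokens must have identical shapes")
    return [[a + b for a, b in zip(n, c)] for n, c in zip(noise_tokens, cond_tokens)]


def make_latent(T: int, C: int, H: int, W: int,
                fn: Callable[[int, int, int, int], float]) -> Latent:
    """Build a T x C x H x W latent whose values come from fn(t, c, h, w)."""
    return [[[[fn(t, c, h, w) for w in range(W)] for h in range(H)]
             for c in range(C)] for t in range(T)]


if __name__ == "__main__":
    # Stand-in for a VAE-encoded conditional video: 4 latent frames, 16 channels, 8x8 grid.
    cond = make_latent(4, 16, 8, 8, lambda t, c, h, w: 0.01 * (t + c + h + w))
    noise = make_latent(4, 16, 8, 8, lambda t, c, h, w: 0.0)
    fused = align_condition(patchify(noise, 1, 2, 2), patchify(cond, 1, 2, 2))
    print(len(fused), len(fused[0]))  # 64 tokens, each of length 16 * 1 * 2 * 2 = 64
```

Because both latents go through the same patchify step, the condition and noise token sequences have identical length and token size, which is what makes a position-by-position fusion possible.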

Project Links for HunyuanCustom

- Official Website: https://hunyuancustom.github.io/
- GitHub Repository: https://github.com/Tencent/HunyuanCustom
- Technical Paper (arXiv): https://arxiv.org/pdf/2505.04512v1

Application Scenarios of HunyuanCustom

- Virtual Human Advertising: Generate compelling promotional videos where virtual humans interact with products to boost engagement.
- Virtual Try-On: Allow users to upload a photo and see themselves wearing different outfits in generated videos, enhancing the online shopping experience.
- Video Editing: Replace or add objects in existing videos, offering greater flexibility and creative control in post-production.
- Audio-Driven Animation: Generate synchronized video animations based on audio input, suitable for virtual livestreaming or animated content creation.
- Educational Videos: Combine text and images to automatically generate teaching videos, improving educational delivery and engagement.
