DICE-Talk – An Emotional Dynamic Portrait Generation Framework Jointly Launched by Fudan University and Tencent Youtu

What is DICE-Talk?

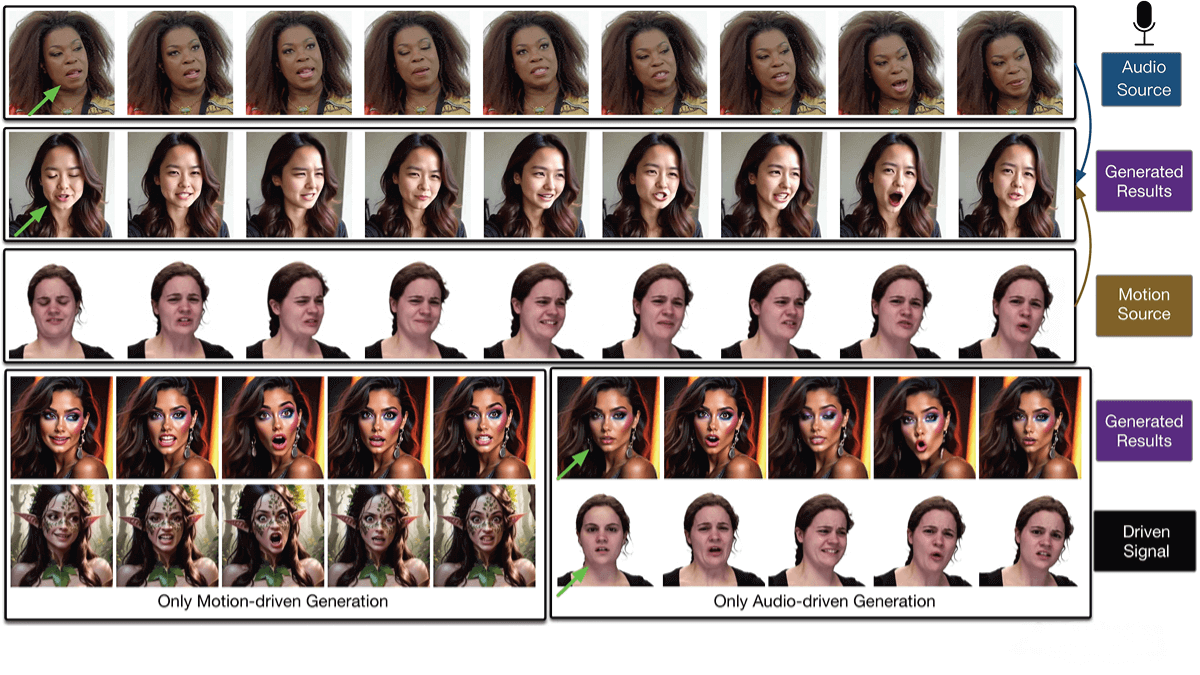

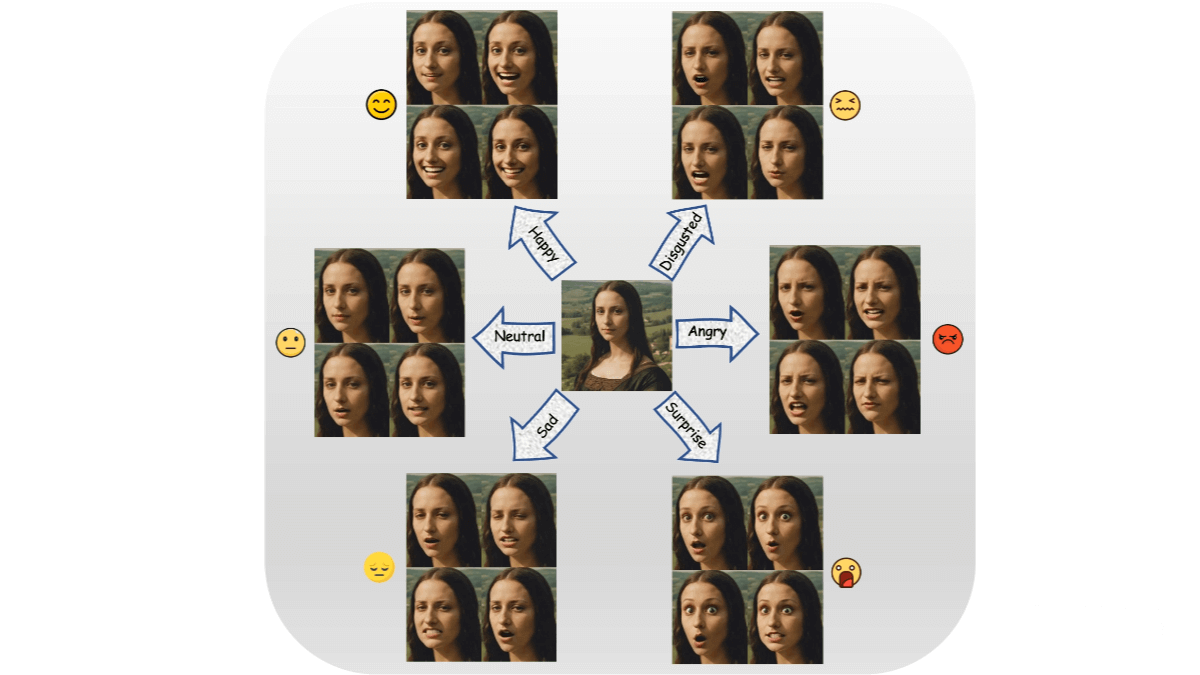

DICE-Talk is a framework for generating emotional dynamic portraits, jointly developed by Fudan University and Tencent Youtu Lab. It generates dynamic portrait videos with vivid emotional expressions while keeping the subject's identity consistent. Its Emotion Association Enhancement Module draws on an emotion library to capture the relationships between different emotions, which improves the accuracy and diversity of the generated emotions. The framework also adds an emotion discrimination objective to keep the emotion consistent throughout generation. In experiments on the MEAD and HDTF datasets, DICE-Talk outperforms existing methods in emotional accuracy, lip synchronization, and visual quality.

Key Features of DICE-Talk

- Emotion-Driven Dynamic Portrait Generation: Generates dynamic portrait videos with a specified emotional expression from input audio and a reference image.
- Identity Preservation: Maintains the identity features of the reference image during video generation, preventing identity leakage or confusion.
- High-Quality Video Output: Achieves high standards in visual quality, lip synchronization, and emotional expression.
- Strong Generalization: Adapts to identity-emotion combinations that were not seen during training.
- User Control: Lets users specify a target emotion to control the emotional expression in the generated video, enabling a high degree of customization.
- Multimodal Input: Supports multiple input modalities, including audio, video, and reference images.

Technical Principles of DICE-Talk

- Disentangling Identity and Emotion: A cross-modal attention mechanism jointly models audio and visual emotional cues and represents emotions as identity-independent Gaussian distributions. The emotion embeddings are trained with contrastive learning (e.g., InfoNCE loss), which clusters features of the same emotion and separates features of different emotions.
- Emotion Association Enhancement: The emotion library is a learnable module that stores feature representations of various emotions. It learns the relationships between emotions through vector quantization and attention-based feature aggregation, which helps the model generate a wider range of emotions.
- Emotion Discrimination Objective: An emotion discriminator is trained jointly with the diffusion model during video generation. It pushes the generated video to match the target emotion while preserving visual quality and accurate lip synchronization.
- Diffusion-Based Framework: Generation starts from Gaussian noise, which is progressively denoised into the target video. A variational autoencoder (VAE) maps video frames into a latent space, where the diffusion model gradually adds Gaussian noise and then removes it. During denoising, a cross-modal attention mechanism incorporates the reference image, audio features, and emotion features to guide the video generation.
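To make the contrastive training mentioned above more concrete, here is a minimal sketch of an InfoNCE-style loss for a single anchor embedding. It is illustrative only and is not taken from the DICE-Talk code: the function names, the temperature of 0.1, and the toy three-dimensional embeddings are all assumptions, and it uses plain point embeddings rather than the Gaussian distributions the framework is described as using.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def cosine(a, b):
    """Cosine similarity between two vectors."""
    return sum(x * y for x, y in zip(l2_normalize(a), l2_normalize(b)))


def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE loss for one anchor: -log(exp(s_pos/t) / sum_j exp(s_j/t)).

    The positive shares the anchor's emotion; the negatives carry other
    emotions. The temperature value is an assumption for this sketch.
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    # Log-sum-exp with the maximum subtracted for numerical stability.
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_denominator - logits[0]


# Hypothetical toy embeddings, made up for illustration.
happy_a = [1.0, 0.1, 0.0]
happy_b = [0.9, 0.2, 0.1]
sad = [-1.0, 0.0, 0.2]
angry = [0.0, 1.0, -0.5]

# Same-emotion pair as the positive: the loss is small.
well_clustered = info_nce(happy_a, happy_b, [sad, angry])
# A different emotion wrongly treated as the positive: the loss is large.
badly_clustered = info_nce(happy_a, sad, [happy_b, angry])
print(well_clustered < badly_clustered)
```

Minimizing this loss over many anchors pulls embeddings of the same emotion together and pushes embeddings of different emotions apart, which is the clustering behavior described in the first item of the list above.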

Project Resources

- Official Website: https://toto222.github.io/DICE-Talk/
- GitHub Repository: https://github.com/toto222/DICE-Talk
- arXiv Paper: https://arxiv.org/pdf/2504.18087

Application Scenarios of DICE-Talk

- Digital Humans and Virtual Assistants: Enhances emotional expression in digital avatars and virtual assistants, making human-computer interactions more natural and engaging.
- Film and Animation Production: Facilitates fast generation of emotion-rich portrait videos for special effects and animation, improving efficiency and reducing costs.
- Virtual and Augmented Reality: Enables emotionally responsive virtual characters in VR/AR settings, enhancing immersion and emotional resonance.
- Online Education and Training: Creates emotionally engaging teaching videos, making learning more lively and effective.
- Mental Health Support: Develops emotion-aware virtual characters for use in psychological therapy and emotional support, helping users express and understand emotions better.
