SurfSense – An open-source AI research assistant that seamlessly connects personal knowledge bases with global data sources

What is SurfSense?

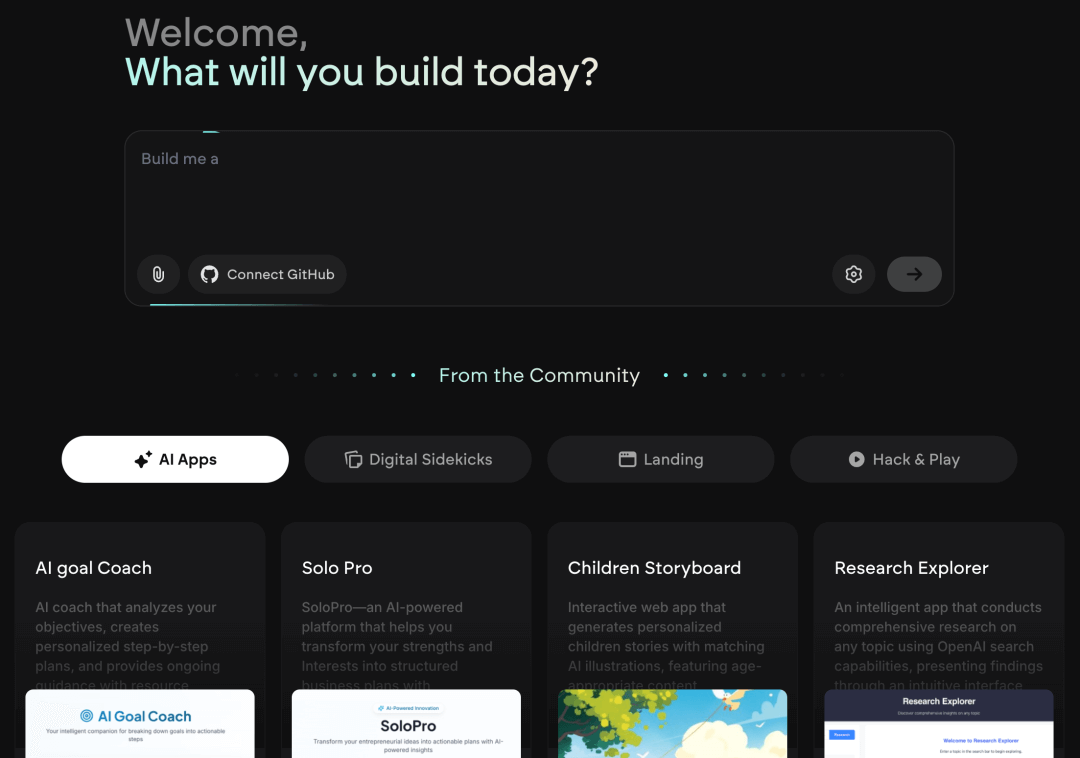

SurfSense is an open-source AI research assistant designed for flexible integration and powerful personal knowledge management—positioned as a more extensible alternative to tools like NotebookLM and Perplexity. It connects to various external data sources such as search engines, Slack, Notion, YouTube, GitHub, and supports file uploads in multiple formats. By aggregating content into a personalized knowledge base, SurfSense enables semantic and full-text search, along with natural language interaction, helping users retrieve and reference information efficiently.

Key Features of SurfSense

-

Advanced Search Capabilities:

Combines semantic and full-text search to help users quickly locate relevant content from their knowledge base. -

Multi-Format File Support:

Users can upload and organize diverse content formats, including documents, PDFs, and images. -

Natural Language Interaction:

Ask questions in natural language and receive context-aware answers with direct references from your stored materials. -

External Data Integration:

Seamlessly connects with platforms such as Slack, Notion, YouTube, GitHub, and search engines for richer content ingestion. -

Privacy & Local Deployment:

SurfSense supports running local LLMs and self-hosting options, allowing users to maintain control of their data. -

Browser Extension:

A browser extension lets users save web content directly, even from login-restricted pages. -

Document Management:

Offers intuitive file management and supports multi-document interaction for streamlined research workflows.

How SurfSense Works (Technical Overview)

-

RAG (Retrieval-Augmented Generation):

SurfSense uses a dual-layer RAG architecture that combines vector embeddings and full-text indexing. It retrieves the most relevant document fragments and feeds them as context into an LLM to generate responses. The search results are optimized using Reciprocal Rank Fusion (RRF) to improve relevance. -

Vector Embedding & Indexing:

Documents are embedded into a vector space using pgvector (a PostgreSQL vector extension), enabling fast semantic similarity searches. -

Hierarchical Indexing:

A two-layer indexing structure is used—layer one quickly filters relevant documents, and layer two refines results with deeper fragment analysis. -

Backend Stack:

Built with FastAPI for a modern, high-performance web API. Uses PostgreSQL with pgvector for vector search. LLM integration is handled via LangChain and LiteLLM, supporting multiple language models. -

Frontend Stack:

Developed using Next.js and React for a dynamic user interface. Tailwind CSS and Framer Motion provide customizable design and animation effects. -

Browser Extension:

Built with Plasmo, the extension works across multiple browsers and allows users to easily clip and save online content.

Project Links

-

Official Website: https://www.surfsense.net/

-

GitHub Repository: https://github.com/MODSetter/SurfSense

Use Cases for SurfSense

-

Personal Knowledge Management:

Organize notes, documents, and references into a centralized, searchable system for long-term retention. -

Academic Research:

Streamline literature reviews, research compilation, and report generation by integrating academic content and documentation. -

Enterprise Knowledge Sharing:

Upload internal resources and promote collaborative access across teams to improve knowledge flow and project efficiency. -

Content Creation:

Collect inspiration, organize source materials, and draft creative content with contextual support from your knowledge base. -

Information Curation:

Save and structure information from the web or other platforms to create a coherent, easily retrievable digital library.

Related Posts