What is Sidekick CLI?

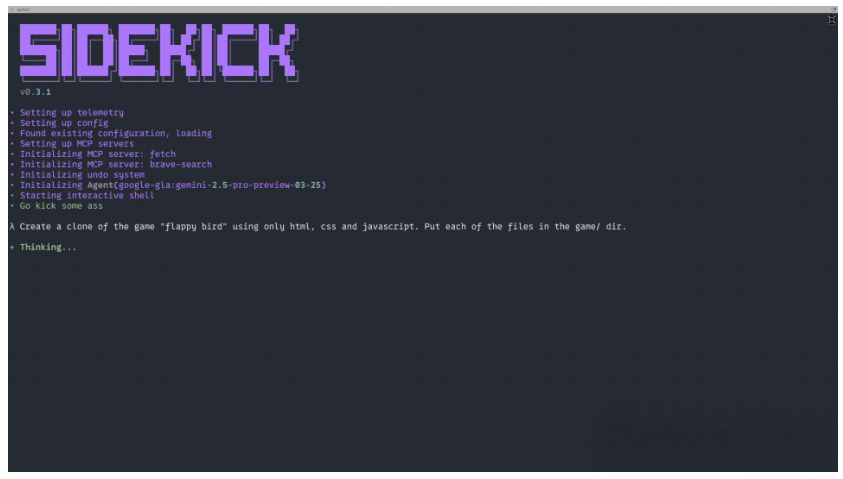

Sidekick is an open-source command-line interface (CLI) AI tool that gives developers access to multiple large language models (LLMs) to boost productivity. It aims to be an open-source alternative to tools such as Claude Code, Copilot, Windsurf, and Cursor. Its core features include support for several LLM providers (Anthropic, OpenAI, and Google Gemini), compatibility with the Model Context Protocol (MCP), and slash commands such as `/undo` and `/model` for controlling a session. Sidekick also lets users customize its behavior per project and track token usage and costs. By default, it collects telemetry via Sentry to analyze usage patterns; this can be turned off.

Key Features

- Multi-Model Support: Seamlessly switch between different LLMs (e.g., GPT-4, Claude-3) within the same session.
- MCP (Model Context Protocol) Integration: Enables enhanced AI capabilities via external tool and data integrations, such as fetching code from GitHub.
- Project-Aware Customization: Define your tech stack and preferences in a `SIDEKICK.md` file placed in your project root, and Sidekick adapts accordingly.
- One-Click VPS Deployment: Simplified VPS setup on Ubuntu 20.04+, including SSH, Docker, Traefik, and HTTPS configuration.
- Token & Cost Tracking: Monitor token usage and LLM costs in real time with budget limit support.
- Undo Support: Easily revert the last AI modification with the `/undo` command.
- Command Confirmation Control: Use `/yolo` to skip confirmation prompts for faster workflows.
- Telemetry Toggle: Basic telemetry (via Sentry) is enabled by default but can be disabled with `--no-telemetry` for privacy.
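Session commands like `/model`, `/undo`, and `/yolo` follow the common slash-command pattern of interactive CLIs. The following is a minimal sketch of how such commands might be dispatched, not Sidekick's actual implementation. It assumes a hypothetical `Session` class that holds the active model, an in-memory stand-in for files, a stack of snapshots taken before each AI edit, and a confirmation flag that `/yolo` is assumed to toggle.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Session:
    """Hypothetical per-session state for an interactive AI coding CLI."""
    model: str = "openai:gpt-4"  # hypothetical "provider:model" identifier
    confirm_commands: bool = True  # assumed to be flipped by /yolo
    # In-memory stand-in for files on disk, keyed by path.
    files: Dict[str, str] = field(default_factory=dict)
    # Snapshots taken before each AI edit: (path, previous contents or None if new).
    undo_stack: List[Tuple[str, Optional[str]]] = field(default_factory=list)

    def apply_edit(self, path: str, new_contents: str) -> None:
        """Snapshot the current contents, then apply an AI-proposed edit."""
        self.undo_stack.append((path, self.files.get(path)))
        self.files[path] = new_contents


def handle_command(session: Session, line: str) -> str:
    """Dispatch one slash command and return a status message."""
    name, _, arg = line.strip().partition(" ")
    arg = arg.strip()
    if name == "/model":
        if not arg:
            return f"Current model: {session.model}"
        session.model = arg
        return f"Switched to {arg}"
    if name == "/undo":
        if not session.undo_stack:
            return "Nothing to undo"
        path, previous = session.undo_stack.pop()
        if previous is None:
            session.files.pop(path, None)  # the edit created the file, so remove it
        else:
            session.files[path] = previous
        return f"Reverted {path}"
    if name == "/yolo":
        session.confirm_commands = not session.confirm_commands
        state = "on" if session.confirm_commands else "off"
        return f"Confirmation prompts {state}"
    return f"Unknown command: {name}"
```

Keeping a stack of snapshots, rather than a single "last state", lets repeated `/undo` calls walk back through several AI edits in order.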

Technical Overview

1. CLI-First Design

Built entirely around the command-line experience, Sidekick CLI is optimized for developers who prefer working in the terminal over traditional IDEs.

2. Flexible LLM Integration

Sidekick allows developers to choose from various AI providers, reducing dependency on any single vendor and letting them pick the model best suited to each task.
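A common way to avoid locking into one vendor is to hide each provider behind a shared interface and route requests by a model identifier. Below is a minimal sketch of that pattern. The `ChatProvider` protocol, the `provider:model` identifier format, and the `FakeAnthropic`/`FakeOpenAI` stand-ins are hypothetical; real adapters would call each vendor's SDK, and Sidekick's own abstraction may differ.

```python
from typing import Dict, Protocol


class ChatProvider(Protocol):
    """Minimal interface every provider adapter must satisfy (hypothetical)."""

    def complete(self, model: str, prompt: str) -> str: ...


class FakeAnthropic:
    """Stand-in for an Anthropic adapter; a real one would call the vendor SDK."""

    def complete(self, model: str, prompt: str) -> str:
        return f"[anthropic/{model}] {prompt}"


class FakeOpenAI:
    """Stand-in for an OpenAI adapter."""

    def complete(self, model: str, prompt: str) -> str:
        return f"[openai/{model}] {prompt}"


# Registry mapping a provider prefix to its adapter.
PROVIDERS: Dict[str, ChatProvider] = {
    "anthropic": FakeAnthropic(),
    "openai": FakeOpenAI(),
}


def complete(model_id: str, prompt: str) -> str:
    """Route a 'provider:model' identifier to the matching adapter."""
    provider_name, sep, model = model_id.partition(":")
    if not sep or provider_name not in PROVIDERS:
        raise ValueError(f"Unknown provider in model id: {model_id!r}")
    return PROVIDERS[provider_name].complete(model, prompt)
```

Adding another provider then only means registering one more adapter; callers keep using `complete()` unchanged.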

3. Model Context Protocol (MCP)

MCP lets Sidekick communicate with external tools and services to enrich the model's context, for example by fetching relevant code or files from GitHub.
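MCP messages are JSON-RPC 2.0 messages, and a client asks a server to run a tool with the `tools/call` method. The sketch below only builds such a request and pulls the text out of a response. The transport (stdio or HTTP) is omitted, and the tool name `get_file_contents` with `owner`, `repo`, and `path` arguments is a hypothetical example; real tool names and argument schemas come from the server's `tools/list` response.

```python
import itertools
import json
from typing import Any, Dict

_ids = itertools.count(1)  # JSON-RPC request ids must be unique per connection


def make_tool_call(name: str, arguments: Dict[str, Any]) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(request)


def extract_text(response_json: str) -> str:
    """Concatenate the text content blocks from a `tools/call` result."""
    response = json.loads(response_json)
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "MCP error"))
    blocks = response["result"].get("content", [])
    return "".join(b["text"] for b in blocks if b.get("type") == "text")
```

For instance, `make_tool_call("get_file_contents", {"owner": "geekforbrains", "repo": "sidekick-cli", "path": "README.md"})` yields a request the client would send to the server; the text it gets back can then be added to the model's context.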

4. Project-Level Intelligence

With SIDEKICK.md, you can specify your project’s language, frameworks, and style guidelines. Sidekick then tunes its AI prompts to match your context.
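A minimal sketch of how a project guide like this can be folded into a model's system prompt. It assumes the file is read verbatim and appended to a hypothetical `BASE_PROMPT`; searching upward from the working directory toward the project root is also an assumption. Sidekick's exact prompt construction may differ.

```python
from pathlib import Path
from typing import Optional

# Hypothetical base instructions; not Sidekick's actual system prompt.
BASE_PROMPT = "You are a helpful coding assistant working in the user's terminal."


def find_project_guide(start: Path, filename: str = "SIDEKICK.md") -> Optional[Path]:
    """Walk up from `start` toward the filesystem root looking for `filename`."""
    start = start.resolve()  # resolve so that .parents covers the full path
    for directory in [start, *start.parents]:
        candidate = directory / filename
        if candidate.is_file():
            return candidate
    return None


def build_system_prompt(start: Path) -> str:
    """Append the project guide, if one is found, to the base system prompt."""
    guide = find_project_guide(start)
    if guide is None:
        return BASE_PROMPT
    context = guide.read_text(encoding="utf-8").strip()
    return f"{BASE_PROMPT}\n\nProject guidelines from {guide.name}:\n{context}"
```

Searching upward means the guide is still picked up when the CLI is launched from a subdirectory of the project.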

Project Info & Installation

- GitHub Repository: https://github.com/geekforbrains/sidekick-cli
- PyPI Package: https://pypi.org/project/sidekick-cli/

Installation Steps

- Ensure Python 3.8+ and pip are installed.
- Install via pip: `pip install sidekick-cli`
- On first run, you’ll be prompted to configure your LLM provider. The config file is stored at `~/.config/sidekick.json`.
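To inspect that configuration from a script, a minimal sketch follows. It assumes only that the file at the path above is a JSON object. The key names Sidekick writes are not described here, so the example lists the top-level keys rather than guessing at a schema.

```python
import json
from pathlib import Path
from typing import Any, Dict, Optional

CONFIG_PATH = Path.home() / ".config" / "sidekick.json"


def load_config(path: Path = CONFIG_PATH) -> Optional[Dict[str, Any]]:
    """Return the parsed config, or None if Sidekick has not been configured yet."""
    if not path.is_file():
        return None
    with path.open(encoding="utf-8") as fh:
        data = json.load(fh)
    if not isinstance(data, dict):
        raise ValueError(f"Expected a JSON object in {path}")
    return data


if __name__ == "__main__":
    config = load_config()
    if config is None:
        print(f"No config at {CONFIG_PATH}; run Sidekick once to create it.")
    else:
        print("Top-level keys:", ", ".join(sorted(config)))
```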

Use Cases

- AI-Powered Coding: Generate, debug, and understand code using natural language across multiple LLMs.
- Model Switching: Dynamically toggle between models to suit different coding tasks or language capabilities.
- Context-Aware Development: Customize the assistant’s behavior based on your project’s specific technologies and conventions.
- Rapid VPS Setup: Great for spinning up dev environments quickly with secure access.
- Cost Monitoring: Keep LLM usage within budget by actively tracking tokens and cost.
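Cost tracking comes down to multiplying token counts by per-token prices and comparing the running total against a limit. The sketch below illustrates that bookkeeping, not Sidekick's implementation. The model names `model-a`/`model-b`, their per-million-token prices, and the `BudgetExceeded` exception are all hypothetical; real prices vary by provider and change over time.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Hypothetical USD prices per 1M tokens: (input, output). Not real price data.
PRICES: Dict[str, Tuple[float, float]] = {
    "model-a": (3.00, 15.00),
    "model-b": (0.50, 1.50),
}


class BudgetExceeded(RuntimeError):
    """Raised when spending has reached the configured budget."""


@dataclass
class CostTracker:
    budget_usd: float
    input_tokens: int = 0
    output_tokens: int = 0
    spent_usd: float = 0.0

    def record(self, model: str, input_tokens: int, output_tokens: int) -> float:
        """Add one completed request's usage and return its cost in USD."""
        in_price, out_price = PRICES[model]
        cost = (input_tokens * in_price + output_tokens * out_price) / 1_000_000
        self.input_tokens += input_tokens
        self.output_tokens += output_tokens
        self.spent_usd += cost
        return cost

    def check_budget(self) -> None:
        """Call before each request; refuse to continue once the budget is used up."""
        if self.spent_usd >= self.budget_usd:
            raise BudgetExceeded(
                f"Spent ${self.spent_usd:.4f} of ${self.budget_usd:.2f} budget"
            )
```

Usage is only known after a response arrives, so checking before each request means the final request can overshoot the limit slightly. A stricter design would estimate a request's cost up front.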
