Core Concepts

AI Agents:

AI Agents are autonomous software systems built upon AI technologies that can independently perceive, make decisions, and execute tasks within specific environments. Their key features include:

- Autonomous Operation: AI Agents can complete tasks independently without real-time human supervision. They support automatic task initiation, make decisions based on built-in intelligent algorithms, and are capable of 24/7 uninterrupted operation.

- Goal-Oriented: Each AI Agent operates around a specific objective. It breaks down complex tasks into smaller subtasks, intelligently sequences their execution, and continuously evaluates performance quality.

- Environmental Perception: AI Agents can interpret diverse types of information, such as text, voice, and images. They adjust their behavior in real-time based on contextual inputs and can integrate with other systems for collaborative tasks.

- Continuous Improvement: AI Agents learn from each task execution, optimizing themselves through the analysis of both successful and failed cases. They adapt to user preferences over time, becoming increasingly intelligent, especially in applications like recommendation systems.
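The perceive, decide, and act cycle described above can be sketched in a few lines. This is a minimal illustration, not an implementation from the source: the `ThermostatAgent` class, its fields, and the temperature values are all hypothetical, and the "continuous improvement" feature is reduced to recording a history that later logic could learn from.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatAgent:
    """Toy single-purpose agent (hypothetical): keep a room near a target temperature."""
    target_c: float
    tolerance_c: float = 0.5
    history: list = field(default_factory=list)  # past (reading, action) pairs

    def perceive(self, sensor_reading_c: float) -> float:
        # Environmental perception: here just one numeric sensor reading.
        return sensor_reading_c

    def decide(self, reading_c: float) -> str:
        # Goal-oriented decision: compare the observation with the objective.
        if reading_c < self.target_c - self.tolerance_c:
            return "heat"
        if reading_c > self.target_c + self.tolerance_c:
            return "cool"
        return "idle"

    def act(self, reading_c: float, action: str) -> str:
        # Record the outcome; a learning agent could analyze this history later.
        self.history.append((reading_c, action))
        return action

    def step(self, sensor_reading_c: float) -> str:
        # One autonomous cycle, with no human in the loop.
        reading = self.perceive(sensor_reading_c)
        return self.act(reading, self.decide(reading))


agent = ThermostatAgent(target_c=21.0)
actions = [agent.step(t) for t in (18.0, 21.2, 24.5)]
print(actions)  # ['heat', 'idle', 'cool']
```

A production agent would replace `decide` with a model call or planner, but the loop structure stays the same.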

Agentic AI:

Distinct from individual AI Agents, Agentic AI refers to a new type of intelligent architecture: a collaborative system composed of multiple AI Agents that work together through coordination mechanisms to tackle complex tasks. Key features include:

- Multi-Agent Collaborative Architecture: Specialized agents divide and conquer tasks, leveraging intelligent communication mechanisms to efficiently execute complex objectives.

- Advanced Task Planning Capabilities: These systems support recursive task decomposition, multi-path reasoning, and dynamic adjustment strategies to handle complicated problem-solving scenarios.

- Distributed Memory System: Agentic AI combines global shared memory with agent-specific private memory to support inter-agent knowledge retrieval and storage.

- Meta-Agent Coordination Mechanism: A central scheduler oversees task distribution, quality control, and sandbox security measures to ensure reliable and safe collaboration among agents.

- Self-Evolution Capability: Agents within the system share experiences and continuously optimize their cooperation strategies, leading to overall performance improvement.
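As a rough sketch of how shared memory, private memory, and a coordinating meta-agent might fit together, consider the example below. Everything in it is an assumption made for illustration: the `Agent` and `Coordinator` classes, the `forecast` and `energy` skills, and the single "non-empty result" quality check stand in for far richer mechanisms in a real system.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    name: str
    skill: Callable[[str, dict], str]        # (subtask, shared memory) -> result
    private_memory: list = field(default_factory=list)

    def run(self, subtask: str, shared: dict) -> str:
        result = self.skill(subtask, shared)
        self.private_memory.append(subtask)  # agent-specific private memory
        shared[self.name] = result           # publish to global shared memory
        return result


class Coordinator:
    """Hypothetical meta-agent: routes subtasks and applies a simple quality gate."""

    def __init__(self, agents: dict):
        self.agents = agents
        self.shared_memory: dict = {}

    def run(self, plan: list) -> dict:
        for role, subtask in plan:
            result = self.agents[role].run(subtask, self.shared_memory)
            if not result:                   # minimal quality control
                raise ValueError(f"{role} returned an empty result")
        return self.shared_memory


def forecast(subtask: str, shared: dict) -> str:
    return "cold night expected"             # stubbed forecast


def energy(subtask: str, shared: dict) -> str:
    # Reads the weather agent's output from shared memory.
    return f"preheat before peak pricing ({shared['weather']})"


agents = {"weather": Agent("weather", forecast), "energy": Agent("energy", energy)}
coordinator = Coordinator(agents)
shared = coordinator.run([("weather", "get forecast"), ("energy", "plan heating")])
print(shared["energy"])  # preheat before peak pricing (cold night expected)
```

The plan here is a fixed list; recursive task decomposition and dynamic re-planning would replace it in a fuller design.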

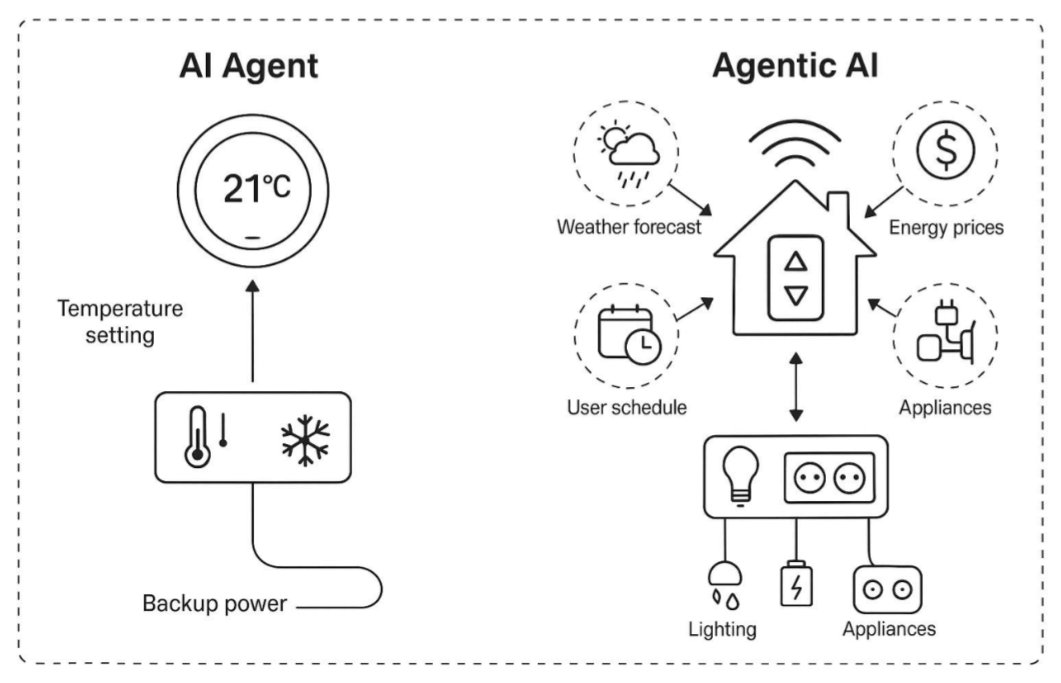

The research team illustrates the difference between AI Agents and Agentic AI using a smart home system case study.

This comparison chart vividly illustrates the evolution of AI technology from single-function intelligence to systemic intelligence. On the left, the AI Agent (e.g., a smart thermostat) represents a basic intelligent unit focused on independently executing specific tasks (such as temperature control), with limited self-learning and rule-based response capabilities. On the right, the Agentic AI system forms a collaborative network of multiple agents (integrating modules like weather forecasting, energy management, and security), enabling cross-domain optimization through real-time data sharing and dynamic decision-making (e.g., automatically adjusting home environments based on electricity pricing and weather forecasts).

The fundamental difference lies in their roles: the former is a task executor, while the latter is a system-level decision-maker. This shift marks AI’s progression from isolated tools to organization-level intelligent ecosystems, laying the groundwork for complex scenarios such as smart cities and the industrial Internet of Things.

Application Scenarios

AI Agent Application Scenarios:

- Customer Service Automation: Intelligent customer service systems can quickly and accurately respond to common questions based on preset rules and user inputs, improving customer satisfaction.

- Schedule Management: Automatically analyzes users’ calendars to reasonably arrange meetings and events, avoiding scheduling conflicts.

- Data Summarization: Automatically extracts and summarizes key information from large volumes of data to generate concise reports.

- Email Filtering: Automatically classifies and prioritizes emails to help users manage high email traffic.

- Personalized Content Recommendation: Recommends personalized content such as news, music, or videos based on user behavior and preferences.

- Automated Document Processing: Automatically extracts and processes information from documents to generate summaries or reports.

Agentic AI Application Scenarios:

-

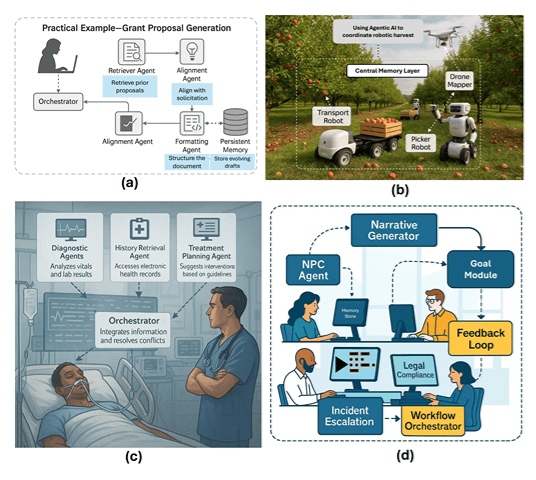

Research Automation: Automates research workflows, including literature retrieval, experimental design, and data analysis.

-

Robot Coordination: Coordinates multi-robot systems to accomplish complex tasks such as logistics delivery and environmental monitoring.

-

Medical Decision Support: Provides services for medical diagnosis, treatment recommendation, and patient monitoring.

-

Intelligent Transportation Systems: Optimizes traffic flow and manages coordination and scheduling of autonomous vehicles.

-

Supply Chain Management: Optimizes resource allocation, logistics scheduling, and inventory management within the supply chain.

-

Smart Energy Management: Optimizes energy consumption and manages smart grids and distributed energy resources.

Challenges

AI Agents: They inherit the limitations of large models (hallucinations, shallow reasoning) and lack long-term memory and proactive goal pursuit. These limitations show up in the following ways:

- Lack of causal understanding: AI Agents struggle to distinguish correlation from causation, resulting in poor performance when facing new situations.

- Inherent LLM limitations: Issues such as hallucinations, prompt sensitivity, shallow reasoning, knowledge cut-off, and bias lead to inaccurate information generation, affecting reliability.

- Incomplete agent attributes: AI Agents lack sufficient autonomy, proactivity, and social abilities, limiting their application scope and functionality.

- Lack of long-term memory: Without persistent memory, agents struggle with multi-step planning and with recovering from task failures, which restricts their adaptability in complex environments.

Agentic AI: These systems face challenges such as error propagation among multiple agents, a lack of unified standards, and security and ethical risks. These challenges show up in the following ways:

- Error propagation: Errors from a single agent can be amplified through collaborative chains, contaminating overall system decisions.

- System stability: Dynamic multi-agent interactions often cause decision oscillations, with task success rates dropping significantly as the number of agents increases.

- Protocol fragmentation: Different frameworks use independent communication protocols (e.g., gRPC/JSON-RPC), leading to poor cross-platform interoperability.

- Verification difficulty: Explosive growth in multi-agent interaction paths means current testing tools can only verify simple collaboration chains.

- Scalability bottlenecks: Efficiency sharply declines when coordinating more than seven agents, with hardware performance becoming a hard constraint for large-scale deployment.
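A back-of-the-envelope calculation helps show why error propagation and verification get harder as agents are added. The sketch below rests on two simplifying assumptions that do not come from the source: agents run in a sequential chain and fail independently, and every pair of agents is a potential communication channel. The 0.95 per-agent success rate is an illustrative number, not a measured one.

```python
def chain_success_rate(per_agent_success: float, n_agents: int) -> float:
    """Probability that every agent in a sequential chain succeeds,
    assuming independent failures (an illustrative simplification)."""
    if not 0.0 <= per_agent_success <= 1.0:
        raise ValueError("per_agent_success must be between 0 and 1")
    return per_agent_success ** n_agents


def pairwise_channels(n_agents: int) -> int:
    """Number of distinct agent pairs that could exchange messages."""
    return n_agents * (n_agents - 1) // 2


# Print chain reliability and channel count for a few system sizes.
for n in (1, 3, 7, 10):
    print(n, round(chain_success_rate(0.95, n), 3), pairwise_channels(n))
```

Under these assumptions, a chain of seven agents that are each 95% reliable completes successfully only about 70% of the time, while the number of pairwise channels to test grows quadratically (21 for seven agents). Real systems can do better with verification steps or worse with correlated failures.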

Technical Solutions

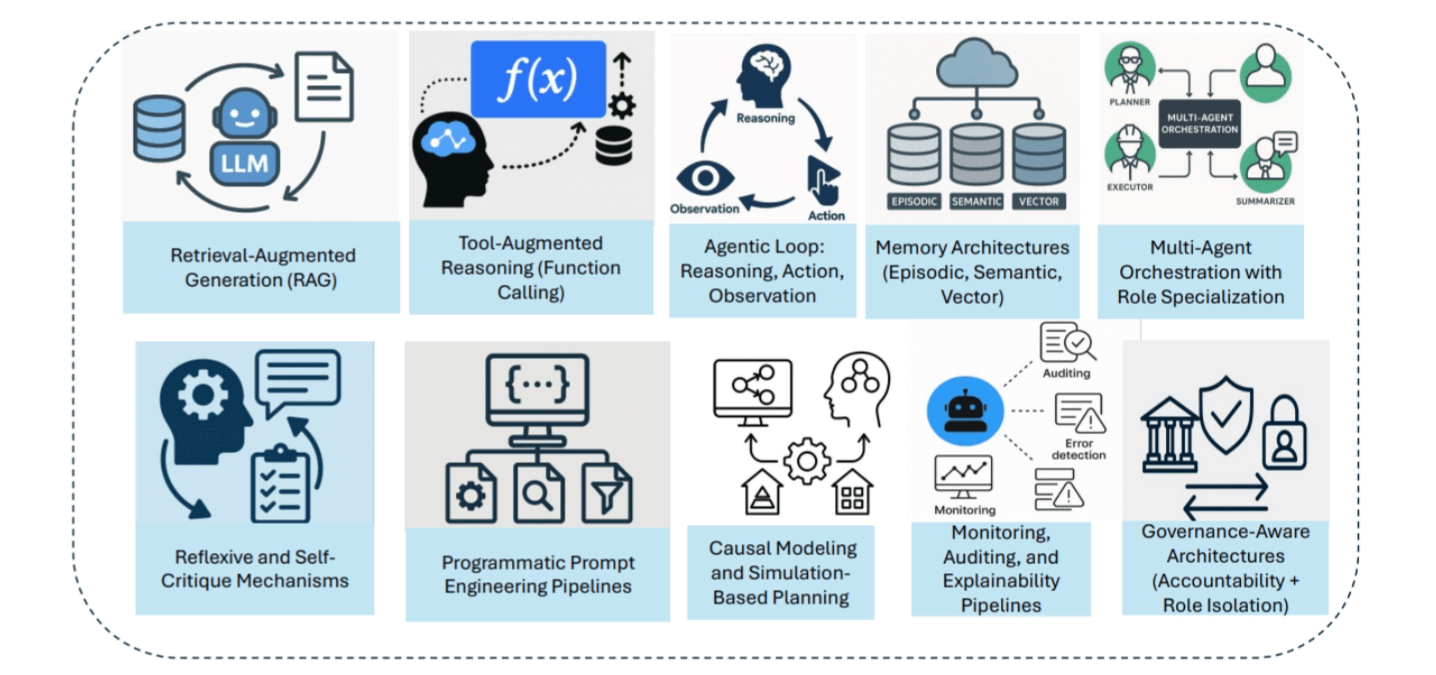

Ten major solutions addressing the diverse challenges faced by AI Agents and Agentic AI:

-

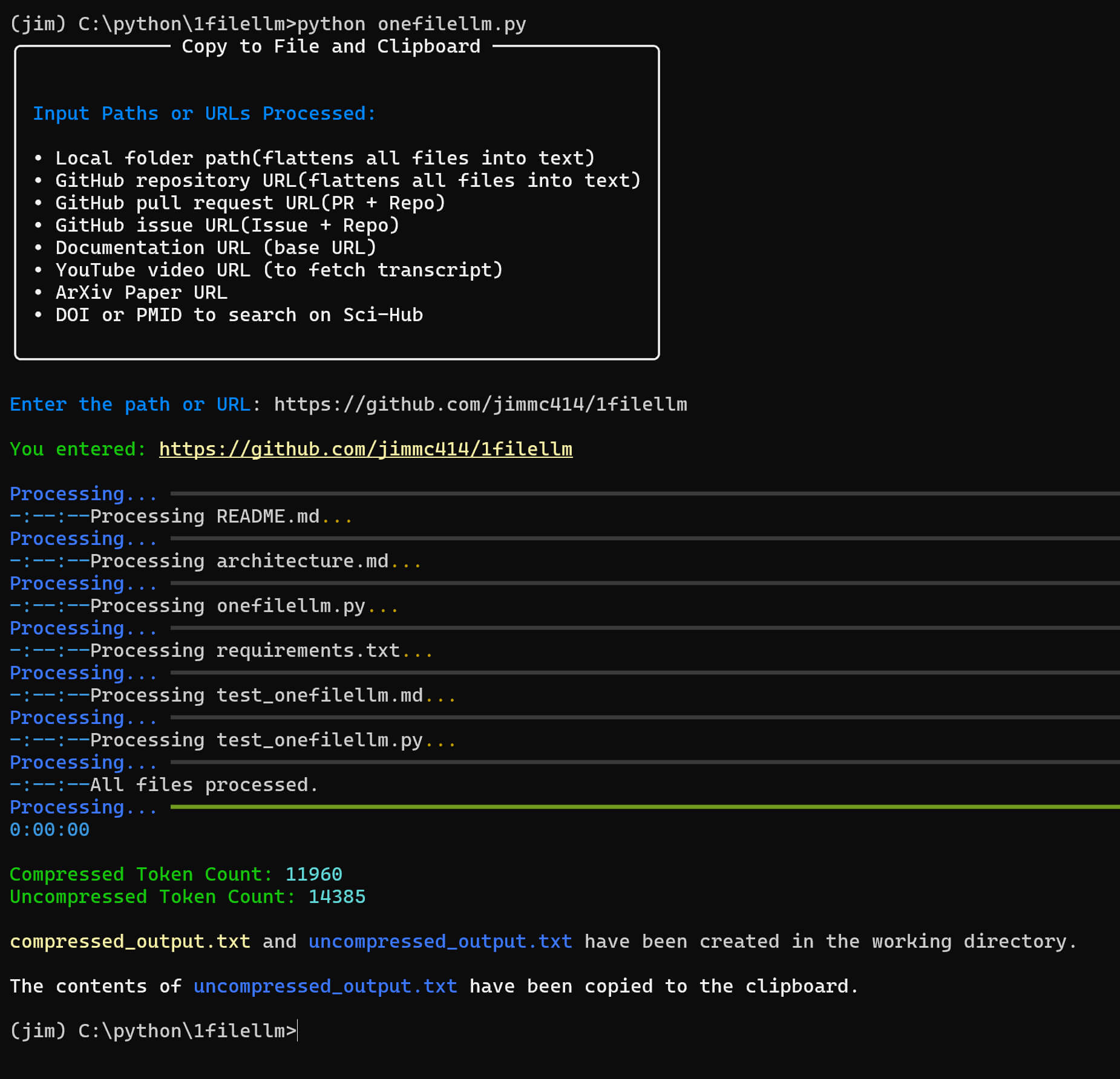

Retrieval-Augmented Generation (RAG): Provides agents with fact-checking capabilities by retrieving real-time external knowledge bases, reducing hallucinations (e.g., customer service agents accessing the latest product database for responses).

-

Tool-Augmented Reasoning: Grants agents API access (e.g., querying weather or stock prices) to extend their problem-solving boundaries (e.g., investment agents automatically fetching financial data to aid decisions).

-

Agent Behavior Loop: Establishes a closed loop of “reasoning-action-observation” (e.g., writing agents first outline then generate content in sections) to enhance decision rigor.

-

Hierarchical Memory Architecture: Utilizes a three-layer storage system combining episodic memory (short-term conversation), semantic memory (long-term knowledge), and vector memory (fast retrieval).

-

Role-Based Multi-Agent Orchestration: Divides labor like company departments (e.g., MetaGPT’s CEO/CTO roles), leveraging specialization to improve collaboration efficiency.

-

Self-Critique Mechanism: Implements verification agents (e.g., auditor roles) to cross-check outputs and reduce error propagation risks.

-

Programmatic Prompt Engineering: Uses templated prompts (e.g., “You are an experienced doctor”) to standardize agent behavior and reduce randomness.

-

Causal Modeling: Constructs causal graphs to distinguish correlation from causation (e.g., “cough–cold”) enhancing reasoning reliability.

-

Explainability Pipeline: Records complete decision logs (e.g., AutoGen’s dialogue history) to support fault tracing and accountability.

-

Governance-Aware Architecture: Enforces sandbox isolation (e.g., trading limits for financial agents) and RBAC permission control to ensure system security.
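Several of the solutions above compose naturally. The sketch below combines a reasoning-action-observation loop, tool access, a decision log, and a role-based permission check. It is a minimal illustration under stated assumptions: the tool names, the `PERMISSIONS` table, the `run_agent` function, and the `stub_policy` that stands in for an LLM are all hypothetical, and the tools return canned strings rather than calling real APIs.

```python
from typing import Callable

# Hypothetical tool registry; real tools would call external APIs.
TOOLS: dict = {
    "get_weather": lambda city: f"{city}: 12 C, rain",
    "get_price": lambda ticker: f"{ticker}: 101.5",
}

# Hypothetical role-to-tool permissions (a minimal RBAC table).
PERMISSIONS = {
    "assistant": {"get_weather"},
    "analyst": {"get_weather", "get_price"},
}


def run_agent(role: str, policy: Callable, goal: str, max_steps: int = 5):
    """Reason -> act -> observe until the policy returns a final answer."""
    log = []                                   # decision log for fault tracing
    observation = None
    for step in range(max_steps):
        decision = policy(goal, observation)   # "reasoning" step (stubbed)
        if decision["type"] == "final":
            log.append({"step": step, "final": decision["answer"]})
            return decision["answer"], log
        tool, arg = decision["tool"], decision["arg"]
        if tool not in PERMISSIONS.get(role, set()):   # governance check
            observation = f"denied: {role} may not call {tool}"
        else:
            observation = TOOLS[tool](arg)             # action
        log.append({"step": step, "tool": tool, "arg": arg,
                    "observation": observation})
    return None, log                           # step budget exhausted


def stub_policy(goal: str, observation):
    # Stand-in for an LLM: request one tool call, then answer from the result.
    if observation is None:
        return {"type": "tool", "tool": "get_price", "arg": "ACME"}
    return {"type": "final", "answer": f"Result for '{goal}': {observation}"}


answer, log = run_agent("analyst", stub_policy, "check ACME")
denied_answer, denied_log = run_agent("assistant", stub_policy, "check ACME")
print(answer)
print(denied_answer)
```

The same policy produces a useful answer for the `analyst` role and a denial for the `assistant` role, and both runs leave a step-by-step log that could feed an explainability pipeline.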

Future Directions

AI Agents: From Passive Response to Proactive Intelligence.

- Autonomous Decision-Making: No longer limited to simple task execution, agents will proactively reason based on context and goals, autonomously planning action paths (e.g., smart assistants proactively reminding of meetings and preparing materials).

- Tool Integration: Deep integration with external APIs, databases, and physical devices (e.g., robots accessing industry knowledge bases or controlling robotic arms) to extend capability boundaries.

- Causal Reasoning: Moving beyond correlation analysis to understand the “why,” enhancing decision reliability (e.g., medical diagnostic agents distinguishing causal chains of symptoms).

- Continuous Learning: Optimization through online learning and feedback (e.g., recommendation systems adapting to user preferences in real time), achieving long-term performance improvement.

- Trust & Safety: Introducing explainability, audit logs, and value alignment mechanisms to ensure AI behavior is ethical and controllable.

Agentic AI: From Single Agents to Systemic Intelligence.

- Multi-Agent Scaling: Building large-scale collaborative networks (e.g., thousands of agents collaboratively optimizing traffic signals in urban traffic management).

- Unified Orchestration: Developing standardized communication protocols and scheduling frameworks (similar to Kubernetes for containers) to enable cross-platform agent collaboration.

- Persistent Memory: Supporting long-term knowledge retention (e.g., research agent systems accumulating domain research history) to avoid redundant learning.

- Simulation Planning: Rehearsing decisions in virtual environments (e.g., autonomous driving agents testing extreme scenarios via digital twins) to reduce real-world risks.

- Ethical Governance: Establishing accountability mechanisms for multi-agent systems (e.g., blockchain-based evidence preservation) to ensure compliance with legal and social norms.