The Stanford University course CS336, “Language Modeling from Scratch”

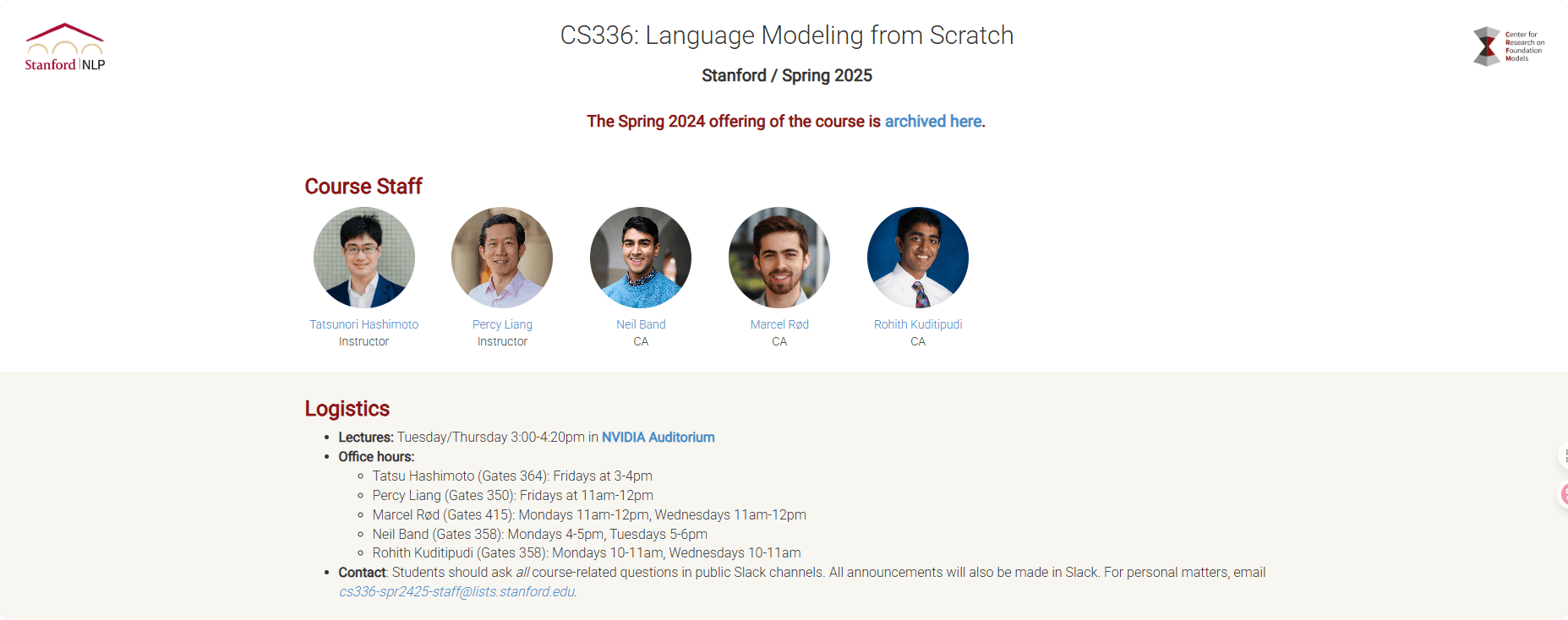


Course Overview

Stanford University’s CS336, Language Modeling from Scratch, is a deep learning course on how language models are built. Through hands-on practice, students work through the entire development workflow, from data collection and model building to training and evaluation. Modeled on the structure of operating systems courses, CS336 requires students to build a complete language model from scratch, including a tokenizer, the Transformer architecture, an optimizer, and other key components.

Course Objectives

Through this course, students will gain a deep understanding of the internal mechanisms of language models and learn how to optimize models to tackle challenges in large-scale training and real-world applications.

Course Content

- Course Focus: CS336 aims to provide students with a comprehensive understanding of the language model development pipeline, including data collection and preprocessing, Transformer model construction, training, and evaluation.
- Pedagogical Approach: Drawing inspiration from operating systems courses, this course emphasizes a bottom-up approach, requiring students to build a full language model from scratch.

Prerequisites

- Python Proficiency: Most assignments are completed in Python, so strong programming skills are required.
- Deep Learning & Systems Experience: Familiarity with PyTorch and basic system concepts (e.g., memory hierarchy) is essential.
- Mathematics: A solid foundation in linear algebra, probability, and statistics is required.
- Machine Learning Basics: Students should have a basic understanding of machine learning and deep learning concepts.
- Course Intensity: This is a 5-credit course with a strong emphasis on practical implementation, requiring a significant time investment.

Course Assignments

- Assignment 1: Implement basic components of a Transformer-based language model (tokenizer, model architecture, optimizer), and train a minimal language model.
- Assignment 2: Use advanced tools for performance analysis and optimization, implement a Triton version of FlashAttention2, and build distributed training code.
- Assignment 3: Analyze each Transformer component and use training APIs to model and understand scaling laws.
- Assignment 4: Convert raw Common Crawl data into pretraining-ready datasets, applying filtering and deduplication to enhance model performance.
- Assignment 5: Apply supervised fine-tuning and reinforcement learning to train a language model to solve math problems, with an optional implementation of safety alignment techniques.
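
To give a feel for the deduplication step mentioned in Assignment 4, here is a minimal sketch of exact deduplication by content hash. It is only an illustration, not the course's reference solution: the function names `normalize` and `dedup_exact` are hypothetical, and the normalization rule (lowercasing and collapsing whitespace) is an assumption. Real pipelines typically also use fuzzy methods, which this sketch does not cover.

```python
import hashlib


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace (an assumed, simplistic normalization)."""
    return " ".join(text.lower().split())


def dedup_exact(docs):
    """Drop documents whose normalized text was already seen, keeping first occurrences."""
    seen = set()    # SHA-256 digests of normalized documents seen so far
    unique = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


if __name__ == "__main__":
    pages = ["Hello  world", "hello world", "Another page"]
    print(dedup_exact(pages))  # ['Hello  world', 'Another page']
```

Storing digests rather than full documents keeps memory use proportional to the number of distinct documents, which matters when the input is a large web crawl.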

Official Website

- Course website: https://stanford-cs336.github.io/spring2025/

Additional Information

- GPU Resources: Students are encouraged to use cloud GPU resources for assignments. The course provides several recommended cloud service options.
- Academic Integrity: Students may consult AI tools for low-level programming help or high-level conceptual questions but are prohibited from using AI tools to directly solve assignments.
- Assignment Submission: All assignments are submitted via Gradescope. Late days are allowed, up to a maximum of 3 days per assignment.
