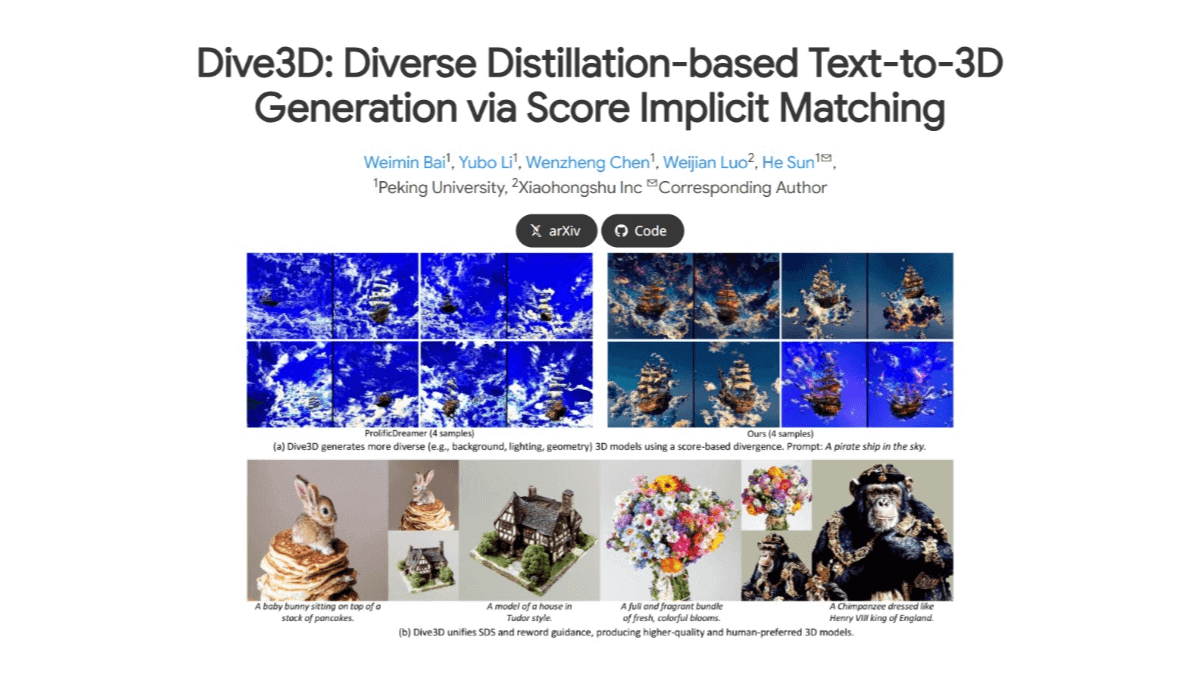

Dive3D – A Text-to-3D Generation Framework Jointly Developed by Peking University and Xiaohongshu

What is Dive3D?

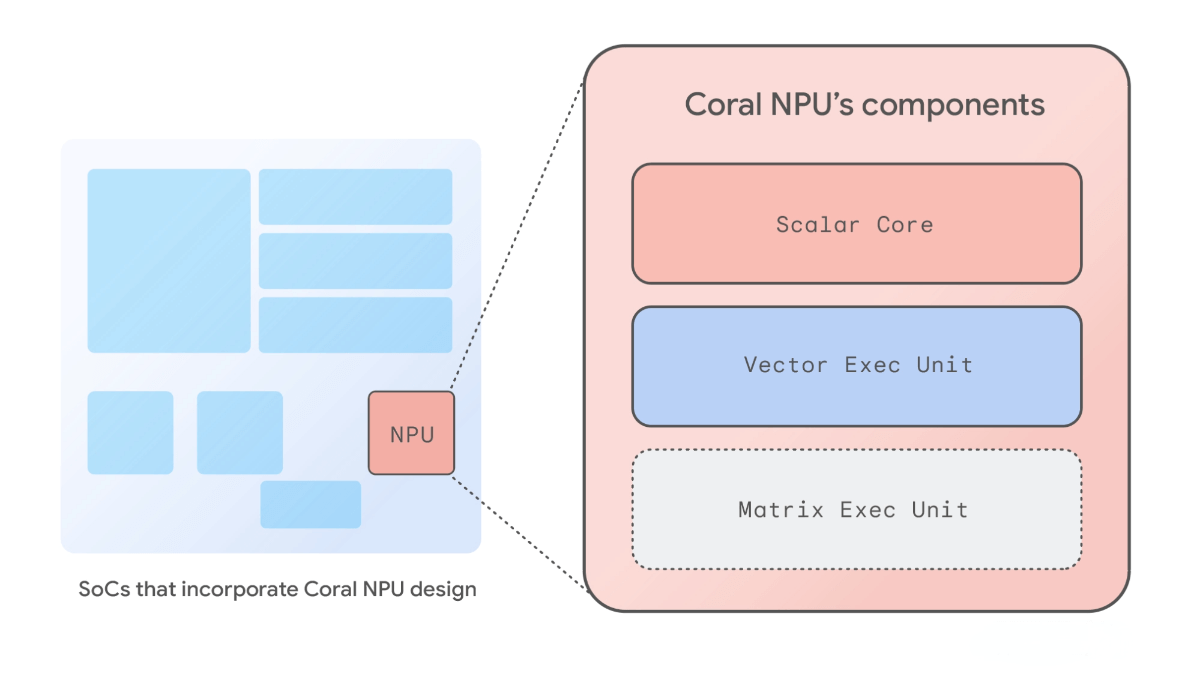

Dive3D is a text-to-3D generation framework jointly developed by Peking University and Xiaohongshu. It replaces the KL-divergence-based objective used in traditional score distillation with a new Score Implicit Matching (SIM) loss. This avoids mode collapse and significantly improves the diversity of the generated 3D content. Dive3D performs strongly on text alignment, human preference, and visual fidelity, and achieves strong quantitative results on the GPTEval3D benchmark, showing that it can generate 3D assets that are both high-quality and diverse.

Key Features of Dive3D

- Diverse 3D Content Generation: Generates 3D models in various styles and levels of detail from text prompts, avoiding the common mode collapse problem that makes outputs overly uniform and similar.
- High-Quality 3D Models: Produces models with high visual fidelity, including detailed textures, realistic geometry, and appropriate lighting effects.
- Strong Text-to-3D Alignment: Generates 3D models that closely match the input text, accurately reflecting the elements and features it describes.
- Support for Multiple 3D Representations: Can generate different 3D representations, such as Neural Radiance Fields (NeRF), Gaussian Splatting, and meshes, to suit different use cases and user needs.

Technical Foundations of Dive3D

- Score Implicit Matching (SIM) Loss: The core innovation of Dive3D. Traditional objectives such as Score Distillation Sampling (SDS) are based on KL divergence and tend to be mode-seeking, so outputs collapse toward a few similar results. SIM instead directly matches the score (the gradient of the log probability density) of the generated content's distribution to that of the diffusion prior. This encourages the model to explore multiple high-probability regions, improving diversity while preserving fidelity.
- Unified Divergence Perspective Framework: Dive3D unifies diffusion distillation and reward-guided optimization in a single divergence-based framework built from three loss functions: the Conditional Diffusion Prior (CDP) loss, the Unconditional Diffusion Prior (UDP) loss, and the Explicit Reward (ER) loss. By combining and weighting these losses appropriately, the framework balances diversity, text alignment, and visual quality.
- Diffusion-Based Optimization: Dive3D uses pre-trained 2D diffusion models (e.g., Stable Diffusion) as priors. It renders the 3D representation into 2D images from multiple viewpoints and iteratively refines it so that the renderings match the image distribution expected by the diffusion model, aligning the visual appearance with the text prompt.
- Efficient Optimization: Dive3D uses Classifier-Free Guidance (CFG) to balance the conditional and unconditional predictions of the diffusion model, together with carefully tuned noise schedules and step sizes that accelerate convergence and reduce generation time.
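The quantities named in the list above can be made concrete with a toy Python sketch. It assumes a one-dimensional, two-component Gaussian mixture as a stand-in for the diffusion prior (the real prior is a 2D image diffusion model such as Stable Diffusion). It numerically checks that the score is the gradient of the log density, shows the standard classifier-free guidance combination of unconditional and conditional noise predictions, and combines three loss values with weights. The mixture parameters, the function names `log_density`, `score`, `cfg`, and `total_loss`, and the default weights are hypothetical illustrations, not code or values from the Dive3D repository.

```python
import numpy as np

# Hypothetical toy prior: a symmetric two-component 1D Gaussian mixture.
# It stands in for a diffusion prior purely for illustration.
WEIGHTS = np.array([0.5, 0.5])
MEANS = np.array([-2.0, 2.0])
STDS = np.array([0.5, 0.5])


def log_density(x: float) -> float:
    """log p(x) for the toy mixture."""
    comps = WEIGHTS * np.exp(-0.5 * ((x - MEANS) / STDS) ** 2) / (STDS * np.sqrt(2.0 * np.pi))
    return float(np.log(comps.sum()))


def score(x: float) -> float:
    """Analytic score d/dx log p(x) = sum_k r_k(x) * (mu_k - x) / sigma_k**2."""
    # Unnormalized component densities; the shared 1/sqrt(2*pi) factor cancels below.
    comps = WEIGHTS * np.exp(-0.5 * ((x - MEANS) / STDS) ** 2) / STDS
    resp = comps / comps.sum()  # posterior responsibility r_k(x) of each component
    return float(np.sum(resp * (MEANS - x) / STDS ** 2))


def cfg(eps_uncond: np.ndarray, eps_cond: np.ndarray, guidance_scale: float) -> np.ndarray:
    """Standard classifier-free guidance on noise predictions.

    For a diffusion model, a noise prediction relates to the score by
    score = -eps / sigma_t, so the same linear combination applies to scores.
    """
    return eps_uncond + guidance_scale * (eps_cond - eps_uncond)


def total_loss(cdp: float, udp: float, er: float,
               w_cdp: float = 1.0, w_udp: float = 1.0, w_er: float = 1.0) -> float:
    """Weighted sum of CDP, UDP, and ER loss values (weights are hypothetical)."""
    return w_cdp * cdp + w_udp * udp + w_er * er


if __name__ == "__main__":
    x, h = 0.3, 1e-5
    # Central finite difference of log p should match the analytic score.
    finite_diff = (log_density(x + h) - log_density(x - h)) / (2 * h)
    print(score(x), finite_diff)
    print(cfg(np.array([0.0]), np.array([1.0]), guidance_scale=7.5))
    print(total_loss(1.0, 2.0, 3.0, w_er=0.5))
```

For example, `score(0.0)` is 0 because the toy mixture is symmetric, and with `guidance_scale=1.0` the `cfg` helper returns the conditional prediction; larger scales extrapolate past it, which typically strengthens prompt adherence at some cost to diversity.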

Project Links for Dive3D

- Official Website: https://ai4scientificimaging.org/dive3d/
- GitHub Repository: https://github.com/ai4imaging/dive3d
- arXiv Technical Paper: https://arxiv.org/pdf/2506.13594

Application Scenarios of Dive3D

- Game Development: Quickly generate characters, props, and environments from script descriptions, reducing the workload of game artists and enabling faster iteration.
- Film and Animation Production: Provide creative prototypes and concept designs for movies, TV shows, and animated films by turning text descriptions from scripts into 3D models for easier visualization.
- Architectural Design: Generate building models from text descriptions, helping architects present design concepts quickly and compare or refine different schemes.
- Virtual Scene Construction: Produce realistic scenes and objects for VR and AR applications. For example, in virtual tourism, users can enter a description of a location to generate an immersive 3D environment.
- Science Education: Create complex scientific models, such as biological cells or molecular structures, to help students visualize and understand abstract scientific concepts.
