Next-Frame Diffusion – A self-regressive video generation model jointly developed by Peking University and Microsoft

What is Next-Frame Diffusion?

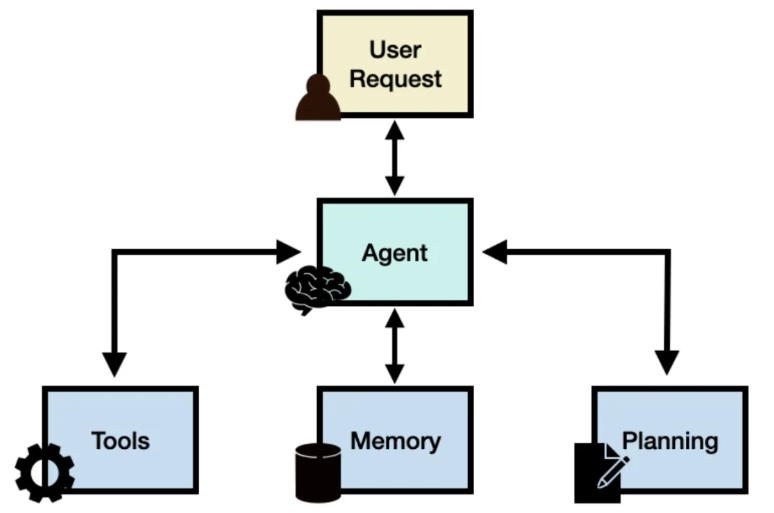

Next-Frame Diffusion (NFD) is an autoregressive video generation model jointly developed by Peking University and Microsoft Research. It combines the high-fidelity generation capability of diffusion models with the causality and controllability of autoregressive models. NFD leverages a Block-wise Causal Attention mechanism and a Diffusion Transformer to enable efficient, frame-level video generation. While ensuring video quality and temporal coherence, the model achieves real-time video generation at over 30 FPS.

It further enhances sampling efficiency through techniques such as Consistency Distillation and Speculative Sampling. NFD has demonstrated outstanding performance in large-scale action-conditioned video generation tasks, significantly surpassing existing methods.

Key Features of Next-Frame Diffusion

-

Real-Time Video Generation: Capable of generating videos at over 30 FPS on high-performance GPUs, making it ideal for interactive applications requiring rapid responses such as games, virtual reality, and real-time video editing.

-

High-Fidelity Video Synthesis: Produces visually rich and detailed video content in continuous space, outperforming traditional autoregressive models in capturing fine-grained details and textures.

-

Action-Conditioned Generation: Dynamically generates video content based on real-time user input, offering high flexibility and controllability in interactive environments.

-

Long-Term Video Generation: Supports generation of videos with arbitrary lengths, making it suitable for applications requiring long-term coherence, such as storytelling or environment simulation.

Technical Principles Behind Next-Frame Diffusion

-

Block-wise Causal Attention: At the core of NFD is a block-wise causal attention mechanism that combines bidirectional attention within each frame (capturing spatial dependencies) and causal attention across frames (ensuring temporal coherence). Each frame can only attend to preceding frames to maintain causal consistency in generation.

-

Diffusion Model and Diffusion Transformer: Built upon the diffusion generation principle, the model generates video frames through iterative denoising. The Diffusion Transformer, a key component of NFD, utilizes the powerful modeling capabilities of the Transformer architecture to handle both spatial and temporal dependencies.

-

Consistency Distillation: To accelerate the sampling process, NFD introduces Consistency Distillation, extending the Simplified Consistency Model (sCM) from the image domain to video. This significantly improves sampling speed while preserving video quality.

-

Speculative Sampling: This technique uses the consistency of action inputs across neighboring frames to pre-generate future frames. If the model detects changes in input actions later, it discards the speculative frames and resumes generation from the last validated frame, reducing inference time and enhancing real-time performance.

-

Action Conditioning: NFD supports action-conditioned inputs to guide the direction and content of video generation. These inputs can be user commands, control signals, or other forms of conditional data, enabling responsive and controllable video synthesis.

Project Resources

-

Project Website: https://nextframed.github.io/

-

arXiv Technical Paper: https://arxiv.org/pdf/2506.01380

Application Scenarios for Next-Frame Diffusion

-

Game Development: Real-time dynamic game environments generated based on player actions, enhancing immersion and responsiveness.

-

Virtual Reality (VR) and Augmented Reality (AR): Real-time generation of immersive virtual scenes for next-generation VR and AR experiences.

-

Video Content Creation: High-quality video synthesis for advertising, film, and TV production workflows.

-

Autonomous Driving and Robotics: Simulated scenarios and behaviors for autonomous vehicles and robots, useful for training and testing.

-

Education and Training: Generation of virtual experiment environments to support student learning and scientific exploration.

Related Posts