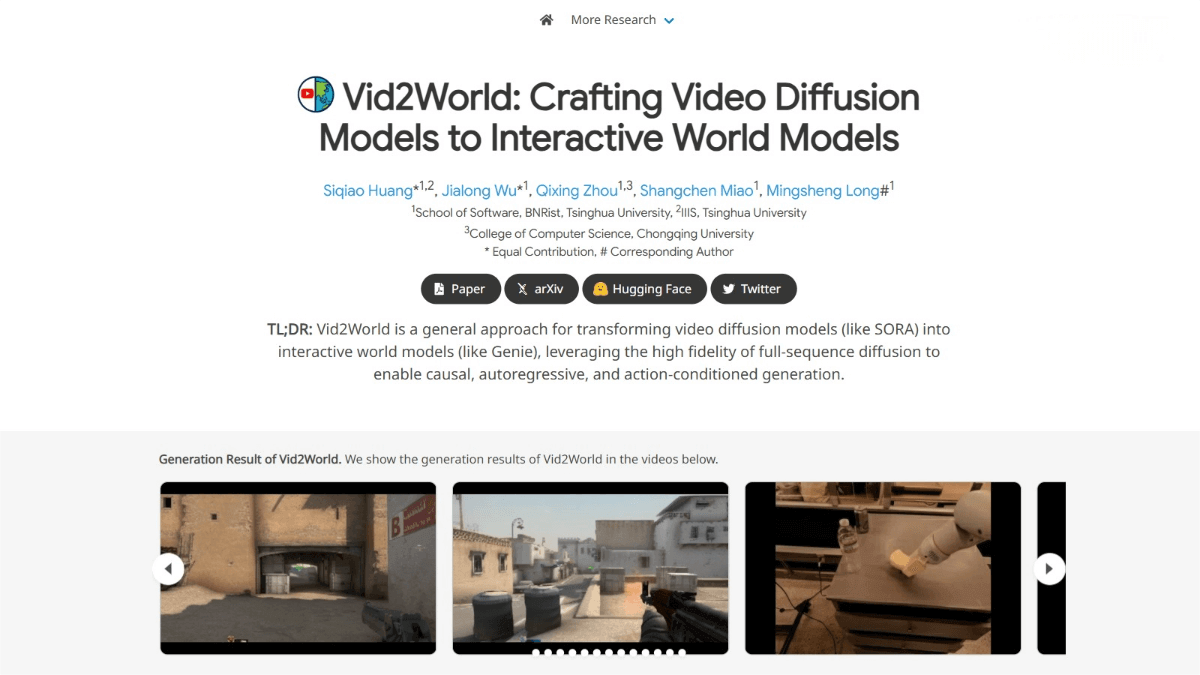

ImmerseGen – A 3D World Generation Framework Jointly Developed by ByteDance and Zhejiang University

What is ImmerseGen?

ImmerseGen is a 3D world generation framework developed by ByteDance's PICO team in collaboration with Zhejiang University. It creates immersive 3D environments from text prompts using an agent-guided asset design and arrangement system. Rather than relying on complex 3D models, it builds full panoramic worlds from compact proxies with alpha-textured assets, which keeps scenes lightweight while preserving visual diversity and realism. Dynamic visual effects and synthesized ambient sound add multimodal immersion, making ImmerseGen particularly suitable for VR applications.

Key Features of ImmerseGen

- Base Terrain Generation: Generates foundational terrain from the user's text prompt by retrieving base terrain assets, applying terrain-aware texture synthesis, and producing RGBA terrain textures and skyboxes aligned with the base mesh.

- Rich Environmental Composition: Asset agents powered by vision-language models (VLMs) select lightweight assets, write detailed asset prompts, and determine their spatial arrangement. Each asset is instantiated with a context-aware RGBA texture and alpha blending for seamless scene integration.

- Multimodal Immersive Enhancements: Adds dynamic visual effects and synthesized environmental audio to increase the sensory richness and realism of the generated 3D world.
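The alpha blending mentioned in the composition step can be illustrated with the standard "over" compositing operator. The following is a minimal sketch in plain Python, not ImmerseGen's implementation: the function name `blend_over` and the pixel format (floats in the range 0 to 1, straight alpha) are assumptions made for illustration.

```python
from typing import Tuple

# Assumed pixel format: (r, g, b, a) with channels in [0.0, 1.0], straight alpha.
RGBA = Tuple[float, float, float, float]


def blend_over(fg: RGBA, bg: RGBA) -> RGBA:
    """Composite a foreground pixel over a background pixel ('over' operator)."""
    f_r, f_g, f_b, f_a = fg
    b_r, b_g, b_b, b_a = bg

    # Coverage of the result: foreground plus whatever background shows through.
    out_a = f_a + b_a * (1.0 - f_a)
    if out_a == 0.0:
        # Both pixels are fully transparent; the color is undefined, return zeros.
        return (0.0, 0.0, 0.0, 0.0)

    def channel(f: float, b: float) -> float:
        # Weight each color by its visible coverage, then normalize.
        return (f * f_a + b * b_a * (1.0 - f_a)) / out_a

    return (channel(f_r, b_r), channel(f_g, b_g), channel(f_b, b_b), out_a)


if __name__ == "__main__":
    leaf = (0.0, 1.0, 0.0, 0.5)  # half-transparent green asset texel
    sky = (0.0, 0.0, 1.0, 1.0)   # opaque blue background
    print(blend_over(leaf, sky))
```

Where the asset texture's alpha is 0, the background passes through unchanged, which is what lets a flat textured card blend into the scene without visible edges.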

Technical Principles Behind ImmerseGen

- Agent-Guided Asset Design & Arrangement: Agents driven by VLMs interpret the text prompt to select appropriate templates, write detailed asset descriptions, and determine placement, so that the resulting scene matches user intent.

- Terrain-Conditioned Texture Synthesis: During base terrain creation, the system uses texture synthesis to generate realistic terrain textures and skyboxes that align with the underlying 3D mesh.

- Context-Aware RGBA Texture Synthesis: Each placed asset undergoes context-aware texture generation, which uses surrounding environmental cues to produce alpha-blended RGBA textures and improve visual coherence.

- Multimodal Fusion: Combines real-time visual effects with synthetic audio to provide immersive audiovisual feedback for VR/AR users.
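The flow described above (interpret the prompt, pick a template, describe and place assets) can be pictured as a small planning step that outputs a scene description. The sketch below is purely illustrative and does not reflect ImmerseGen's actual code: `TERRAIN_TEMPLATES`, `ScenePlan`, `AssetPlacement`, `select_terrain`, and `plan_scene` are hypothetical names, and a simple keyword match stands in for the VLM agent.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical template library keyed by terrain name (an assumption for
# illustration; the source only says base terrain assets are retrieved).
TERRAIN_TEMPLATES: Dict[str, List[str]] = {
    "mountain": ["mountain", "alpine", "peak"],
    "desert": ["desert", "dune", "sand"],
    "lake": ["lake", "shore", "water"],
}


@dataclass
class AssetPlacement:
    prompt: str                    # detailed description passed to texture synthesis
    position: Tuple[float, float]  # (x, y) on the terrain, arbitrary units


@dataclass
class ScenePlan:
    terrain: str
    assets: List[AssetPlacement] = field(default_factory=list)


def select_terrain(user_prompt: str) -> str:
    """Keyword-matching stand-in for the VLM agent's template selection.

    Ties and prompts with no keyword hits fall back to the first template.
    """
    words = user_prompt.lower().split()
    scores = {
        name: sum(keyword in words for keyword in keywords)
        for name, keywords in TERRAIN_TEMPLATES.items()
    }
    return max(scores, key=scores.get)


def plan_scene(user_prompt: str, asset_names: List[str]) -> ScenePlan:
    """Build a scene plan: one terrain plus one placement per requested asset."""
    plan = ScenePlan(terrain=select_terrain(user_prompt))
    for index, name in enumerate(asset_names):
        plan.assets.append(
            AssetPlacement(
                prompt=f"{name} in a {plan.terrain} scene, matching: {user_prompt}",
                # Placeholder layout: space assets evenly along the x axis.
                position=(index * 10.0, 0.0),
            )
        )
    return plan


if __name__ == "__main__":
    print(plan_scene("a quiet lake shore at sunrise", ["pine tree", "rock"]))
```

In the framework as described, the selection and placement decisions come from VLM-driven agents rather than fixed rules; the value of a plan structure like this is that each placement carries its own prompt, so texture synthesis can be conditioned on both the asset and its surroundings.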

Project Links

- Official Website: https://immersegen.github.io/

- Technical Paper: https://immersegen.github.io/static/assets/paper/paper.pdf

Application Scenarios

- Virtual Reality (VR) and Augmented Reality (AR): ImmerseGen enables the generation of lifelike 3D environments for VR experiences such as virtual tourism, meetings, and exhibitions. In AR, it supports blending content with the physical world, aiding fields like industrial and architectural visualization.

- Game Development: Helps developers rapidly prototype game environments, saving time and resources. It can dynamically generate worlds based on gameplay and story progression, offering players a highly varied experience.

- Architecture and Urban Design: Creates virtual 3D models of buildings and cityscapes, helping architects and clients visualize plans and provide early-stage feedback.

- Education: Facilitates the creation of interactive virtual labs and educational simulations, increasing student engagement and learning efficiency.

- Film and Media Production: Generates virtual scenes for film production, reducing on-site shooting costs. The 3D environments serve as bases for visual effects, speeding up post-production workflows.
