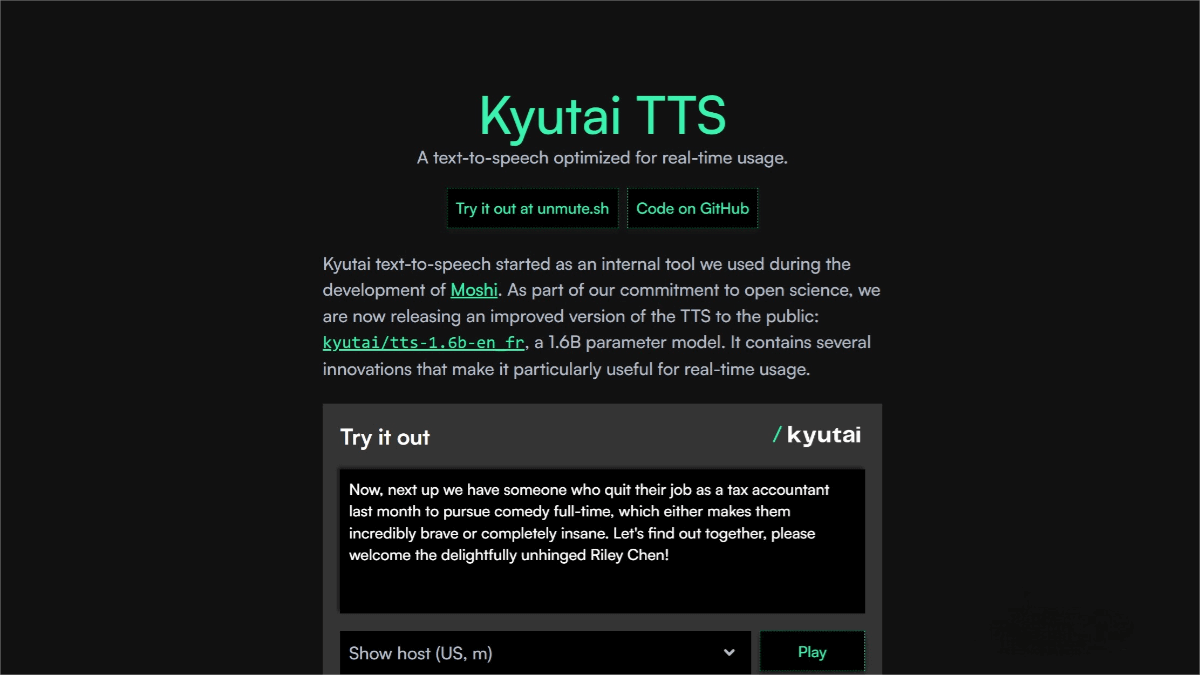

Kyutai TTS – A streaming text-to-speech technology developed by Kyutai Labs

What is Kyutai TTS?

Kyutai TTS is a streaming text-to-speech (TTS) technology developed by the French AI research organization Kyutai Labs. It is an innovative speech synthesis system capable of converting text into natural, fluid speech in real time, without requiring complete text input to begin generating audio. With extremely low latency (just 220 milliseconds), it excels in real-time interactive scenarios such as smart customer service, live translation, and streaming. It supports English and French and features voice cloning, allowing it to match a speaker’s tone and intonation using just a 10-second audio sample. Kyutai TTS also enables long-form speech generation, overcoming the duration limitations of traditional TTS systems, making it suitable for applications like news broadcasting and audiobooks.

Kyutai TTS Key Features

-

Streaming Text Processing: Supports streaming text input, allowing audio generation to begin before the full text is available. Ideal for real-time interactions like smart customer service, live translation, and streaming.

-

Low Latency: On a single NVIDIA L40S GPU, Kyutai TTS can handle 32 concurrent requests with a latency of just 350 milliseconds, ensuring rapid responses for high user demand.

-

High-Fidelity Voice Cloning: Can clone a voice from a 10-second audio sample, producing natural and fluent speech with a speaker similarity score of 77.1% (English) and 78.7% (French). Word error rates (WER) are 2.82% and 3.29%, respectively.

-

Long-Form Text Generation: Breaks the 30-second limit of traditional TTS systems, enabling the processing of lengthy articles for applications like news broadcasts and audiobooks.

-

Multilingual Support: Currently supports English and French.

Technical Principles of Kyutai TTS

-

Delay-Stream Modeling (DSM): The core architecture of Kyutai TTS treats speech and text as two time-aligned data streams. The text stream is slightly delayed relative to the audio stream, allowing the model to “see a bit of the future speech,” improving accuracy and naturalness. During inference, the model progresses step-by-step without waiting for complete audio input, enabling real-time streaming generation.

-

Audio Codec: Uses a custom causal audio codec (e.g., Mimi) to encode speech into low-frame-rate discrete tokens, supporting real-time streaming while maintaining high-quality output.

-

High Concurrency & Low Latency: On a single NVIDIA L40S GPU, Kyutai TTS can process 32 concurrent requests with a latency of just 350 milliseconds.

-

Voice Cloning & Personalization: The model can clone voices from a 10-second sample, matching the original speaker’s pitch, tone, emotion, and recording quality.

-

Word-Level Timestamps: Each word in the generated speech is timestamped, enabling real-time captioning and interactive applications.

Kyutai TTS Project Links

-

Official Website: https://kyutai.org/next/tts

Applications of Kyutai TTS

-

Smart Customer Service: Its low latency allows instant voice responses in customer service interactions, improving efficiency and user experience.

-

Real-Time Translation: Facilitates seamless communication in international business meetings and academic exchanges by quickly converting translated text into speech.

-

Video Conferencing & Live Streaming: Provides real-time captioning for videos and live streams, enhancing audience comprehension.

-

Education: Offers high-quality text-to-speech services for visually impaired individuals and enhances e-learning platforms with dynamic voiceovers.

-

Media Production: Generates long-form speech for news broadcasts, audiobooks, and other media applications.

-

Voice Navigation: Supports high-concurrency scenarios like in-car navigation and public transit announcements, delivering clear and timely voice prompts.

Related Posts