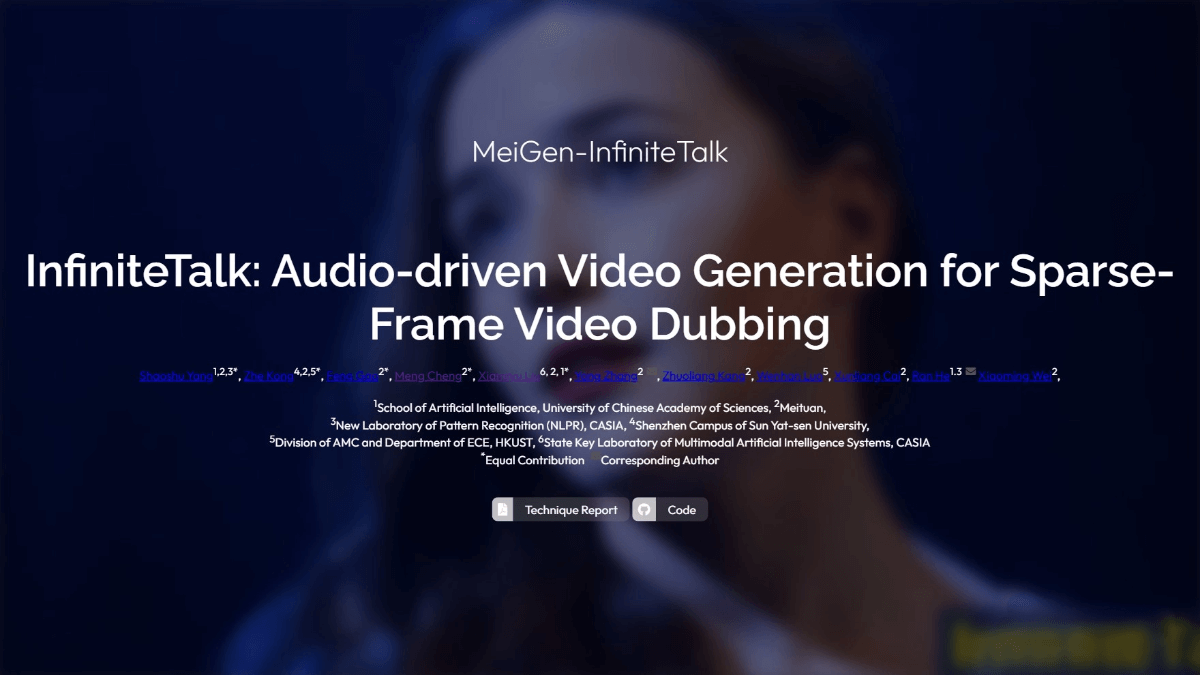

InfiniteTalk – a digital human video generation framework open-sourced by Meituan

What is InfiniteTalk?

InfiniteTalk is a digital human driving technology developed by Meituan’s Visual Intelligence Division. It uses a sparse-frame video dubbing paradigm to generate natural, fluid digital human videos from only a few keyframes. This approach targets a common problem in traditional techniques, where lip movements, facial expressions, and body gestures fall out of sync. InfiniteTalk aims to improve the immersion and realism of digital human videos while keeping generation efficient and inexpensive. Its paper, code, and model weights are open source, providing a reference for further work on digital human technology.

Key Features

- Efficient digital human driving: Generates natural and fluid videos from only a few keyframes, keeping lip movements, facial expressions, and body gestures closely synchronized.
- Versatile scenario adaptation: Suitable for virtual anchors, customer service avatars, virtual actors, and other applications, providing cost-effective digital human solutions across industries.
- High-efficiency video generation: Uses sparse-frame driving and temporal interpolation to produce high-quality videos quickly, reducing production time and cost.

Technical Principles of InfiniteTalk

- Sparse-frame video dubbing paradigm: Only a few keyframes are needed to capture the main variations in lip movements, expressions, and body gestures. Intermediate frames are generated via temporal interpolation to form a complete video sequence. Interpolation algorithms fill the time gaps between keyframes, while fusion techniques smooth the transitions between actions, expressions, and lip movements, resulting in coherent video content.
- Multimodal fusion and optimization: Combines text, audio, and visual information. For example, speech recognition extracts content from the audio, which is combined with text input to control the digital human’s lip movements and expressions. Deep learning-based optimization then fine-tunes movements, expressions, and lip-sync so that they stay consistent with the audio and text, enhancing realism.
- Efficient computational architecture: Lightweight deep learning models reduce computational resource consumption while maintaining performance. Parallel computing handles multiple tasks during video generation, further improving speed and efficiency.
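
To make the keyframe idea more concrete, here is a toy sketch of temporal interpolation between sparse keyframes. It is not InfiniteTalk's code: the real system relies on learned models, while this example uses plain linear interpolation purely for illustration. The function name `interpolate_keyframes` and the two-value parameter vectors (imagine "mouth openness" and "head tilt") are hypothetical and chosen only for this example.

```python
from bisect import bisect_right
from typing import Dict, List


def interpolate_keyframes(
    keyframes: Dict[int, List[float]], num_frames: int
) -> List[List[float]]:
    """Fill in per-frame parameter vectors from sparse keyframes.

    `keyframes` maps a frame index to a parameter vector (hypothetical
    values such as mouth openness and head tilt). Frames between two
    keyframes are linearly interpolated; frames before the first or
    after the last keyframe hold the nearest keyframe's values.
    """
    if not keyframes:
        raise ValueError("at least one keyframe is required")

    indices = sorted(keyframes)
    frames: List[List[float]] = []
    for t in range(num_frames):
        # Position of the first keyframe index strictly greater than t.
        pos = bisect_right(indices, t)
        if pos == 0:
            # Before the first keyframe: hold its values.
            frames.append(list(keyframes[indices[0]]))
        elif pos == len(indices):
            # At or after the last keyframe: hold its values.
            frames.append(list(keyframes[indices[-1]]))
        else:
            left, right = indices[pos - 1], indices[pos]
            w = (t - left) / (right - left)  # 0.0 at left, approaching 1.0 at right
            a, b = keyframes[left], keyframes[right]
            frames.append([(1 - w) * x + w * y for x, y in zip(a, b)])
    return frames


if __name__ == "__main__":
    # Two keyframes, five output frames.
    sparse = {0: [0.0, 0.0], 4: [1.0, 2.0]}
    for i, frame in enumerate(interpolate_keyframes(sparse, 5)):
        print(i, frame)
```

Linear blending is the simplest possible choice. A production system would replace the blend with a learned generator that also conditions on audio, which is where the fusion and optimization steps described above come in.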

Project Links

- Official site: https://meigen-ai.github.io/InfiniteTalk/
- GitHub repository: https://github.com/MeiGen-AI/InfiniteTalk
- HuggingFace model hub: https://huggingface.co/MeiGen-AI/InfiniteTalk
- arXiv paper: https://arxiv.org/pdf/2508.14033

Application Scenarios for InfiniteTalk

- Virtual anchors: Provides 24/7 virtual anchors for news, variety shows, and livestreams, improving efficiency and engagement.
- Film and TV production: Speeds up virtual character creation and motion capture for movies and TV shows, reducing production cost and time.
- Game development: Makes in-game virtual character movements more natural and fluid, increasing player immersion.
- Online education: Creates virtual teachers that provide personalized teaching, including live Q&A and course explanations, to improve learning outcomes.
- Training simulations: Supports enterprise training simulations, such as customer service or sales training, letting employees practice in a virtual environment.
